
Curated Package management

Managing EKS Anywhere Curated Packages

EKS Anywhere Curated Packages make it easy to install, configure, and maintain operational components in EKS Anywhere clusters. EKS Anywhere Curated Packages are built, tested, and distributed by AWS to use with EKS Anywhere clusters as part of EKS Anywhere Enterprise Subscriptions.

See Prerequisites to get started.

Check out EKS Anywhere curated packages concepts for more details.

1 - Overview of curated packages

EKS Anywhere curated packages consist of three components: a controller, a CLI, and artifacts.

Package controller

The package controller is responsible for installing, upgrading, configuring, and removing packages from the cluster. It performs these actions by watching the Package and PackageBundle custom resources, and it uses the PackageBundle to determine which packages to run and to set appropriate configuration values.

Package custom resources map to Helm charts that the package controller uses to install package workloads (such as cluster-autoscaler or metrics-server) on your clusters. The PackageBundle object maps each package name to the specific Helm chart and images that will be installed.

The package controller runs only on the management cluster, including single-node clusters, where it performs the responsibilities outlined above. However, packages may be installed on both management and workload clusters. For more information, see the guide on installing packages on workload clusters.

Package release information is stored in a package bundle manifest. The package controller continually monitors for and downloads new package bundles. When a new package bundle is downloaded, it shows up as “available” in the PackageBundleController resource’s status.detail field. A package bundle upgrade always requires manual intervention, as outlined in the package bundles docs.

Any change to a Package custom resource triggers an install, upgrade, configuration, or removal of that package. The package controller uses ECR or a private registry to get all resources, including bundles, Helm charts, and container images.

You may install the package controller after cluster creation to take advantage of curated package features.

Packages CLI

The Curated Packages CLI provides the user experience required to manage curated packages.

Through the CLI, a user can discover, create, delete, and upgrade curated packages on a cluster. These operations can be performed both during and after EKS Anywhere cluster creation.

The CLI provides both imperative and declarative mechanisms to manage curated packages. These packages are included as part of a package bundle provided by the EKS Anywhere team.

Whenever a user requests a package creation through the CLI (eksctl anywhere create package), a custom resource is created on the cluster, indicating that a new package needs to be installed. When a user executes a delete operation (eksctl anywhere delete package), the custom resource is removed from the cluster, indicating that the package needs to be uninstalled.

An upgrade through the CLI (eksctl anywhere upgrade packages) upgrades all packages to the latest release.

Please check out Install EKS Anywhere to install the eksctl anywhere CLI on your machine.

The create cluster page for each EKS Anywhere provider describes how to configure and install curated packages at cluster creation time.

Curated packages artifacts

There are three types of build artifacts for packages: the container images, the Helm charts, and the package bundle manifests. The container images, Helm charts, and bundle manifests for all of the packages are built and stored in the EKS Anywhere ECR repository. Each package may have multiple versions specified in the package bundle. The bundle references the Helm chart tag in the ECR repository, and the Helm chart references the container images for the package.

Installing packages on workload clusters

The package controller only runs on the management cluster. It determines which cluster to install your package on based on the namespace specified in the Package resource.

See an example package below:

```yaml
apiVersion: packages.eks.amazonaws.com/v1alpha1
kind: Package
metadata:
  name: my-hello-eks-anywhere
  namespace: eksa-packages-wk0
spec:
  config: |
    title: "My Hello"
  packageName: hello-eks-anywhere
  targetNamespace: default
```

By specifying metadata.namespace: eksa-packages-wk0, the package controller will install the resource on workload cluster wk0.

The pattern for these namespaces is always eksa-packages-<cluster-name>.

By specifying spec.targetNamespace: default, the package controller will install the hello-eks-anywhere package helm chart in the default namespace in cluster wk0.
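The namespace convention above can be scripted when you manage packages for several workload clusters. The following is a minimal sketch, assuming a bash shell; `package_namespace` and `render_package` are hypothetical helper names, not part of the EKS Anywhere CLI. It prints a Package manifest whose `metadata.namespace` follows the `eksa-packages-<cluster-name>` pattern and omits the optional `spec.config` field.

```bash
#!/usr/bin/env bash
# Hypothetical helpers (not part of eksctl anywhere) for targeting a workload cluster.

# Print the namespace the package controller uses for a cluster,
# following the eksa-packages-<cluster-name> pattern.
package_namespace() {
  printf 'eksa-packages-%s\n' "$1"
}

# Print a Package manifest.
# Arguments: <Package name> <packageName from the bundle> <cluster name> <target namespace>
render_package() {
  local name="$1" package_name="$2" cluster_name="$3" target_namespace="$4"
  cat <<EOF
apiVersion: packages.eks.amazonaws.com/v1alpha1
kind: Package
metadata:
  name: ${name}
  namespace: $(package_namespace "$cluster_name")
spec:
  packageName: ${package_name}
  targetNamespace: ${target_namespace}
EOF
}

# Example: hello-eks-anywhere for workload cluster wk0, installed into its default namespace.
render_package my-hello-eks-anywhere hello-eks-anywhere wk0 default
```

Because the package controller runs only on the management cluster, the output would be applied with the management cluster's kubeconfig, for example by piping it to `kubectl apply -f -`.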

2 - Prerequisites

Prerequisites for using curated packages

Prerequisites

Before installing any curated packages for EKS Anywhere, do the following:

- Check that the cluster Kubernetes version is v1.21 or above. For example, you could run `kubectl get cluster <cluster-name> -o yaml | grep -i kubernetesVersion`.

- Check that the version of `eksctl anywhere` is v0.11.0 or above with the `eksctl anywhere version` command.

- It is recommended that the package controller be installed only on the management cluster.

- Check whether the package controller exists:

  ```bash
  kubectl get pods -n eksa-packages | grep "eks-anywhere-packages"
  ```

  If the returned result is empty, you need to install the package controller.

- If the package controller is not installed, install it.

  Note: This command is temporarily provided to ease integration with curated packages and will be deprecated in the future.

  ```bash
  eksctl anywhere install packagecontroller -f $CLUSTER_NAME.yaml
  ```
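The two version checks in the list above can be scripted. This is a minimal sketch, assuming GNU coreutils `sort -V` (version sort) is available; `version_at_least` is a hypothetical helper name. The commented example reads the Kubernetes version from the EKS Anywhere Cluster object, using the fully qualified resource name to avoid confusion with the Cluster API `Cluster` resource.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if version $1 is greater than or equal to version $2.
# A leading "v" is ignored, so "v0.11.0" and "0.11.0" compare the same.
# Relies on `sort -V` (version sort) from GNU coreutils.
version_at_least() {
  local have="${1#v}" want="${2#v}"
  # The smaller version sorts first; if that is the minimum, "have" is new enough.
  [ "$(printf '%s\n' "$want" "$have" | sort -V | head -n 1)" = "$want" ]
}

# Example against a cluster (requires kubectl and a kubeconfig):
#   k8s_version="$(kubectl get clusters.anywhere.eks.amazonaws.com "$CLUSTER_NAME" \
#     -o jsonpath='{.spec.kubernetesVersion}')"
#   version_at_least "$k8s_version" 1.21 || echo "Kubernetes ${k8s_version} is older than v1.21"
version_at_least 1.27 1.21 && echo "1.27 meets the v1.21 minimum"
```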

To request a free trial, talk to your Amazon representative or connect with one here.

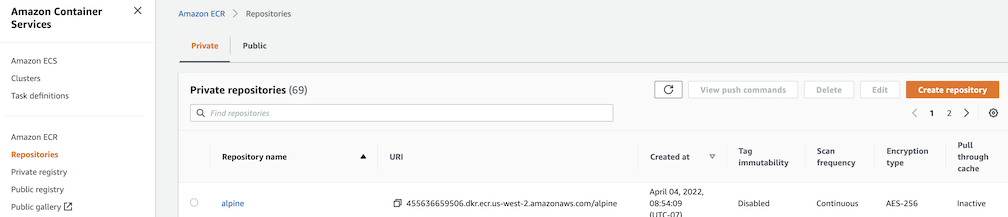

Identify AWS account ID for ECR packages registry

The AWS account ID for ECR packages registry depends on the EKS Anywhere Enterprise Subscription.

- For EKS Anywhere Enterprise Subscriptions purchased through the AWS console or APIs, the AWS account ID for the ECR packages registry depends on the AWS Region in which the subscription was purchased. See the following table for a mapping of AWS Regions to ECR packages registries.


| AWS Region | Packages Registry Account |
|---|---|
| us-west-2 | 346438352937 |
| us-west-1 | 440460740297 |
| us-east-1 | 331113665574 |
| us-east-2 | 297090588151 |
| ap-east-1 | 804323328300 |
| ap-northeast-1 | 143143237519 |
| ap-northeast-2 | 447311122189 |
| ap-south-1 | 357015164304 |
| ap-south-2 | 388483641499 |
| ap-southeast-1 | 654894141437 |
| ap-southeast-2 | 299286866837 |
| ap-southeast-3 | 703305448174 |
| ap-southeast-4 | 106475008004 |
| af-south-1 | 783635962247 |
| ca-central-1 | 064352486547 |
| eu-central-1 | 364992945014 |
| eu-central-2 | 551422459769 |
| eu-north-1 | 826441621985 |
| eu-south-1 | 787863792200 |
| eu-west-1 | 090204409458 |
| eu-west-2 | 371148654473 |
| eu-west-3 | 282646289008 |
| il-central-1 | 131750224677 |
| me-central-1 | 454241080883 |
| me-south-1 | 158698011868 |
| sa-east-1 | 517745584577 |

- For EKS Anywhere Curated Packages trials, or EKS Anywhere Enterprise Subscriptions purchased before October 2023, the AWS account ID for the ECR packages registry is 783794618700. This account supports pulling images from the following AWS Regions:


| AWS Region |
|---|
| us-east-2 |
| us-east-1 |
| us-west-1 |
| us-west-2 |
| ap-northeast-3 |
| ap-northeast-2 |
| ap-southeast-1 |
| ap-southeast-2 |
| ap-northeast-1 |
| ca-central-1 |
| eu-central-1 |
| eu-west-1 |
| eu-west-2 |
| eu-west-3 |
| eu-north-1 |
| sa-east-1 |

After identifying the AWS account ID, export it for later reference. For example:

```bash
export ECR_PACKAGES_ACCOUNT=346438352937
```
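The lookup can also be scripted. The sketch below, assuming a POSIX-compatible shell, copies the Region-to-account mapping from the first table above into a hypothetical `ecr_packages_account` function and builds the registry host name used by the `docker login` and `docker pull` commands on this page. It covers only subscriptions purchased through the AWS console or APIs; trials and subscriptions purchased before October 2023 use account 783794618700 instead.

```bash
#!/usr/bin/env bash
# Hypothetical helpers; the Region-to-account mapping is copied from the table above.

# Print the ECR packages registry account for an AWS Region.
# Returns 1 (and prints nothing) for a Region that is not in the table.
ecr_packages_account() {
  case "$1" in
    us-west-2)      echo 346438352937 ;;
    us-west-1)      echo 440460740297 ;;
    us-east-1)      echo 331113665574 ;;
    us-east-2)      echo 297090588151 ;;
    ap-east-1)      echo 804323328300 ;;
    ap-northeast-1) echo 143143237519 ;;
    ap-northeast-2) echo 447311122189 ;;
    ap-south-1)     echo 357015164304 ;;
    ap-south-2)     echo 388483641499 ;;
    ap-southeast-1) echo 654894141437 ;;
    ap-southeast-2) echo 299286866837 ;;
    ap-southeast-3) echo 703305448174 ;;
    ap-southeast-4) echo 106475008004 ;;
    af-south-1)     echo 783635962247 ;;
    ca-central-1)   echo 064352486547 ;;
    eu-central-1)   echo 364992945014 ;;
    eu-central-2)   echo 551422459769 ;;
    eu-north-1)     echo 826441621985 ;;
    eu-south-1)     echo 787863792200 ;;
    eu-west-1)      echo 090204409458 ;;
    eu-west-2)      echo 371148654473 ;;
    eu-west-3)      echo 282646289008 ;;
    il-central-1)   echo 131750224677 ;;
    me-central-1)   echo 454241080883 ;;
    me-south-1)     echo 158698011868 ;;
    sa-east-1)      echo 517745584577 ;;
    *)              return 1 ;;
  esac
}

# Print the registry host for an account ID and Region.
ecr_packages_registry() {
  printf '%s.dkr.ecr.%s.amazonaws.com\n' "$1" "$2"
}

# Example: a subscription purchased in us-west-2.
region=us-west-2
if account="$(ecr_packages_account "$region")"; then
  ecr_packages_registry "$account" "$region"   # 346438352937.dkr.ecr.us-west-2.amazonaws.com
else
  echo "no packages registry listed for ${region}" >&2
fi
```

To follow the rest of this page, you could then run `export ECR_PACKAGES_ACCOUNT="$(ecr_packages_account "$EKSA_AWS_REGION")"`.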

Set up authentication to use curated packages

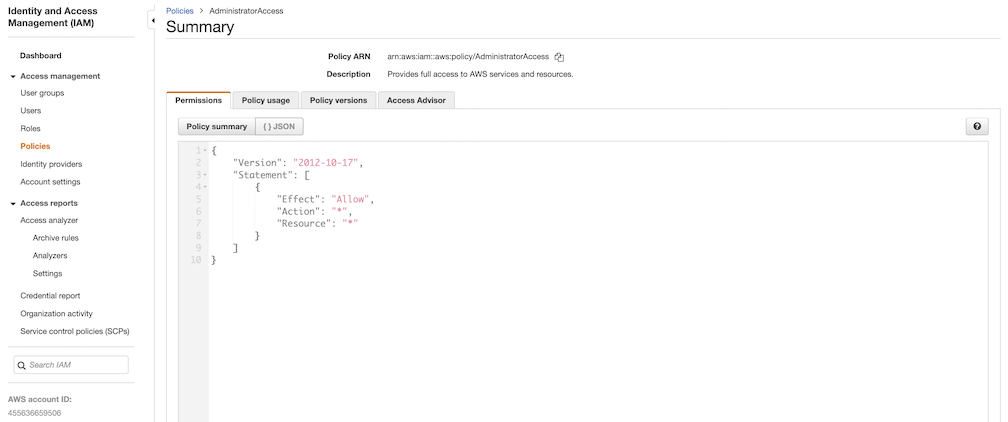

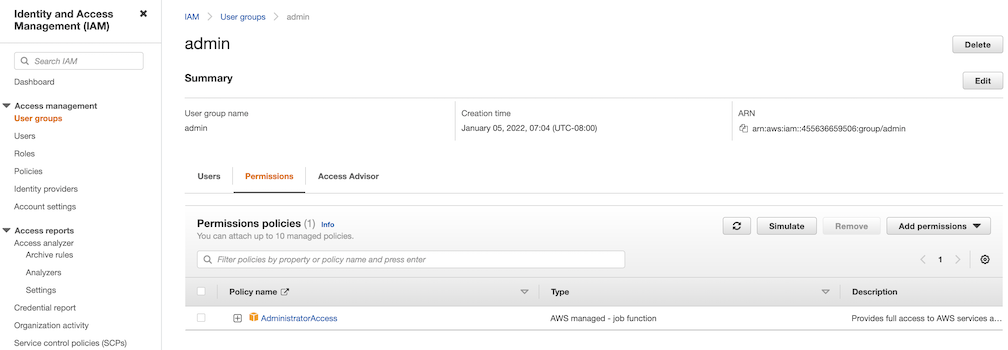

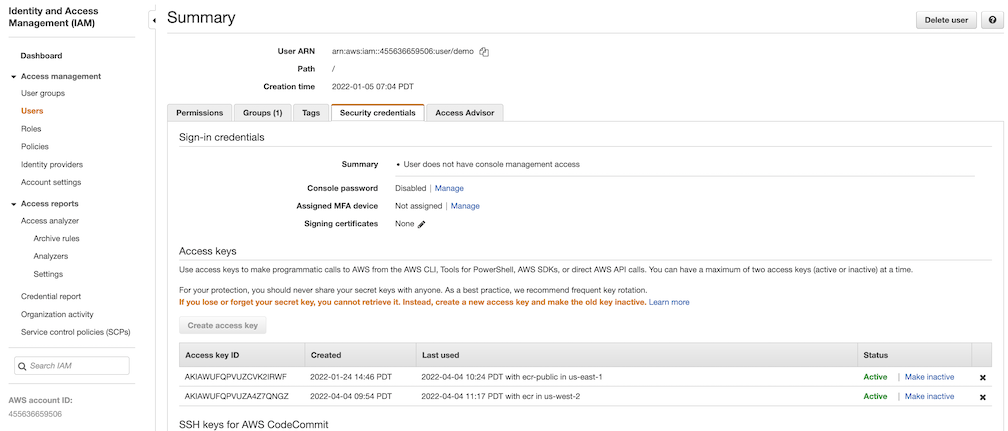

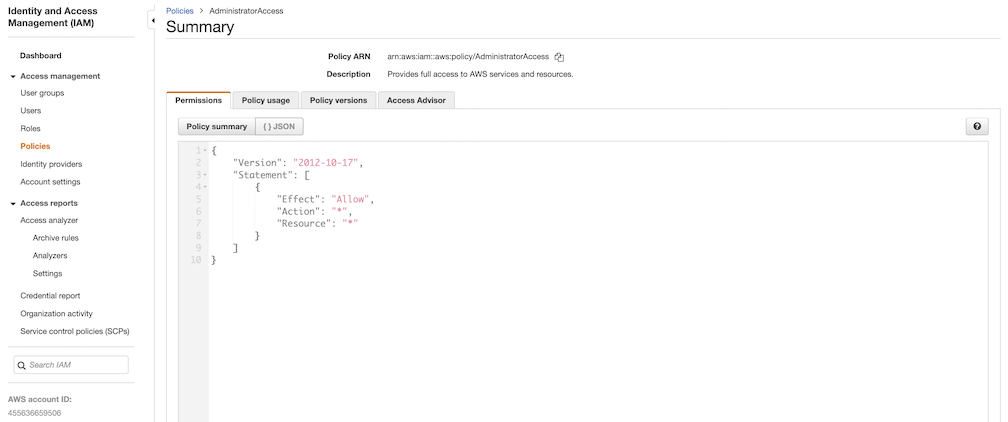

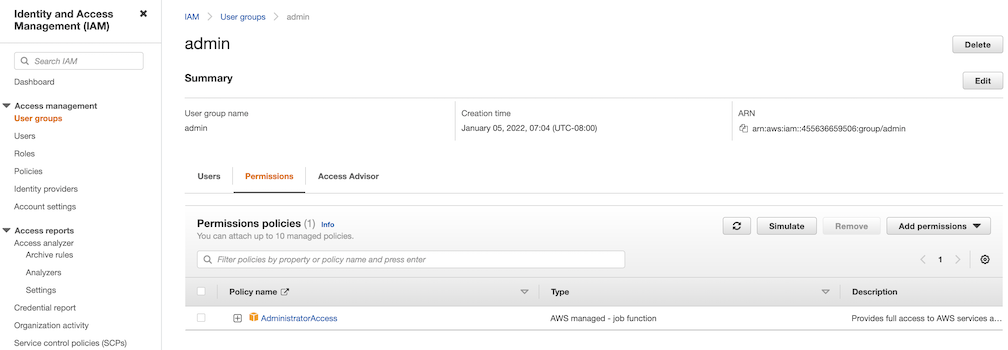

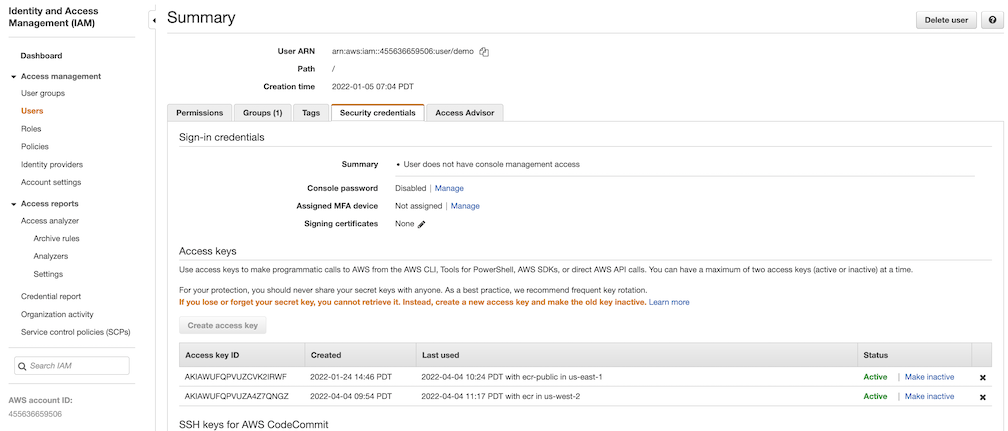

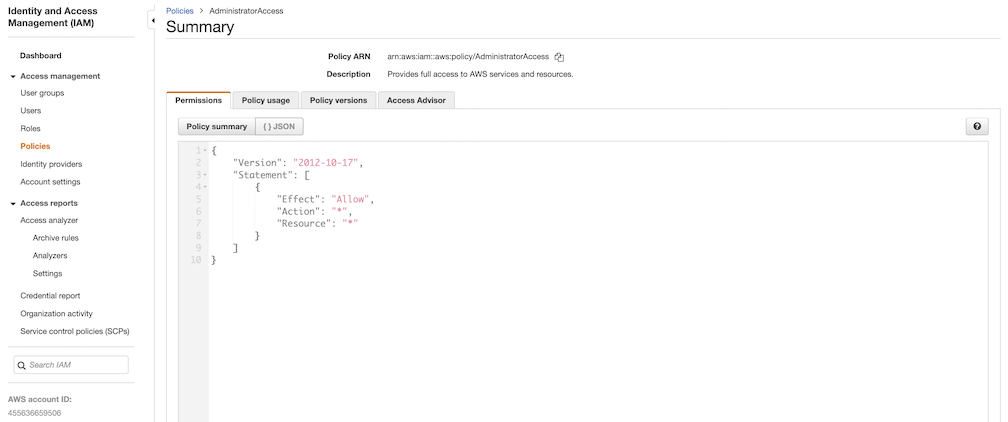

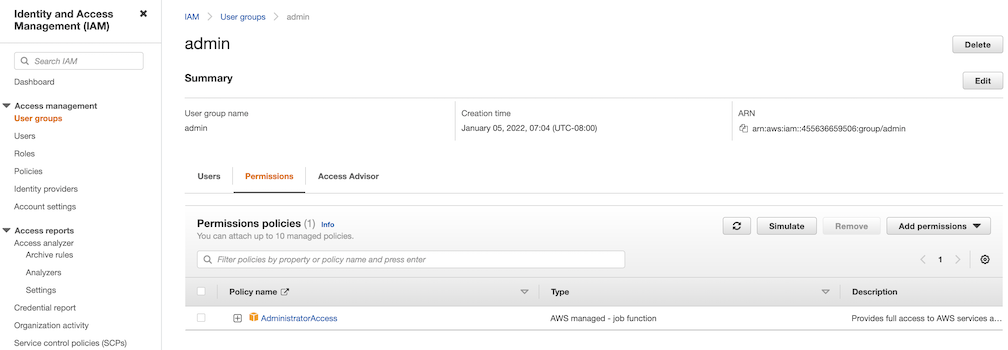

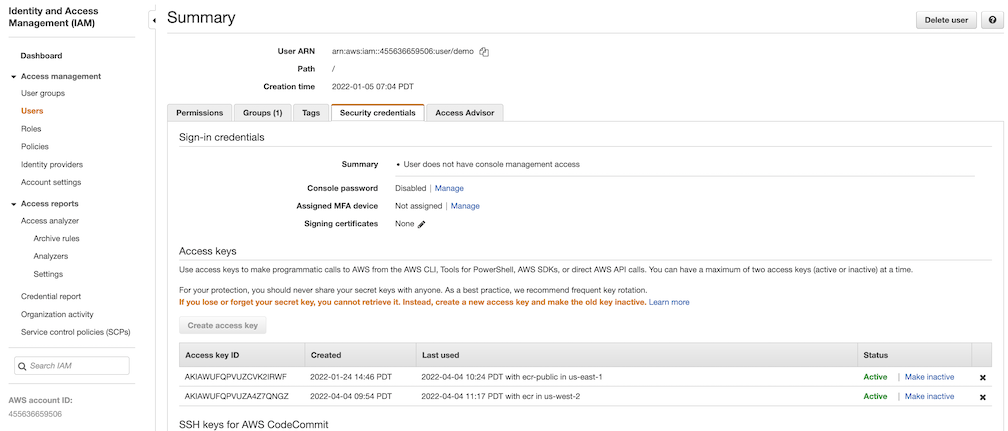

When you have been notified that your account has been given access to curated packages, create an IAM user in your account with a policy that allows only ECR read access to the Curated Packages repository, similar to this:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "ECRRead",
      "Effect": "Allow",
      "Action": [
        "ecr:DescribeImageScanFindings",
        "ecr:GetDownloadUrlForLayer",
        "ecr:DescribeRegistry",
        "ecr:DescribePullThroughCacheRules",
        "ecr:DescribeImageReplicationStatus",
        "ecr:ListTagsForResource",
        "ecr:ListImages",
        "ecr:BatchGetImage",
        "ecr:DescribeImages",
        "ecr:DescribeRepositories",
        "ecr:BatchCheckLayerAvailability"
      ],
      "Resource": "arn:aws:ecr:*:<ECR_PACKAGES_ACCOUNT>:repository/*"
    },
    {
      "Sid": "ECRLogin",
      "Effect": "Allow",
      "Action": [
        "ecr:GetAuthorizationToken"
      ],
      "Resource": "*"
    }
  ]
}
```
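The `<ECR_PACKAGES_ACCOUNT>` placeholder in the policy must be replaced with the account ID identified earlier. As a minimal sketch, assuming a POSIX shell, a hypothetical `render_packages_policy` function can print the policy with the account filled in. The commented AWS CLI commands show one way to create the user; the policy and user names in them are examples only.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the read-only ECR policy with the packages account filled in.
render_packages_policy() {
  local account="$1"
  cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "ECRRead",
      "Effect": "Allow",
      "Action": [
        "ecr:DescribeImageScanFindings",
        "ecr:GetDownloadUrlForLayer",
        "ecr:DescribeRegistry",
        "ecr:DescribePullThroughCacheRules",
        "ecr:DescribeImageReplicationStatus",
        "ecr:ListTagsForResource",
        "ecr:ListImages",
        "ecr:BatchGetImage",
        "ecr:DescribeImages",
        "ecr:DescribeRepositories",
        "ecr:BatchCheckLayerAvailability"
      ],
      "Resource": "arn:aws:ecr:*:${account}:repository/*"
    },
    {
      "Sid": "ECRLogin",
      "Effect": "Allow",
      "Action": ["ecr:GetAuthorizationToken"],
      "Resource": "*"
    }
  ]
}
EOF
}

# One way to create the IAM user with the AWS CLI (policy and user names are examples):
#   render_packages_policy "$ECR_PACKAGES_ACCOUNT" > curated-packages-ecr-read.json
#   aws iam create-policy --policy-name curated-packages-ecr-read \
#     --policy-document file://curated-packages-ecr-read.json
#   aws iam create-user --user-name curated-packages
#   aws iam attach-user-policy --user-name curated-packages \
#     --policy-arn "arn:aws:iam::<YOUR_ACCOUNT_ID>:policy/curated-packages-ecr-read"
#   aws iam create-access-key --user-name curated-packages
render_packages_policy 346438352937
```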

Note: Set EKSA_AWS_REGION to the corresponding AWS Region before cluster creation to choose which Region to pull from.

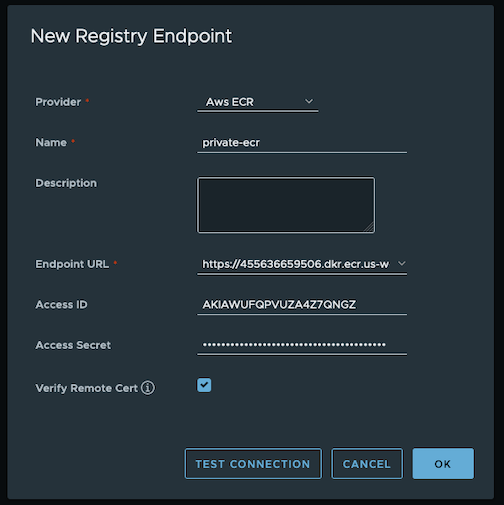

Create credentials for this user, then set and export the following environment variables:

```bash
export EKSA_AWS_ACCESS_KEY_ID="your_access_id"
export EKSA_AWS_SECRET_ACCESS_KEY="your_secret_key"
export EKSA_AWS_REGION="aws_region"
```

Make sure you are authenticated with the AWS CLI:

```bash
export AWS_ACCESS_KEY_ID="your_access_id"
export AWS_SECRET_ACCESS_KEY="your_secret_key"
aws sts get-caller-identity
```

Login to docker

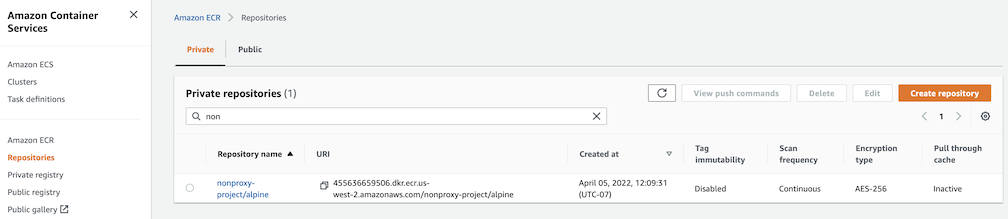

aws ecr get-login-password --region us-west-2 | docker login --username AWS --password-stdin $ECR_PACKAGES_ACCOUNT.dkr.ecr.$EKSA_AWS_REGION.amazonaws.com

Verify you can pull an image:

```bash
docker pull $ECR_PACKAGES_ACCOUNT.dkr.ecr.$EKSA_AWS_REGION.amazonaws.com/emissary-ingress/emissary:v3.9.1-828e7d186ded23e54f6bd95a5ce1319150f7e325
```

If the image downloads successfully, it worked!
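The login and pull check can be wrapped in a small function that first confirms the required variables are exported. This is a sketch assuming a POSIX shell with `printenv` available; `require_env` and `verify_packages_pull` are hypothetical names, and the function pulls the same image tag as the `docker pull` command above.

```bash
#!/usr/bin/env bash
# Hypothetical helpers wrapping the docker login and docker pull commands above.

# Return 1 if any named variable is not exported or is empty.
# printenv only sees exported variables, which is also what eksctl and docker see.
require_env() {
  local name missing=0
  for name in "$@"; do
    if [ -z "$(printenv "$name")" ]; then
      echo "missing environment variable: ${name}" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Log in to the packages registry and pull the test image.
# Requires the aws and docker CLIs.
verify_packages_pull() {
  local registry
  require_env ECR_PACKAGES_ACCOUNT EKSA_AWS_REGION || return 1
  registry="${ECR_PACKAGES_ACCOUNT}.dkr.ecr.${EKSA_AWS_REGION}.amazonaws.com"
  aws ecr get-login-password --region "$EKSA_AWS_REGION" |
    docker login --username AWS --password-stdin "$registry" || return 1
  docker pull "${registry}/emissary-ingress/emissary:v3.9.1-828e7d186ded23e54f6bd95a5ce1319150f7e325"
}

# Usage:
#   verify_packages_pull && echo "curated packages registry is reachable"
```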

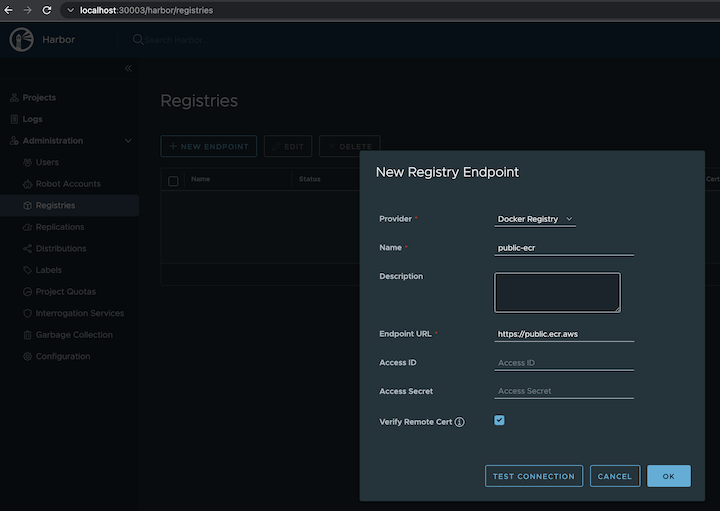

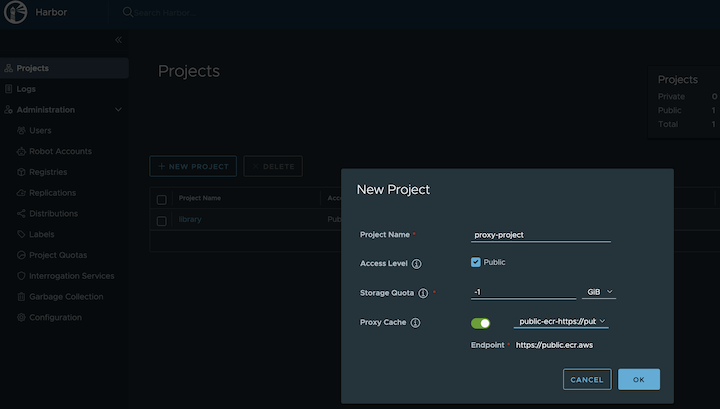

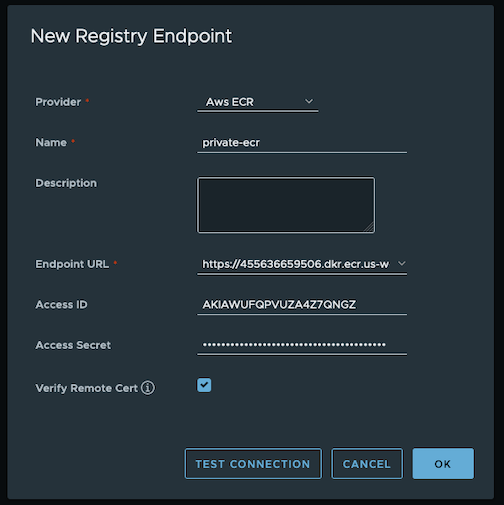

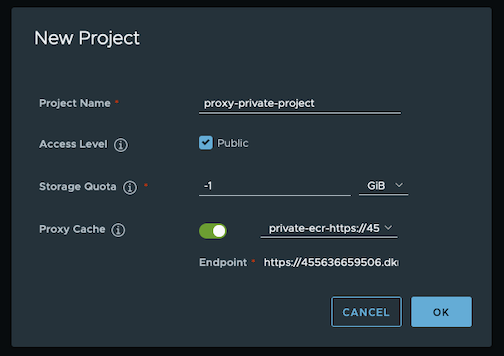

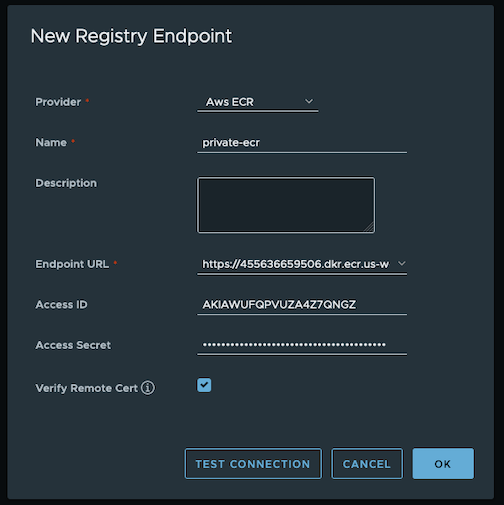

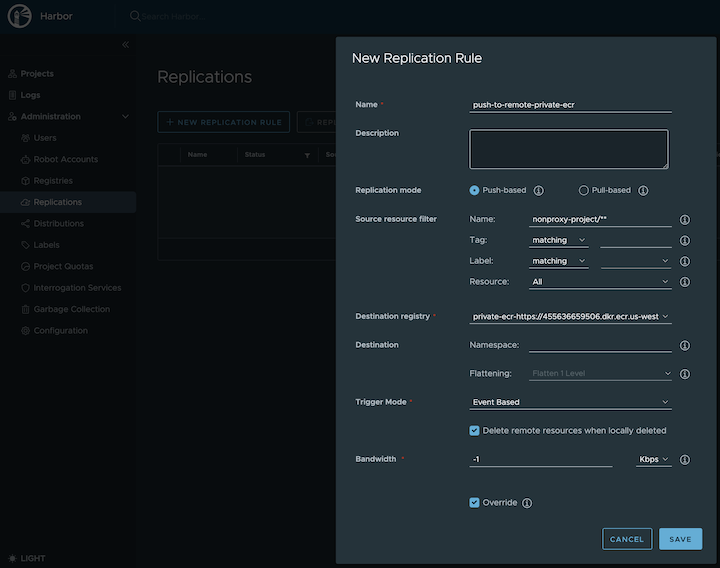

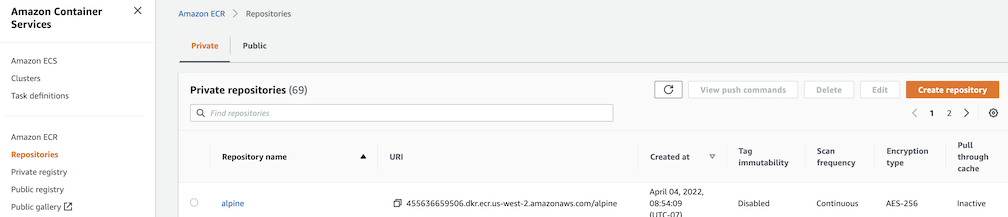

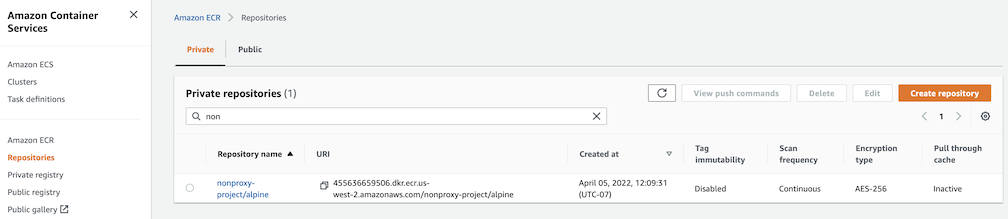

Prepare for using curated packages for airgapped environments

If you are running in an airgapped environment and you set up a local registry mirror, you can copy curated packages from Amazon ECR to your local registry mirror with the following command.

Set $BUNDLE_RELEASE_YAML_PATH to the eks-anywhere-downloads/bundle-release.yaml location where you unpacked the tarball from the eksctl anywhere download artifacts command. The $REGISTRY_MIRROR_CERT_PATH and $REGISTRY_MIRROR_URL values must be the same as the registryMirrorConfiguration in your EKS Anywhere cluster specification.

```bash
eksctl anywhere copy packages \
  --bundle ${BUNDLE_RELEASE_YAML_PATH} \
  --dst-cert ${REGISTRY_MIRROR_CERT_PATH} \
  ${REGISTRY_MIRROR_URL}
```

Once the curated packages images are in your local registry mirror, you must configure the curated packages controller to use your local registry mirror after cluster creation. Configure the defaultImageRegistry and defaultRegistry settings for the PackageBundleController to point to your local registry mirror by applying a YAML definition similar to the one below to your standalone or management cluster. You can modify the existing PackageBundleController; you do not need to deploy a new one. See the Packages configuration documentation for more information.

```yaml
apiVersion: packages.eks.amazonaws.com/v1alpha1
kind: PackageBundleController
metadata:
  name: eksa-packages-bundle-controller
  namespace: eksa-packages
spec:
  defaultImageRegistry: ${REGISTRY_MIRROR_URL}/curated-packages
  defaultRegistry: ${REGISTRY_MIRROR_URL}/eks-anywhere
```
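kubectl does not expand shell variables such as `${REGISTRY_MIRROR_URL}` inside a manifest, so the value has to be substituted before the definition is applied. A minimal sketch, assuming a POSIX shell and a hypothetical `render_mirror_pbc` helper: it keeps the resource name and namespace from the example above and uses the `packages.eks.amazonaws.com/v1alpha1` apiVersion listed for PackageBundleController in the API reference.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the PackageBundleController manifest with the mirror URL expanded.
render_mirror_pbc() {
  local mirror_url="${1%/}"   # drop a trailing slash, if any
  cat <<EOF
apiVersion: packages.eks.amazonaws.com/v1alpha1
kind: PackageBundleController
metadata:
  name: eksa-packages-bundle-controller
  namespace: eksa-packages
spec:
  defaultImageRegistry: ${mirror_url}/curated-packages
  defaultRegistry: ${mirror_url}/eks-anywhere
EOF
}

# Example with a placeholder mirror address:
render_mirror_pbc "registry.example.internal:443"

# Apply it to the standalone or management cluster, for example:
#   render_mirror_pbc "$REGISTRY_MIRROR_URL" | kubectl apply -f -
```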

Discover curated packages

You can get a list of the available packages from the command line:

```bash
export CLUSTER_NAME=<your-cluster-name>
export KUBECONFIG=${PWD}/${CLUSTER_NAME}/${CLUSTER_NAME}-eks-a-cluster.kubeconfig
eksctl anywhere list packages --kube-version 1.27
```

Example command output:

```text
Package             Version(s)
-------             ----------
hello-eks-anywhere  0.1.2-a6847010915747a9fc8a412b233a2b1ee608ae76
adot                0.25.0-c26690f90d38811dbb0e3dad5aea77d1efa52c7b
cert-manager        1.9.1-dc0c845b5f71bea6869efccd3ca3f2dd11b5c95f
cluster-autoscaler  9.21.0-1.23-5516c0368ff74d14c328d61fe374da9787ecf437
harbor              2.5.1-ee7e5a6898b6c35668a1c5789aa0d654fad6c913
metallb             0.13.7-758df43f8c5a3c2ac693365d06e7b0feba87efd5
metallb-crds        0.13.7-758df43f8c5a3c2ac693365d06e7b0feba87efd5
metrics-server      0.6.1-eks-1-23-6-c94ed410f56421659f554f13b4af7a877da72bc1
emissary            3.3.0-cbf71de34d8bb5a72083f497d599da63e8b3837b
emissary-crds       3.3.0-cbf71de34d8bb5a72083f497d599da63e8b3837b
prometheus          2.41.0-b53c8be243a6cc3ac2553de24ab9f726d9b851ca
```
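When scripting against this output, the version column for one package can be extracted with awk. This is a sketch under an assumption: that the output keeps the layout shown above (a header row, a dashed separator, then one `<package> <version(s)>` row per package). `package_version` is a hypothetical helper name, and it prints everything after the package name, so multiple listed versions are kept as-is.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `eksctl anywhere list packages` output on stdin and print the
# Version(s) column for one package. Exits 1 if the package is not listed.
# Assumes the two-column layout shown above (header, dashed separator, then package rows).
package_version() {
  awk -v pkg="$1" '
    NR > 2 && $1 == pkg {
      sub(/^[ \t]*[^ \t]+[ \t]+/, "")   # drop the package-name column
      print
      found = 1
    }
    END { exit !found }'
}

# Example against a captured excerpt of the output above:
printf '%s\n' \
  'Package             Version(s)' \
  '-------             ----------' \
  'harbor              2.5.1-ee7e5a6898b6c35668a1c5789aa0d654fad6c913' \
  'metallb             0.13.7-758df43f8c5a3c2ac693365d06e7b0feba87efd5' |
  package_version harbor

# Live usage (requires a kubeconfig for the cluster):
#   eksctl anywhere list packages --kube-version 1.27 | package_version harbor
```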

Generate curated packages configuration

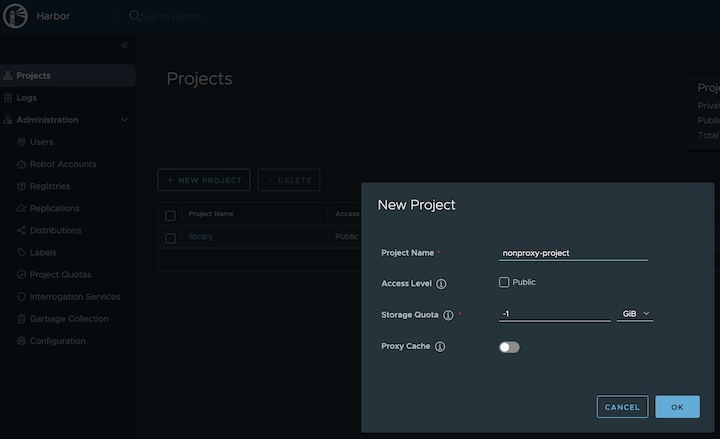

The example shows how to install the harbor package from the curated package list

.

```bash
export CLUSTER_NAME=<your-cluster-name>
eksctl anywhere generate package harbor --cluster ${CLUSTER_NAME} --kube-version 1.27 > harbor-spec.yaml
```

3 - Packages configuration

Full EKS Anywhere configuration reference for curated packages

This is a generic template, with detailed field descriptions below for reference. To generate your own package configuration, follow the instructions in the Package Management section and modify the result using the descriptions below.

```yaml
apiVersion: packages.eks.amazonaws.com/v1alpha1
kind: PackageBundleController
metadata:
  name: eksa-packages-bundle-controller
  namespace: eksa-packages
spec:
  activeBundle: v1-21-83
  defaultImageRegistry: 783794618700.dkr.ecr.us-west-2.amazonaws.com
  defaultRegistry: public.ecr.aws/eks-anywhere
  privateRegistry: ""
  upgradeCheckInterval: 24h0m0s
---
apiVersion: packages.eks.amazonaws.com/v1alpha1
kind: PackageBundle
metadata:
  name: package-bundle
  namespace: eksa-packages
spec:
  packages:
    - name: hello-eks-anywhere
      source:
        repository: hello-eks-anywhere
        versions:
          - digest: sha256:c31242a2f94a58017409df163debc01430de65ded6bdfc5496c29d6a6cbc0d94
            images:
              - digest: sha256:26e3f2f9aa546fee833218ece3fe7561971fd905cef40f685fd1b5b09c6fb71d
                repository: hello-eks-anywhere
            name: 0.1.1-083e68edbbc62ca0228a5669e89e4d3da99ff73b
            schema: H4sIAJc5EW...
---
apiVersion: packages.eks.amazonaws.com/v1alpha1
kind: Package
metadata:
  name: my-hello-eks-anywhere
  namespace: eksa-packages
spec:
  config: |
    title: "My Hello"
  packageName: hello-eks-anywhere
  targetNamespace: eksa-packages
```
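According to the API reference below, the `schema` field of each package version is a base64-encoded, gzipped JSON schema, which is why the value above begins with `H4sI` (the base64 form of the gzip header bytes). The following minimal sketch, assuming the `base64` and `gzip` command-line tools are installed, decodes such a value; `decode_package_schema` is a hypothetical helper name, and the elided value in the template above cannot be decoded as-is.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read a base64-encoded, gzipped JSON schema on stdin and print the JSON.
# Uses `base64 -d` (GNU coreutils spelling) and `gzip -dc`.
decode_package_schema() {
  base64 -d | gzip -dc
}

# Round-trip example with a tiny schema instead of a real bundle value:
printf '%s' '{"type":"object"}' | gzip -c | base64 | decode_package_schema
echo

# Against a live cluster (requires kubectl; the bundle and package names are examples):
#   kubectl get packagebundle v1-21-83 -n eksa-packages \
#     -o jsonpath='{.spec.packages[?(@.name=="hello-eks-anywhere")].source.versions[0].schema}' |
#     decode_package_schema
```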

API Reference

Packages: packages.eks.amazonaws.com/v1alpha1

Resource Types: PackageBundleController, PackageBundle, Package

PackageBundleController

PackageBundleController is the Schema for the packagebundlecontroller API.

| Name | Type | Description | Required |
|---|---|---|---|
| apiVersion | string | packages.eks.amazonaws.com/v1alpha1 | true |
| kind | string | PackageBundleController | true |
| metadata | object | Refer to the Kubernetes API documentation for the fields of the `metadata` field. | true |
| spec | object | PackageBundleControllerSpec defines the desired state of PackageBundleController. | false |
| status | object | PackageBundleControllerStatus defines the observed state of PackageBundleController. | false |

PackageBundleController.spec

PackageBundleControllerSpec defines the desired state of PackageBundleController.

| Name | Type | Description | Required |
|---|---|---|---|
| activeBundle | string | ActiveBundle is the name of the bundle from which packages should be sourced. | false |
| bundleRepository | string | Repository portion of an OCI address to the bundle. Default: eks-anywhere-packages-bundles | false |
| createNamespace | boolean | Allow target namespace creation by the controller. Default: false | false |
| defaultImageRegistry | string | DefaultImageRegistry for pulling images. Default: 783794618700.dkr.ecr.us-west-2.amazonaws.com | false |
| defaultRegistry | string | DefaultRegistry for pulling Helm charts and the bundle. Default: public.ecr.aws/eks-anywhere | false |
| logLevel | integer | LogLevel controls the verbosity of logging in the controller. Format: int32 | false |
| privateRegistry | string | PrivateRegistry is the registry being used for all images, charts, and bundles. | false |
| upgradeCheckInterval | string | UpgradeCheckInterval is the time between upgrade checks. The format is that of Go's `time.ParseDuration`. Default: 24h | false |
| upgradeCheckShortInterval | string | UpgradeCheckShortInterval is the time between upgrade checks if there is a problem. The format is that of Go's `time.ParseDuration`. Default: 1h | false |
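Because `upgradeCheckInterval` and `upgradeCheckShortInterval` use the syntax of Go's `time.ParseDuration` (for example `24h`, `1h30m`, or the `24h0m0s` shown in the template above), a value can be sanity-checked before it is applied. The sketch below is a simplified regular-expression approximation, not Go's parser: it accepts an optional sign followed by one or more number-and-unit pairs using the ASCII units `ns`, `us`, `ms`, `s`, `m`, and `h` (Go additionally accepts `µs`), plus a bare `0`. `is_go_duration` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Hypothetical, simplified check that a value looks like a Go time.ParseDuration string.
# Accepts an optional sign, then either "0" or one or more <number><unit> pairs,
# where a number needs at least one digit ("1", "1.5", "1.", ".5").
is_go_duration() {
  printf '%s\n' "$1" |
    grep -Eq '^[+-]?(0|(([0-9]+(\.[0-9]*)?|\.[0-9]+)(ns|us|ms|s|m|h))+)$'
}

# Example: check a value before setting spec.upgradeCheckInterval.
for value in 24h0m0s 1h30m 1d; do
  if is_go_duration "$value"; then
    echo "${value}: valid"
  else
    echo "${value}: not a Go duration"
  fi
done
```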

PackageBundleController.status

PackageBundleControllerStatus defines the observed state of PackageBundleController.

| Name | Type | Description | Required |
|---|---|---|---|
| detail | string | Detail of the state. | false |
| spec | object | Spec previous settings | false |
| state | enum | State of the bundle controller. Enum: ignored, active, disconnected, upgrade available | false |

PackageBundleController.status.spec

Spec previous settings

| Name | Type | Description | Required |
|---|---|---|---|
| activeBundle | string | ActiveBundle is the name of the bundle from which packages should be sourced. | false |
| bundleRepository | string | Repository portion of an OCI address to the bundle. Default: eks-anywhere-packages-bundles | false |
| createNamespace | boolean | Allow target namespace creation by the controller. Default: false | false |
| defaultImageRegistry | string | DefaultImageRegistry for pulling images. Default: 783794618700.dkr.ecr.us-west-2.amazonaws.com | false |
| defaultRegistry | string | DefaultRegistry for pulling Helm charts and the bundle. Default: public.ecr.aws/eks-anywhere | false |
| logLevel | integer | LogLevel controls the verbosity of logging in the controller. Format: int32 | false |
| privateRegistry | string | PrivateRegistry is the registry being used for all images, charts, and bundles. | false |
| upgradeCheckInterval | string | UpgradeCheckInterval is the time between upgrade checks. The format is that of Go's `time.ParseDuration`. Default: 24h | false |
| upgradeCheckShortInterval | string | UpgradeCheckShortInterval is the time between upgrade checks if there is a problem. The format is that of Go's `time.ParseDuration`. Default: 1h | false |

PackageBundle

PackageBundle is the Schema for the packagebundle API.

| Name | Type | Description | Required |
|---|---|---|---|
| apiVersion | string | packages.eks.amazonaws.com/v1alpha1 | true |
| kind | string | PackageBundle | true |
| metadata | object | Refer to the Kubernetes API documentation for the fields of the `metadata` field. | true |
| spec | object | PackageBundleSpec defines the desired state of PackageBundle. | false |
| status | object | PackageBundleStatus defines the observed state of PackageBundle. | false |

PackageBundle.spec

PackageBundleSpec defines the desired state of PackageBundle.

| Name | Type | Description | Required |
|---|---|---|---|
| packages | []object | Packages supported by this bundle. | true |
| minControllerVersion | string | Minimum required packages controller version. | false |

PackageBundle.spec.packages[index]

BundlePackage specifies a package within a bundle.

| Name | Type | Description | Required |
|---|---|---|---|
| source | object | Source location for the package (typically a Helm chart). | true |
| name | string | Name of the package. | false |
| workloadonly | boolean | WorkloadOnly specifies if the package should be installed only on the workload cluster. | false |

PackageBundle.spec.packages[index].source

Source location for the package (typically a Helm chart).

| Name | Type | Description | Required |
|---|---|---|---|
| repository | string | Repository within the Registry where the package is found. | true |
| versions | []object | Versions of the package supported by this bundle. | true |
| registry | string | Registry in which the package is found. | false |

PackageBundle.spec.packages[index].source.versions[index]

SourceVersion describes a version of a package within a repository.

| Name | Type | Description | Required |
|---|---|---|---|
| digest | string | Digest is a checksum value identifying the version of the package and its contents. | true |
| name | string | Name is a human-friendly description of the version, e.g. "v1.0". | true |
| dependencies | []string | Dependencies to be installed before the package. | false |
| images | []object | Images is a list of images used by this version of the package. | false |
| schema | string | Schema is a base64 encoded, gzipped JSON schema used to validate package configurations. | false |

PackageBundle.spec.packages[index].source.versions[index].images[index]

VersionImages is an image used by a version of a package.

| Name | Type | Description | Required |
|---|---|---|---|
| digest | string | Digest is a checksum value identifying the version of the package and its contents. | true |
| repository | string | Repository within the Registry where the package is found. | true |

PackageBundle.status

PackageBundleStatus defines the observed state of PackageBundle.

| Name | Type | Description | Required |
|---|---|---|---|
| state | enum | PackageBundleStateEnum defines the observed state of PackageBundle. Enum: available, ignored, invalid, controller upgrade required | true |
| spec | object | PackageBundleSpec defines the desired state of PackageBundle. | false |

PackageBundle.status.spec

PackageBundleSpec defines the desired state of PackageBundle.

| Name | Type | Description | Required |
|---|---|---|---|
| packages | []object | Packages supported by this bundle. | true |
| minControllerVersion | string | Minimum required packages controller version. | false |

PackageBundle.status.spec.packages[index]

BundlePackage specifies a package within a bundle.

| Name | Type | Description | Required |
|---|---|---|---|
| source | object | Source location for the package (typically a Helm chart). | true |
| name | string | Name of the package. | false |
| workloadonly | boolean | WorkloadOnly specifies if the package should be installed only on the workload cluster. | false |

PackageBundle.status.spec.packages[index].source

Source location for the package (typically a Helm chart).

| Name | Type | Description | Required |
|---|---|---|---|
| repository | string | Repository within the Registry where the package is found. | true |
| versions | []object | Versions of the package supported by this bundle. | true |
| registry | string | Registry in which the package is found. | false |

PackageBundle.status.spec.packages[index].source.versions[index]

SourceVersion describes a version of a package within a repository.

| Name | Type | Description | Required |
|---|---|---|---|
| digest | string | Digest is a checksum value identifying the version of the package and its contents. | true |
| name | string | Name is a human-friendly description of the version, e.g. "v1.0". | true |
| dependencies | []string | Dependencies to be installed before the package. | false |
| images | []object | Images is a list of images used by this version of the package. | false |
| schema | string | Schema is a base64 encoded, gzipped JSON schema used to validate package configurations. | false |

PackageBundle.status.spec.packages[index].source.versions[index].images[index]

VersionImages is an image used by a version of a package.

| Name | Type | Description | Required |
|---|---|---|---|
| digest | string | Digest is a checksum value identifying the version of the package and its contents. | true |
| repository | string | Repository within the Registry where the package is found. | true |

Package

Package is the Schema for the package API.

| Name | Type | Description | Required |
|---|---|---|---|
| apiVersion | string | packages.eks.amazonaws.com/v1alpha1 | true |
| kind | string | Package | true |
| metadata | object | Refer to the Kubernetes API documentation for the fields of the `metadata` field. | true |
| spec | object | PackageSpec defines the desired state of a package. | false |
| status | object | PackageStatus defines the observed state of Package. | false |

Package.spec

PackageSpec defines the desired state of a package.

| Name | Type | Description | Required |
|---|---|---|---|
| packageName | string | PackageName is the name of the package as specified in the bundle. | true |
| config | string | Config for the package. | false |
| packageVersion | string | PackageVersion is a human-friendly version name or sha256 checksum for the package, as specified in the bundle. | false |
| targetNamespace | string | TargetNamespace defines where package resources will be deployed. | false |

Package.status

PackageStatus defines the observed state of Package.

| Name | Type | Description | Required |
|---|---|---|---|
| currentVersion | string | Version currently installed. | true |
| source | object | Source associated with the installation. | true |
| detail | string | Detail of the state. | false |
| spec | object | Spec previous settings | false |
| state | enum | State of the installation. Enum: initializing, installing, installing dependencies, installed, updating, uninstalling, unknown | false |
| targetVersion | string | Version to be installed. | false |
| upgradesAvailable | []object | UpgradesAvailable indicates upgraded versions in the bundle. | false |
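The `state` values above can be polled to wait until a package finishes installing. The following is a minimal sketch, assuming kubectl access to the management cluster; `wait_for_package` is a hypothetical helper that reads `.status.state` with a kubectl JSONPath query. kubectl releases whose `kubectl wait` supports `--for=jsonpath=...` can express a similar wait in a single command.

```bash
#!/usr/bin/env bash
# Hypothetical helper: poll a Package until status.state is "installed".
# Arguments: <package name> <namespace> [attempts, default 30] [seconds between attempts, default 10]
wait_for_package() {
  local name="$1" namespace="$2" attempts="${3:-30}" delay="${4:-10}" state i=1
  while [ "$i" -le "$attempts" ]; do
    state="$(kubectl get package "$name" -n "$namespace" -o jsonpath='{.status.state}')"
    if [ "$state" = "installed" ]; then
      echo "${name}: installed"
      return 0
    fi
    echo "${name}: ${state:-unknown} (attempt ${i}/${attempts})" >&2
    i=$((i + 1))
    # Sleep only if another attempt follows.
    if [ "$i" -le "$attempts" ]; then
      sleep "$delay"
    fi
  done
  return 1
}

# Example (requires kubectl and the management cluster kubeconfig):
#   wait_for_package my-hello-eks-anywhere eksa-packages-wk0
```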

Package.status.source

Source associated with the installation.

| Name | Type | Description | Required |
|---|---|---|---|
| digest | string | Digest is a checksum value identifying the version of the package and its contents. | true |
| registry | string | Registry in which the package is found. | true |
| repository | string | Repository within the Registry where the package is found. | true |
| version | string | Versions of the package supported. | true |

Package.status.spec

Spec previous settings

| Name | Type | Description | Required |
|---|---|---|---|
| packageName | string | PackageName is the name of the package as specified in the bundle. | true |
| config | string | Config for the package. | false |
| packageVersion | string | PackageVersion is a human-friendly version name or sha256 checksum for the package, as specified in the bundle. | false |
| targetNamespace | string | TargetNamespace defines where package resources will be deployed. | false |

Package.status.upgradesAvailable[index]

PackageAvailableUpgrade details the package’s available upgrade versions.

| Name | Type | Description | Required |
|---|---|---|---|
| tag | string | Tag is a specific version number or sha256 checksum for the package upgrade. | true |
| version | string | Version is a human-friendly version name for the package upgrade. | true |

4 - Managing the package controller

Installing the package controller

Important

Installing the package controller creates a PackageBundleController resource for each cluster, which allows each cluster to activate a different package bundle version. Ideally, you should never delete this resource: doing so loses that information, and upon reinstallation the latest bundle is selected. However, you can always go back to the previous bundle version. For more information, see Managing package bundles.

The package controller is typically installed during cluster creation, but may be disabled intentionally in your cluster.yaml by setting spec.packages.disable to true.

If you created a cluster without the package controller or if the package controller was not properly configured, you may need to manually install it.

- Enable the package controller in your cluster.yaml, if it was previously disabled:

  ```yaml
  apiVersion: anywhere.eks.amazonaws.com/v1alpha1
  kind: Cluster
  metadata:
    name: mgmt
  spec:
    packages:
      disable: false
  ```

- Make sure you are authenticated with the AWS CLI. Use the credentials you set up for packages; these credentials should have limited capabilities:

  ```bash
  export AWS_ACCESS_KEY_ID="your_access_id"
  export AWS_SECRET_ACCESS_KEY="your_secret_key"
  export EKSA_AWS_ACCESS_KEY_ID="your_access_id"
  export EKSA_AWS_SECRET_ACCESS_KEY="your_secret_key"
  ```

- Verify your credentials are working:

  ```bash
  aws sts get-caller-identity
  ```

- Authenticate Docker to the private Amazon ECR registry with your AWS credentials; authentication is required to pull images from it. See the prerequisites to identify the AWS account that hosts the EKS Anywhere packages artifacts.

  ```bash
  aws ecr get-login-password --region $EKSA_AWS_REGION | docker login --username AWS --password-stdin $ECR_PACKAGES_ACCOUNT.dkr.ecr.$EKSA_AWS_REGION.amazonaws.com
  ```

- Verify you can pull an image from the packages registry:

  ```bash
  docker pull $ECR_PACKAGES_ACCOUNT.dkr.ecr.$EKSA_AWS_REGION.amazonaws.com/emissary-ingress/emissary:v3.9.1-828e7d186ded23e54f6bd95a5ce1319150f7e325
  ```

  If the image downloads successfully, it worked!

- Now, install the package controller using the EKS Anywhere Packages CLI:

  ```bash
  eksctl anywhere install packagecontroller -f cluster.yaml
  ```

  The package controller should now be installed!

- Use kubectl to check that the eks-anywhere-packages pod is running in your management cluster:

  ```bash
  kubectl get pods -n eksa-packages
  ```

  ```text
  NAME                                     READY   STATUS    RESTARTS   AGE
  eks-anywhere-packages-55bc54467c-jfhgp   1/1     Running   0          21s
  ```

Updating the package credentials

You may need to create or update your credentials, which you can do with commands like the following. Set the environment variables to the proper values before running them.

```bash
kubectl delete secret -n eksa-packages aws-secret
kubectl create secret -n eksa-packages generic aws-secret \
  --from-literal=AWS_ACCESS_KEY_ID=${EKSA_AWS_ACCESS_KEY_ID} \
  --from-literal=AWS_SECRET_ACCESS_KEY=${EKSA_AWS_SECRET_ACCESS_KEY} \
  --from-literal=REGION=${EKSA_AWS_REGION}
```
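As an alternative sketch, assuming kubectl 1.18 or later (for `--dry-run=client`), the delete-then-create sequence can be replaced by a single create-or-update that renders the secret locally and applies it, so the cluster is never left without `aws-secret` between the two commands. `update_packages_secret` is a hypothetical helper name; the secret name, namespace, and keys are the same as above.

```bash
#!/usr/bin/env bash
# Hypothetical create-or-update variant of the two commands above.
# Requires kubectl 1.18+ (for --dry-run=client) and the EKSA_AWS_* variables exported.
update_packages_secret() {
  local name
  for name in EKSA_AWS_ACCESS_KEY_ID EKSA_AWS_SECRET_ACCESS_KEY EKSA_AWS_REGION; do
    if [ -z "$(printenv "$name")" ]; then
      echo "missing environment variable: ${name}" >&2
      return 1
    fi
  done
  # Render the Secret locally, then apply it: this creates aws-secret if it is
  # missing and updates it in place if it already exists.
  kubectl create secret -n eksa-packages generic aws-secret \
    --from-literal=AWS_ACCESS_KEY_ID="${EKSA_AWS_ACCESS_KEY_ID}" \
    --from-literal=AWS_SECRET_ACCESS_KEY="${EKSA_AWS_SECRET_ACCESS_KEY}" \
    --from-literal=REGION="${EKSA_AWS_REGION}" \
    --dry-run=client -o yaml |
    kubectl apply -f -
}

# Usage (against the management cluster):
#   update_packages_secret
```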

Upgrade the packages controller

Starting with EKS Anywhere v0.15.0 (packages controller v0.3.9+), the eks-anywhere-packages controller is supported as a self-managed package. The package controller now upgrades automatically according to the version specified in the management cluster’s selected package bundle.

For earlier package controller versions, you must run manual steps to upgrade.

Important

This operation may change your cluster’s selected package bundle to the latest version. However, you can always go back to the previous bundle version. For more information, see Managing package bundles.

To manually upgrade the package controller, do the following:

- Ensure the namespace will be kept:

  ```bash
  kubectl annotate namespaces eksa-packages helm.sh/resource-policy=keep
  ```

- Uninstall the eks-anywhere-packages Helm release:

  ```bash
  helm uninstall -n eksa-packages eks-anywhere-packages
  ```

- Remove the secret named aws-secret (credentials are needed again when installing the new version):

  ```bash
  kubectl delete secret -n eksa-packages aws-secret
  ```

- Install the new version on your management cluster using the latest eksctl-anywhere binary:

  ```bash
  eksctl anywhere install packagecontroller -f eksa-mgmt-cluster.yaml
  ```
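The four manual steps above can be run as one function that stops at the first failure. This is a minimal sketch, assuming kubectl, helm, and the latest eksctl-anywhere binary are on your PATH and that the `EKSA_AWS_*` credentials are exported for the install step; `upgrade_package_controller` is a hypothetical name. It adds kubectl's standard `--overwrite` flag to the annotate command so that a re-run does not fail when the annotation is already set.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper for the manual upgrade steps above; stops at the first failure.
# Argument: path to the management cluster spec, e.g. eksa-mgmt-cluster.yaml
upgrade_package_controller() {
  local cluster_file="$1"
  # 1. Keep the eksa-packages namespace when the Helm release is uninstalled.
  #    --overwrite lets a re-run succeed if the annotation already exists.
  kubectl annotate namespaces eksa-packages helm.sh/resource-policy=keep --overwrite || return 1
  # 2. Remove the existing controller release.
  helm uninstall -n eksa-packages eks-anywhere-packages || return 1
  # 3. Delete the old credentials secret.
  kubectl delete secret -n eksa-packages aws-secret || return 1
  # 4. Install the new controller with the latest eksctl-anywhere binary.
  eksctl anywhere install packagecontroller -f "$cluster_file"
}

# Usage (export EKSA_AWS_ACCESS_KEY_ID, EKSA_AWS_SECRET_ACCESS_KEY and EKSA_AWS_REGION first):
#   upgrade_package_controller eksa-mgmt-cluster.yaml
```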

5 - Packages regional ECR migration

Migrating EKS Anywhere Curated Packages to latest regional ECR repositories

When you purchase an EKS Anywhere Enterprise Subscription through the Amazon EKS console or APIs, the AWS account that purchased the subscription is automatically granted access to EKS Anywhere Curated Packages in the AWS Region where the subscription is created. If you received trial access to EKS Anywhere Curated Packages, or if you have an EKS Anywhere Enterprise Subscription that was created before October 2023, you need to migrate your EKS Anywhere Curated Packages configuration to use the latest ECR regional repositories. This process causes all curated packages installed on the cluster to roll out and be redeployed from the latest ECR regional repositories.

Packages registry account for each AWS Region:

| AWS Region | Packages Registry Account |
| --- | --- |
| us-west-2 | 346438352937 |
| us-west-1 | 440460740297 |
| us-east-1 | 331113665574 |
| us-east-2 | 297090588151 |
| ap-east-1 | 804323328300 |
| ap-northeast-1 | 143143237519 |
| ap-northeast-2 | 447311122189 |
| ap-south-1 | 357015164304 |
| ap-south-2 | 388483641499 |
| ap-southeast-1 | 654894141437 |
| ap-southeast-2 | 299286866837 |
| ap-southeast-3 | 703305448174 |
| ap-southeast-4 | 106475008004 |
| af-south-1 | 783635962247 |
| ca-central-1 | 064352486547 |
| eu-central-1 | 364992945014 |
| eu-central-2 | 551422459769 |
| eu-north-1 | 826441621985 |
| eu-south-1 | 787863792200 |
| eu-west-1 | 090204409458 |
| eu-west-2 | 371148654473 |
| eu-west-3 | 282646289008 |
| il-central-1 | 131750224677 |
| me-central-1 | 454241080883 |
| me-south-1 | 158698011868 |
| sa-east-1 | 517745584577 |
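The migration steps need the full registry host name rather than only the account ID. The following minimal Python sketch builds that host name from the table above. It assumes the host follows the pattern <account>.dkr.ecr.<region>.amazonaws.com, which matches the us-west-2 example given in the migration steps. The names PACKAGES_REGISTRY_ACCOUNTS and packages_registry are hypothetical.

```python
# Packages registry account per AWS Region, copied from the table above.
# Account IDs are kept as strings so leading zeros (for example 064352486547) survive.
PACKAGES_REGISTRY_ACCOUNTS = {
    "us-west-2": "346438352937",
    "us-west-1": "440460740297",
    "us-east-1": "331113665574",
    "us-east-2": "297090588151",
    "ap-east-1": "804323328300",
    "ap-northeast-1": "143143237519",
    "ap-northeast-2": "447311122189",
    "ap-south-1": "357015164304",
    "ap-south-2": "388483641499",
    "ap-southeast-1": "654894141437",
    "ap-southeast-2": "299286866837",
    "ap-southeast-3": "703305448174",
    "ap-southeast-4": "106475008004",
    "af-south-1": "783635962247",
    "ca-central-1": "064352486547",
    "eu-central-1": "364992945014",
    "eu-central-2": "551422459769",
    "eu-north-1": "826441621985",
    "eu-south-1": "787863792200",
    "eu-west-1": "090204409458",
    "eu-west-2": "371148654473",
    "eu-west-3": "282646289008",
    "il-central-1": "131750224677",
    "me-central-1": "454241080883",
    "me-south-1": "158698011868",
    "sa-east-1": "517745584577",
}


def packages_registry(region: str) -> str:
    """Return the regional ECR packages registry host for an AWS Region."""
    try:
        account = PACKAGES_REGISTRY_ACCOUNTS[region]
    except KeyError:
        raise ValueError(f"no curated packages registry listed for region {region!r}") from None
    return f"{account}.dkr.ecr.{region}.amazonaws.com"


if __name__ == "__main__":
    print(packages_registry("us-west-2"))
```

The account IDs are stored as strings because storing them as integers would silently drop the leading zero for ca-central-1 and eu-west-1.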

Steps for Migration

- Ensure you have an active EKS Anywhere Enterprise Subscription. For more information, refer to Purchase subscriptions.

- If the AWS account that created the EKS Anywhere Enterprise Subscription through the Amazon EKS console or APIs differs from the account of the AWS IAM user credentials used for curated packages on your existing cluster, update the aws-secret object on the cluster with the new credentials. Refer to Updating the package credentials.

- Edit the ecr-credential-provider-package package on the cluster and update matchImages with the ECR package registry for the AWS Region where you created your subscription, for example 346438352937.dkr.ecr.us-west-2.amazonaws.com for us-west-2. Refer to the packages registry table above for the mapping of AWS Regions to ECR package registries.

kubectl edit package ecr-credential-provider-package -n eksa-packages-<cluster name>

This causes the ecr-credential-provider-package pods to roll out, and the kubelet is configured to use AWS credentials when pulling images from the new regional ECR packages registry.

- Edit the PackageBundleController object on the cluster and set defaultImageRegistry and defaultRegistry to point to the ECR package registry for the AWS Region where you created your subscription.

kubectl edit packagebundlecontroller <cluster name> -n eksa-packages

- Restart the eks-anywhere-packages controller deployment.

kubectl rollout restart deployment eks-anywhere-packages -n eksa-packages

This step causes the package controller to download a new package bundle onto the cluster and to mark the PackageBundleController as upgrade available. For example:

NAMESPACE NAME ACTIVEBUNDLE STATE DETAIL

eksa-packages my-cluster-name v1-28-160 upgrade available v1-28-274 available

- Edit the PackageBundleController object on the cluster and set the activeBundle field to the new bundle that is now available.

kubectl edit packagebundlecontroller <cluster name> -n eksa-packages

This step causes all the packages on the cluster to be reinstalled and their pods to be rolled out from the new registry.

- Edit the ecr-credential-provider-package package again, and this time set sourceRegistry to point to the ECR package registry for the AWS Region where you created your subscription.

kubectl edit package ecr-credential-provider-package -n eksa-packages-<cluster name>

This causes ecr-credential-provider-package to be reinstalled from the new registry.
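The interactive PackageBundleController edit in the migration steps can also be applied non-interactively with kubectl patch --type merge. The following minimal Python sketch builds that command. It assumes that defaultRegistry and defaultImageRegistry are fields under spec of the PackageBundleController. Confirm this on your cluster with kubectl get packagebundlecontroller <cluster name> -n eksa-packages -o yaml before using it. The function name and the example cluster name are hypothetical.

```python
import json
import shlex
from typing import List


def registry_patch_command(cluster_name: str, registry: str) -> List[str]:
    """Build a kubectl merge-patch command that points a PackageBundleController at a registry."""
    patch = {
        "spec": {
            # Assumed field locations; both are set to the same regional registry,
            # as the migration steps describe.
            "defaultRegistry": registry,
            "defaultImageRegistry": registry,
        }
    }
    return [
        "kubectl", "patch", "packagebundlecontroller", cluster_name,
        "-n", "eksa-packages",
        "--type", "merge",
        "-p", json.dumps(patch, separators=(",", ":")),
    ]


if __name__ == "__main__":
    cmd = registry_patch_command("my-cluster-name", "346438352937.dkr.ecr.us-west-2.amazonaws.com")
    print(shlex.join(cmd))
```

A merge patch changes only the fields it names, so the other PackageBundleController settings stay as they are. You still need to restart the controller and activate the new bundle afterwards, as described in the steps.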

6 - Managing package bundles

Getting new package bundles

Package bundle resources are created and managed in the management cluster, so first set up the KUBECONFIG environment variable for the management cluster.

export KUBECONFIG=mgmt/mgmt-eks-a-cluster.kubeconfig

The EKS Anywhere package controller periodically checks upstream for the latest package bundle and applies it to your management cluster, except in an air-gapped environment. In that case, you must get the package bundle manually from outside the air-gapped environment and apply it to your management cluster.

To view the available packagebundles in your cluster, run the following:

kubectl get packagebundles -n eksa-packages

NAMESPACE NAME STATE

eksa-packages v1-27-125 available

To get a package bundle manually, you can use oras to pull the package bundle (bundle.yaml) from the public.ecr.aws/eks-anywhere repository (see the ORAS CLI official documentation for more details):

oras pull public.ecr.aws/eks-anywhere/eks-anywhere-packages-bundles:v1-27-latest

Downloading 1ba8253d19f9 bundle.yaml

Downloaded 1ba8253d19f9 bundle.yaml

Pulled [registry] public.ecr.aws/eks-anywhere/eks-anywhere-packages-bundles:v1-27-latest

Use kubectl to apply the new package bundle to your cluster to make it available for use.

kubectl apply -f bundle.yaml

The package bundle should now be available for use in the management cluster.

kubectl get packagebundles -n eksa-packages

NAMESPACE NAME STATE

eksa-packages v1-27-125 available

eksa-packages v1-27-126 available

Activating a package bundle

The management cluster contains one packagebundlecontroller resource for each managed cluster, which allows each cluster to activate a different package bundle version. The active package bundle determines the versions of the packages installed on that cluster.

To view which package bundle is active for each cluster, use the kubectl command to list the packagebundlecontrollers objects in the management cluster.

kubectl get packagebundlecontrollers -A

NAMESPACE NAME ACTIVEBUNDLE STATE DETAIL

eksa-packages mgmt v1-27-125 active

eksa-packages w01 v1-27-125 active

To upgrade the active package bundle for a target cluster, edit that cluster’s packagebundlecontroller object in the management cluster and set the activeBundle field to the new bundle that is available.

kubectl edit packagebundlecontroller <cluster name> -n eksa-packages
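When several bundles are available, the newest one is not always the last one in a plain string sort. For example, v1-27-99 sorts after v1-27-125 as text. The following minimal Python sketch compares bundle names numerically and builds a kubectl merge patch that sets spec.activeBundle. It assumes that bundle names follow the pattern seen on this page (v<major>-<minor>-<build>, such as v1-27-125) and that activeBundle lives under spec, as the edit step implies. The function names are hypothetical.

```python
import json
import re
from typing import Iterable, List, Tuple

# Assumed naming pattern, inferred from examples such as v1-27-125 and v1-28-274.
BUNDLE_NAME = re.compile(r"v(\d+)-(\d+)-(\d+)")


def bundle_key(name: str) -> Tuple[int, int, int]:
    """Turn a bundle name such as 'v1-27-125' into a numerically comparable tuple."""
    match = BUNDLE_NAME.fullmatch(name)
    if match is None:
        raise ValueError(f"unexpected bundle name: {name!r}")
    major, minor, build = match.groups()
    return int(major), int(minor), int(build)


def newest_bundle(names: Iterable[str], kube_version: str) -> str:
    """Return the newest bundle for one Kubernetes version, e.g. kube_version='1-27'."""
    candidates = [n for n in names if n.startswith(f"v{kube_version}-")]
    if not candidates:
        raise ValueError(f"no bundles found for Kubernetes {kube_version}")
    return max(candidates, key=bundle_key)


def activate_command(cluster_name: str, bundle: str) -> List[str]:
    """Build a kubectl merge-patch command that sets spec.activeBundle."""
    patch = json.dumps({"spec": {"activeBundle": bundle}}, separators=(",", ":"))
    return ["kubectl", "patch", "packagebundlecontroller", cluster_name,
            "-n", "eksa-packages", "--type", "merge", "-p", patch]


if __name__ == "__main__":
    available = ["v1-27-125", "v1-27-126", "v1-27-99"]
    newest = newest_bundle(available, "1-27")
    print(newest)
    print(activate_command("w01", newest))
```

You would feed newest_bundle the names from kubectl get packagebundles -n eksa-packages. Filtering by Kubernetes version first is a cautious choice. It keeps the sketch from proposing a bundle built for a different Kubernetes minor version than the cluster runs.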

7 - Configuration best practices

Best practices with curated packages

Best Practice

Any supported EKS Anywhere curated package should be modified through package yaml files (with kind: Package) and applied through the command eksctl anywhere apply package -f packageFileName. Modifying objects outside of package yaml files may lead to unpredictable behaviors.

For automatic creation of the target namespace (targetNamespace), see the createNamespace field in PackageBundleController.spec.

8 - Packages

List of EKS Anywhere curated packages

Curated package list

9 - Curated packages troubleshooting

Troubleshooting specific to curated packages

General debugging

The main component of Curated Packages is the package controller. If its container is not running, or is not running correctly, packages will not be installed. In general, debug it like any other Kubernetes application. The first step is to check that the pod is running.

kubectl get pods -n eksa-packages

You should see the eks-anywhere-packages controller pod in the Running state and one or more eksa-auth-refresher pods in the Completed state.

NAME READY STATUS RESTARTS AGE

eks-anywhere-packages-69d7bb9dd9-9d47l 1/1 Running 0 14s

eksa-auth-refresher-w82nm 0/1 Completed 0 10s
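When this check is part of a script, the output above can be checked automatically. The following minimal Python sketch parses the default table printed by kubectl get pods and reports whether an eks-anywhere-packages pod is Running with all containers ready. It relies only on the column layout shown above (NAME READY STATUS ...). The function names are hypothetical.

```python
from typing import Dict, List


def parse_pods(output: str) -> List[Dict[str, str]]:
    """Parse the default `kubectl get pods` table into name/ready/status dicts."""
    rows = []
    for line in output.strip().splitlines()[1:]:  # skip the header row
        fields = line.split()
        if len(fields) < 3:
            continue  # ignore blank or truncated lines
        rows.append({"name": fields[0], "ready": fields[1], "status": fields[2]})
    return rows


def controller_healthy(output: str) -> bool:
    """True if an eks-anywhere-packages pod is Running with all containers ready."""
    for pod in parse_pods(output):
        if pod["name"].startswith("eks-anywhere-packages-") and pod["status"] == "Running":
            ready, total = pod["ready"].split("/")
            if ready == total:
                return True
    return False
```

Parsing human-readable output is fragile. For an unattended check, kubectl wait --for=condition=Available deployment/eks-anywhere-packages -n eksa-packages --timeout=120s waits on the Deployment's Available condition instead.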

The describe command might help to get more detail on why there is a problem:

kubectl describe pods -n eksa-packages

You can view the controller logs in the usual Kubernetes way:

kubectl logs deploy/eks-anywhere-packages -n eksa-packages -c controller

To get the general state of the package controller, run the following command:

kubectl get packages,packagebundles,packagebundlecontrollers -A

You should see an active packagebundlecontroller and an available bundle. The packagebundlecontroller indicates the active bundle. It may take a few minutes to download and activate the latest bundle. In this example, both packages are installed, the tlhowe cluster’s packagebundlecontroller is active, and the sammy cluster’s packagebundlecontroller reports that bundle v1-23-83 is available as an upgrade.

NAMESPACE NAME PACKAGE AGE STATE CURRENTVERSION TARGETVERSION DETAIL

eksa-packages-sammy package.packages.eks.amazonaws.com/my-hello hello-eks-anywhere 42h installed 0.1.1-bc7dc6bb874632972cd92a2bca429a846f7aa785 0.1.1-bc7dc6bb874632972cd92a2bca429a846f7aa785 (latest)

eksa-packages-tlhowe package.packages.eks.amazonaws.com/my-hello hello-eks-anywhere 44h installed 0.1.1-083e68edbbc62ca0228a5669e89e4d3da99ff73b 0.1.1-083e68edbbc62ca0228a5669e89e4d3da99ff73b (latest)

NAMESPACE NAME STATE

eksa-packages packagebundle.packages.eks.amazonaws.com/v1-21-83 available

eksa-packages packagebundle.packages.eks.amazonaws.com/v1-23-70 available

eksa-packages packagebundle.packages.eks.amazonaws.com/v1-23-81 available

eksa-packages packagebundle.packages.eks.amazonaws.com/v1-23-82 available

eksa-packages packagebundle.packages.eks.amazonaws.com/v1-23-83 available

NAMESPACE NAME ACTIVEBUNDLE STATE DETAIL

eksa-packages packagebundlecontroller.packages.eks.amazonaws.com/sammy v1-23-70 upgrade available v1-23-83 available

eksa-packages packagebundlecontroller.packages.eks.amazonaws.com/tlhowe v1-21-83 active active

Package controller not running

If you do not see the package controller pod or its resources, the package controller may not be installed:

No resources found in eksa-packages namespace.

Most likely, the cluster was created with an older version of the EKS Anywhere CLI. Curated packages became generally available with v0.11.0. Use the eksctl anywhere version command to verify that you are running a recent enough release, and use the eksctl anywhere install packagecontroller command to install the package controller on a cluster created with an older release.

Error: this command is currently not supported

Error: this command is currently not supported

Curated packages became generally available with version v0.11.0. Use the version command to make sure you are running version v0.11.0 or later:

eksctl anywhere version

Error: cert-manager is not present in the cluster

Error: curated packages cannot be installed as cert-manager is not present in the cluster

This is most likely caused by trying to install curated packages on a workload cluster with an eksctl anywhere version older than v0.12.0. To use packages on workload clusters, upgrade eksctl anywhere to v0.12.0 or later. The package manager then manages packages on the workload cluster remotely from the management cluster.

Package registry authentication

Error: ImagePullBackOff on Package

If a package fails to start with ImagePullBackOff:

NAME READY STATUS RESTARTS AGE

generated-harbor-jobservice-564d6fdc87 0/1 ImagePullBackOff 0 2d23h

If a package pod cannot pull images, your AWS credentials may not be set up correctly. Verify that your credentials work.

Make sure you are authenticated with the AWS CLI, using the credentials you set up for curated packages. These credentials should have limited capabilities:

export AWS_ACCESS_KEY_ID="<your-access-key-id>"

export AWS_SECRET_ACCESS_KEY="<your-secret-access-key>"

aws sts get-caller-identity

Log in to Docker:

aws ecr get-login-password --region us-west-2 | docker login --username AWS --password-stdin 783794618700.dkr.ecr.us-west-2.amazonaws.com

Verify that you can pull an image:

docker pull 783794618700.dkr.ecr.us-west-2.amazonaws.com/emissary-ingress/emissary:v3.5.1-bf70150bcdfe3a5383ec8ad9cd7eea801a0cb074

If the image downloads successfully, your credentials are working.

You may need to create or update the aws-secret credentials on the cluster, which you can do with commands like the following. Set the EKSA_AWS_ACCESS_KEY_ID, EKSA_AWS_SECRET_ACCESS_KEY, and EKSA_AWS_REGION environment variables to the proper values before running them.

kubectl delete secret -n eksa-packages aws-secret

kubectl create secret -n eksa-packages generic aws-secret --from-literal=AWS_ACCESS_KEY_ID=${EKSA_AWS_ACCESS_KEY_ID} --from-literal=AWS_SECRET_ACCESS_KEY=${EKSA_AWS_SECRET_ACCESS_KEY} --from-literal=REGION=${EKSA_AWS_REGION}
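It can help to see exactly what those commands store. The following minimal Python sketch builds the equivalent Secret manifest. It assumes that kubectl create secret generic with --from-literal produces an Opaque Secret whose values are base64-encoded under data, which is how Kubernetes Secrets store string values. The function name is hypothetical. The returned manifest contains your credentials in base64, which is encoding, not encryption, so do not log it.

```python
import base64
from typing import Dict


def aws_secret_manifest(access_key_id: str, secret_access_key: str, region: str) -> Dict:
    """Build the Secret that the kubectl create secret command above would create."""
    literals = {
        "AWS_ACCESS_KEY_ID": access_key_id,
        "AWS_SECRET_ACCESS_KEY": secret_access_key,
        "REGION": region,
    }
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "type": "Opaque",
        "metadata": {"name": "aws-secret", "namespace": "eksa-packages"},
        # Secret data values are base64-encoded strings.
        "data": {key: base64.b64encode(value.encode()).decode()
                 for key, value in literals.items()},
    }
```

Serializing the result with json.dumps and piping it to kubectl apply -f - updates an existing secret in place instead of deleting and recreating it. A shell-only equivalent is the same kubectl create secret command with --dry-run=client -o yaml, piped to kubectl apply -f -.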

Package on workload clusters

Starting with eksctl anywhere version v0.12.0, packages on workload clusters are managed remotely by the management cluster. When you run the following commands for a workload cluster, make sure your kubeconfig points to the management cluster that was used to create the workload cluster.

Package manager is not managing packages on workload cluster

If the package manager is not managing packages on a workload cluster, make sure the management cluster has various resources for the workload cluster:

kubectl get packages,packagebundles,packagebundlecontrollers -A

You should see a PackageBundleController named after the workload cluster, with its status set. There should also be a namespace for the workload cluster:

kubectl get ns | grep eksa-packages

Create a PackageBundleController for the workload cluster if it does not exist (here, billy is the cluster name):

cat <<! | kubectl apply -f -
apiVersion: packages.eks.amazonaws.com/v1alpha1
kind: PackageBundleController
metadata:
  name: billy
  namespace: eksa-packages
!

Workload cluster is disconnected

Cluster is disconnected:

NAMESPACE NAME ACTIVEBUNDLE STATE DETAIL

eksa-packages packagebundlecontroller.packages.eks.amazonaws.com/billy disconnected initializing target client: getting kubeconfig for cluster "billy": Secret "billy-kubeconfig" not found

In the example above, the billy-kubeconfig secret does not exist. This may mean that the management cluster is not managing the cluster, that the PackageBundleController name is wrong, or that the secret was deleted.

This can also happen if the management cluster cannot communicate with the workload cluster or if the workload cluster was deleted, although the detail message would be different.

Error: the server doesn’t have a resource type “packages”

All packages are remotely managed by the management cluster, and packages, packagebundles, and packagebundlecontrollers resources are all deployed on the management cluster. Please make sure the kubeconfig is pointing to the management cluster that was used to create the workload cluster while interacting with package-related resources.

This error means that a package command was run against a cluster that does not appear to be managed by the management cluster. To get a list of the clusters managed by the management cluster, run the following command:

eksctl anywhere get packagebundlecontroller

NAME ACTIVEBUNDLE STATE DETAIL

billy v1-21-87 active

There will be one packagebundlecontroller for each cluster that is being managed. The only valid cluster name in the above example is billy.

10 - ADOT Configuration

OpenTelemetry Collector provides a vendor-agnostic solution to receive, process and export telemetry data. It removes the need to run, operate, and maintain multiple agents/collectors. ADOT Collector is an AWS-supported distribution of the OpenTelemetry Collector.


Configuration options for ADOT

10.1 - ADOT with AMP and AMG

This tutorial demonstrates how to configure the ADOT package to scrape metrics from an EKS Anywhere cluster and send them to Amazon Managed Service for Prometheus (AMP) and Amazon Managed Grafana (AMG).

This tutorial walks through the following procedures:

Note

- We included Test sections below for critical steps to help you validate that each procedure completed properly. We recommend going through them in sequence as checkpoints of your progress.

- We recommend creating all resources in the us-west-2 region.

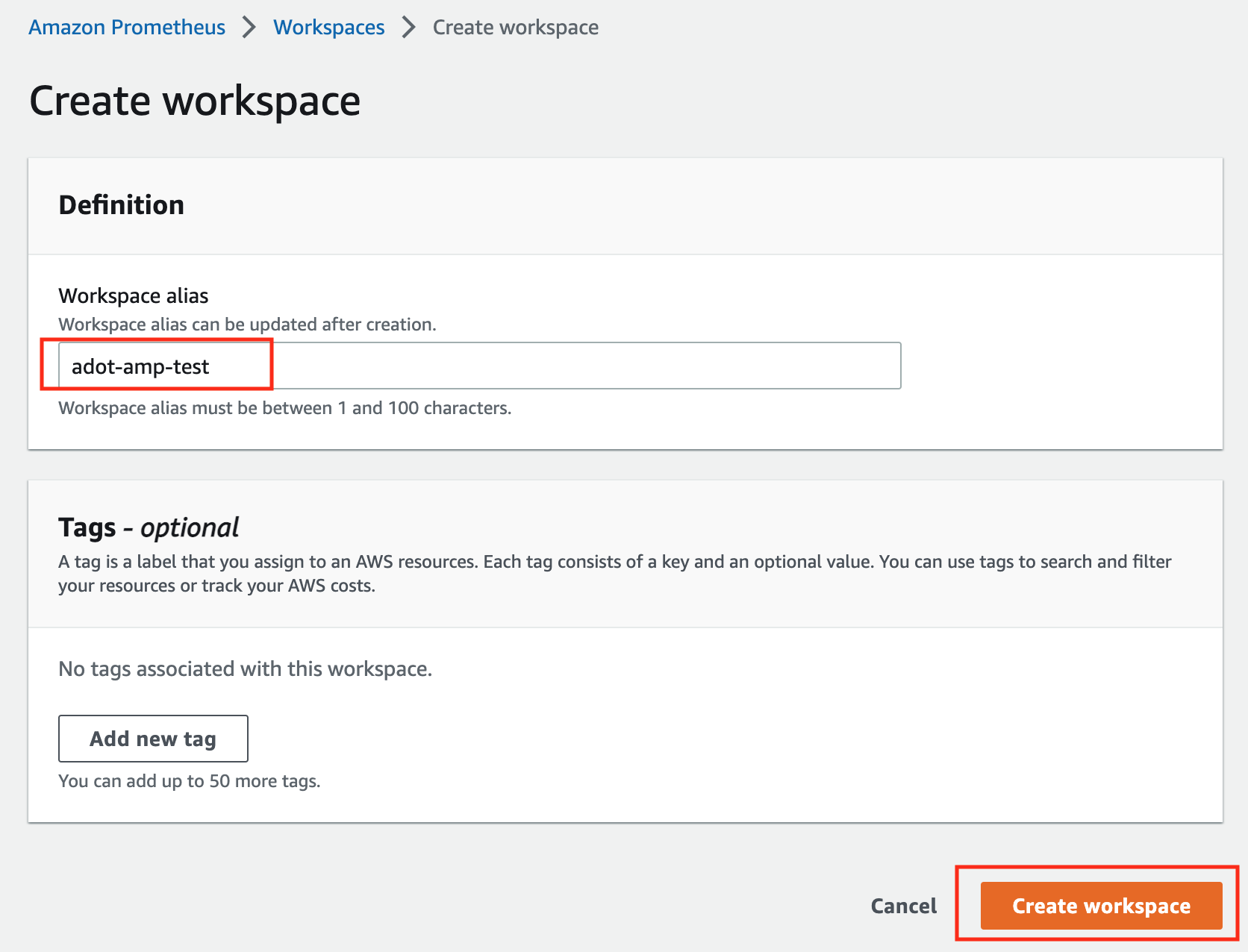

Create an AMP workspace

An AMP workspace is created to receive metrics from the ADOT package and to respond to query requests from AMG. Follow the steps below to complete the setup:

- Open the AMP console at https://console.aws.amazon.com/prometheus/.

- Choose region us-west-2 from the top right corner.

- Click Create to create a workspace.

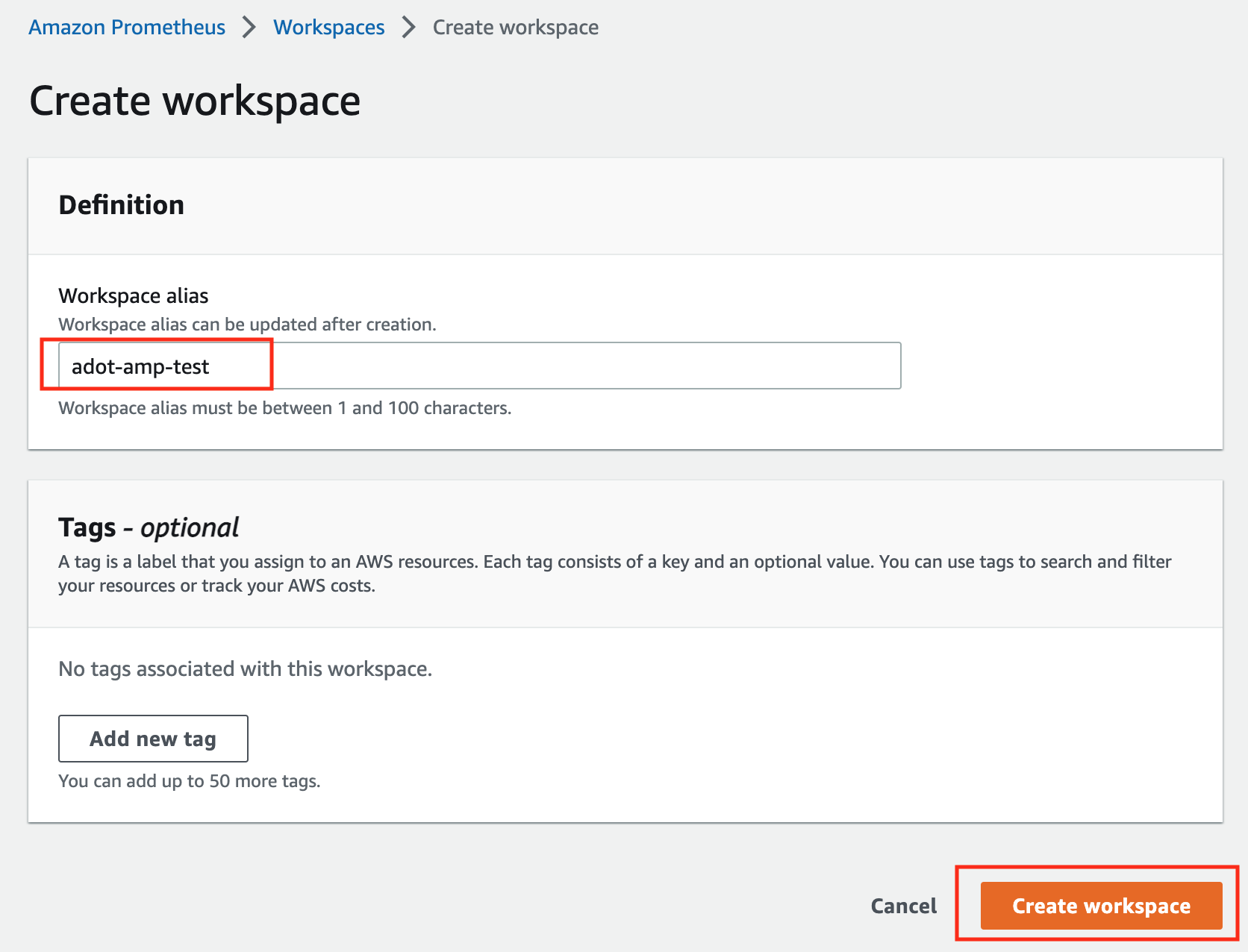

- Type a workspace alias (adot-amp-test, for example), and click Create workspace.

-

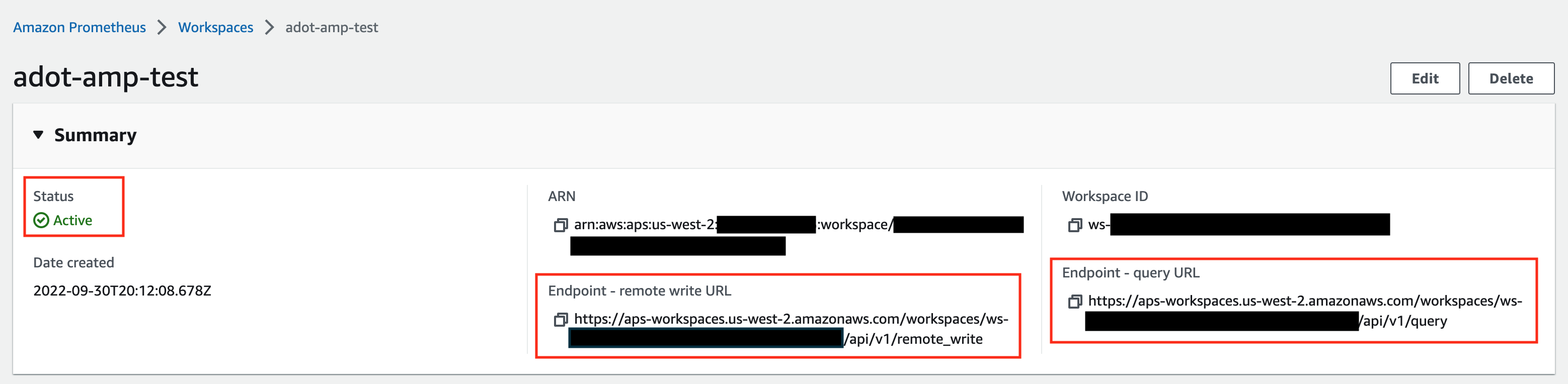

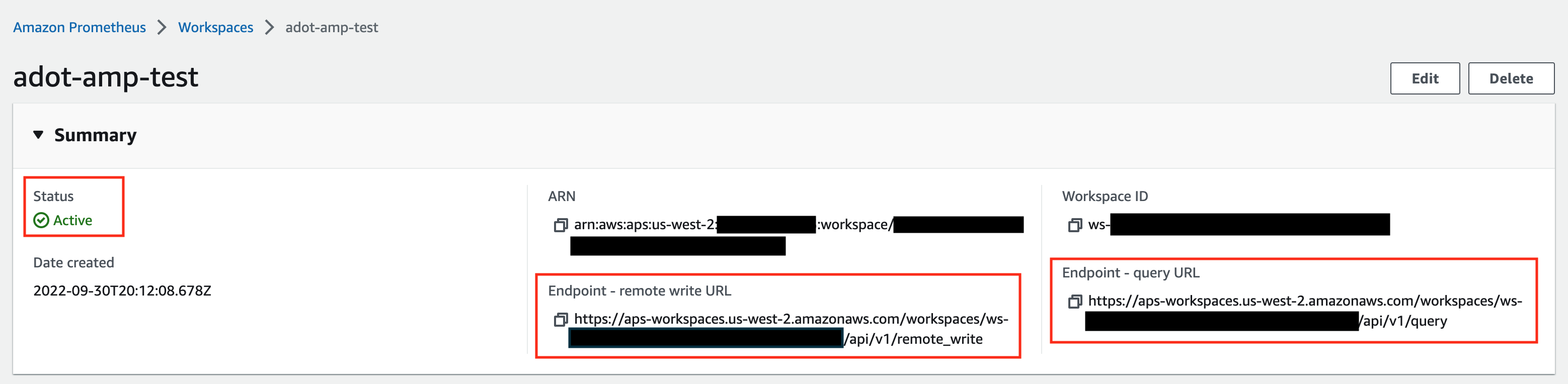

Make a note of the URLs displayed for Endpoint - remote write URL and Endpoint - query URL. You’ll need them when you configure your ADOT package to remote write metrics to this workspace and when you query metrics from this workspace. Make sure the workspace’s Status shows Active before proceeding to the next step.

For additional options (e.g. through the CLI) and configurations (e.g. adding a tag) to create an AMP workspace, refer to the AWS AMP create a workspace guide.
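If you kept only the workspace ID, both endpoint URLs can be rebuilt from it. The following minimal Python sketch assumes the standard AMP endpoint form https://aps-workspaces.<region>.amazonaws.com/workspaces/<workspace-id>, with /api/v1/remote_write and /api/v1/query appended. Compare the result with the URLs the console shows. The function name and the workspace ID in the usage example are hypothetical.

```python
from typing import Dict


def amp_endpoints(region: str, workspace_id: str) -> Dict[str, str]:
    """Build the AMP workspace base URL plus its remote write and query endpoints."""
    base = f"https://aps-workspaces.{region}.amazonaws.com/workspaces/{workspace_id}"
    return {
        # The base URL is what replaces <AMP-WORKSPACE> in the ADOT package config.
        "workspace": base,
        "remote_write": f"{base}/api/v1/remote_write",
        "query": f"{base}/api/v1/query",
    }


if __name__ == "__main__":
    for name, url in amp_endpoints("us-west-2", "ws-example").items():
        print(f"{name}: {url}")
```

The workspace entry is the value that replaces the <AMP-WORKSPACE> placeholder in the ADOT package config, which already appends /api/v1/remote_write itself.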

Create a cluster with IRSA

To enable ADOT pods that run in EKS Anywhere clusters to authenticate with AWS services, a user needs to set up IRSA at cluster creation. The EKS Anywhere cluster spec for Pod IAM gives step-by-step guidance on how to do so. There are a few things to keep in mind while working through the guide:

- While completing the step Create an OIDC provider, a user should:

  - create the S3 bucket in the us-west-2 region, and

  - attach an IAM policy with appropriate AMP access to the IAM role.

  Below is an example that gives full access to AMP actions and resources. Refer to the AMP IAM permissions and policies guide for more customized options.

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Action": [
        "aps:*"
      ],
      "Effect": "Allow",
      "Resource": "*"
    }
  ]
}

- While completing the step deploy pod identity webhook, a user should:

  - make sure the service account is created in the same namespace as the ADOT package (which is controlled by the spec.targetNamespace field in the package definition file);

  - take note of the service account created in this step, as it will be used in the ADOT package installation;

  - add the annotation eks.amazonaws.com/role-arn: <role-arn> to the created service account.

  By default, the service account is installed in the default namespace with the name pod-identity-webhook, and the annotation eks.amazonaws.com/role-arn: <role-arn> is not added automatically.
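Because the role annotation is not added automatically, you add it yourself. The following minimal Python sketch builds the kubectl annotate command for the default service account name and namespace mentioned above, after checking that the value looks like an IAM role ARN. The ARN pattern is a loose approximation, and the function name and example ARN are hypothetical.

```python
import re
import shlex
from typing import List

# Loose IAM role ARN check: arn:<partition>:iam::<12-digit account>:role/<path/name>.
ROLE_ARN = re.compile(r"arn:aws[\w-]*:iam::\d{12}:role/[\w+=,.@/-]+")


def annotate_command(role_arn: str, service_account: str = "pod-identity-webhook",
                     namespace: str = "default") -> List[str]:
    """Build the kubectl command that adds the IRSA role annotation to a service account."""
    if not ROLE_ARN.fullmatch(role_arn):
        raise ValueError(f"not an IAM role ARN: {role_arn!r}")
    return ["kubectl", "annotate", "serviceaccount", service_account,
            "-n", namespace, f"eks.amazonaws.com/role-arn={role_arn}"]


if __name__ == "__main__":
    print(shlex.join(annotate_command("arn:aws:iam::123456789012:role/adot-amp-role")))
```

If the service account already carries a different value for this annotation, kubectl annotate refuses to change it unless you add --overwrite.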

IRSA Set Up Test

To ensure IRSA is set up properly in the cluster, a user can create an awscli pod for testing.

- Apply the following YAML file in the cluster:

kubectl apply -f - <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: awscli
spec:
  serviceAccountName: pod-identity-webhook
  containers:
  - image: amazon/aws-cli
    command:
      - sleep
      - "infinity"
    name: awscli
    resources: {}
  dnsPolicy: ClusterFirst
  restartPolicy: Always
EOF

- Exec into the pod:

kubectl exec -it awscli -- /bin/bash

- Check whether the pod can list AMP workspaces:

aws amp list-workspaces --region=us-west-2

- If the pod has issues listing AMP workspaces, revisit the IRSA setup guidance before proceeding to the next step.

- Exit the pod:

exit

Install the ADOT package

The ADOT package will be created with three components:

- the Prometheus Receiver, which is designed to be a drop-in replacement for a Prometheus Server and is capable of scraping metrics from microservices instrumented with the Prometheus client library;

- the Prometheus Remote Write Exporter, which uses the remote write feature to send metrics to AMP for long-term storage;

- the Sigv4 Authentication Extension, which enables ADOT pods to authenticate to AWS services.

Follow the steps below to complete the ADOT package installation:

- Update the following config file. Review the comments carefully and replace everything wrapped in <> brackets. Note that this configuration aims to mimic the Prometheus community helm chart. A user can tailor the scrape targets further by modifying the receiver section below. Refer to the ADOT package spec for additional explanations of each section.

apiVersion: packages.eks.amazonaws.com/v1alpha1
kind: Package
metadata:
  name: my-adot
  namespace: eksa-packages
spec:
  packageName: adot
  targetNamespace: default # this needs to match the namespace of the serviceAccount below
  config: |
    mode: deployment
    serviceAccount:
      # Specifies whether a service account should be created
      create: false
      # Annotations to add to the service account
      annotations: {}
      # Specifies the serviceAccount annotated with eks.amazonaws.com/role-arn.
      name: "pod-identity-webhook" # name of the service account created at step Create a cluster with IRSA
    config:
      extensions:
        sigv4auth:
          region: "us-west-2"
          service: "aps"
          assume_role:
            sts_region: "us-west-2"
      receivers:
        # Scrape configuration for the Prometheus Receiver
        prometheus:
          config:
            global:
              scrape_interval: 15s
              scrape_timeout: 10s
            scrape_configs:
              - job_name: kubernetes-apiservers
                bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
                kubernetes_sd_configs:
                  - role: endpoints
                relabel_configs:
                  - action: keep
                    regex: default;kubernetes;https
                    source_labels:
                      - __meta_kubernetes_namespace
                      - __meta_kubernetes_service_name
                      - __meta_kubernetes_endpoint_port_name
                scheme: https
                tls_config:
                  ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
                  insecure_skip_verify: false
              - job_name: kubernetes-nodes
                bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
                kubernetes_sd_configs:
                  - role: node
                relabel_configs:
                  - action: labelmap
                    regex: __meta_kubernetes_node_label_(.+)
                  - replacement: kubernetes.default.svc:443
                    target_label: __address__
                  - regex: (.+)
                    replacement: /api/v1/nodes/$$1/proxy/metrics
                    source_labels:
                      - __meta_kubernetes_node_name
                    target_label: __metrics_path__
                scheme: https
                tls_config:
                  ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
                  insecure_skip_verify: false
              - job_name: kubernetes-nodes-cadvisor
                bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
                kubernetes_sd_configs:
                  - role: node
                relabel_configs:
                  - action: labelmap
                    regex: __meta_kubernetes_node_label_(.+)
                  - replacement: kubernetes.default.svc:443
                    target_label: __address__
                  - regex: (.+)
                    replacement: /api/v1/nodes/$$1/proxy/metrics/cadvisor
                    source_labels:
                      - __meta_kubernetes_node_name
                    target_label: __metrics_path__
                scheme: https
                tls_config:
                  ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
                  insecure_skip_verify: false
              - job_name: kubernetes-service-endpoints
                kubernetes_sd_configs:
                  - role: endpoints
                relabel_configs:
                  - action: keep
                    regex: true
                    source_labels:
                      - __meta_kubernetes_service_annotation_prometheus_io_scrape
                  - action: replace
                    regex: (https?)
                    source_labels:
                      - __meta_kubernetes_service_annotation_prometheus_io_scheme
                    target_label: __scheme__
                  - action: replace
                    regex: (.+)
                    source_labels:
                      - __meta_kubernetes_service_annotation_prometheus_io_path
                    target_label: __metrics_path__
                  - action: replace
                    regex: ([^:]+)(?::\d+)?;(\d+)
                    replacement: $$1:$$2
                    source_labels:
                      - __address__
                      - __meta_kubernetes_service_annotation_prometheus_io_port
                    target_label: __address__
                  - action: labelmap
                    regex: __meta_kubernetes_service_annotation_prometheus_io_param_(.+)
                    replacement: __param_$$1
                  - action: labelmap
                    regex: __meta_kubernetes_service_label_(.+)
                  - action: replace
                    source_labels:
                      - __meta_kubernetes_namespace
                    target_label: kubernetes_namespace
                  - action: replace
                    source_labels:
                      - __meta_kubernetes_service_name
                    target_label: kubernetes_name
                  - action: replace
                    source_labels:
                      - __meta_kubernetes_pod_node_name
                    target_label: kubernetes_node
              - job_name: kubernetes-service-endpoints-slow
                kubernetes_sd_configs:
                  - role: endpoints
                relabel_configs:
                  - action: keep
                    regex: true
                    source_labels:
                      - __meta_kubernetes_service_annotation_prometheus_io_scrape_slow
                  - action: replace
                    regex: (https?)
                    source_labels:
                      - __meta_kubernetes_service_annotation_prometheus_io_scheme
                    target_label: __scheme__
                  - action: replace
                    regex: (.+)
                    source_labels:
                      - __meta_kubernetes_service_annotation_prometheus_io_path
                    target_label: __metrics_path__
                  - action: replace
                    regex: ([^:]+)(?::\d+)?;(\d+)
                    replacement: $$1:$$2
                    source_labels:
                      - __address__
                      - __meta_kubernetes_service_annotation_prometheus_io_port
                    target_label: __address__
                  - action: labelmap
                    regex: __meta_kubernetes_service_annotation_prometheus_io_param_(.+)
                    replacement: __param_$$1
                  - action: labelmap
                    regex: __meta_kubernetes_service_label_(.+)
                  - action: replace
                    source_labels:
                      - __meta_kubernetes_namespace
                    target_label: kubernetes_namespace
                  - action: replace
                    source_labels:
                      - __meta_kubernetes_service_name
                    target_label: kubernetes_name
                  - action: replace
                    source_labels:
                      - __meta_kubernetes_pod_node_name
                    target_label: kubernetes_node
                scrape_interval: 5m
                scrape_timeout: 30s
              - job_name: prometheus-pushgateway
                kubernetes_sd_configs:
                  - role: service
                relabel_configs:
                  - action: keep
                    regex: pushgateway
                    source_labels:
                      - __meta_kubernetes_service_annotation_prometheus_io_probe
              - job_name: kubernetes-services
                kubernetes_sd_configs:
                  - role: service
                metrics_path: /probe
                params:
                  module:
                    - http_2xx
                relabel_configs:
                  - action: keep
                    regex: true
                    source_labels:
                      - __meta_kubernetes_service_annotation_prometheus_io_probe
                  - source_labels:
                      - __address__
                    target_label: __param_target
                  - replacement: blackbox
                    target_label: __address__
                  - source_labels:
                      - __param_target
                    target_label: instance
                  - action: labelmap
                    regex: __meta_kubernetes_service_label_(.+)
                  - source_labels:
                      - __meta_kubernetes_namespace
                    target_label: kubernetes_namespace
                  - source_labels:
                      - __meta_kubernetes_service_name
                    target_label: kubernetes_name
              - job_name: kubernetes-pods
                kubernetes_sd_configs:
                  - role: pod
                relabel_configs:
                  - action: keep
                    regex: true
                    source_labels:
                      - __meta_kubernetes_pod_annotation_prometheus_io_scrape
                  - action: replace
                    regex: (https?)
                    source_labels:
                      - __meta_kubernetes_pod_annotation_prometheus_io_scheme
                    target_label: __scheme__
                  - action: replace
                    regex: (.+)
                    source_labels:
                      - __meta_kubernetes_pod_annotation_prometheus_io_path
                    target_label: __metrics_path__
                  - action: replace
                    regex: ([^:]+)(?::\d+)?;(\d+)
                    replacement: $$1:$$2
                    source_labels:
                      - __address__
                      - __meta_kubernetes_pod_annotation_prometheus_io_port
                    target_label: __address__
                  - action: labelmap
                    regex: __meta_kubernetes_pod_annotation_prometheus_io_param_(.+)
                    replacement: __param_$$1
                  - action: labelmap
                    regex: __meta_kubernetes_pod_label_(.+)
                  - action: replace
                    source_labels:
                      - __meta_kubernetes_namespace
                    target_label: kubernetes_namespace
                  - action: replace
                    source_labels:
                      - __meta_kubernetes_pod_name
                    target_label: kubernetes_pod_name
                  - action: drop
                    regex: Pending|Succeeded|Failed|Completed
                    source_labels:
                      - __meta_kubernetes_pod_phase
              - job_name: kubernetes-pods-slow
                scrape_interval: 5m
                scrape_timeout: 30s
                kubernetes_sd_configs:
                  - role: pod
                relabel_configs:
                  - action: keep
                    regex: true
                    source_labels:
                      - __meta_kubernetes_pod_annotation_prometheus_io_scrape_slow
                  - action: replace
                    regex: (https?)
                    source_labels:
                      - __meta_kubernetes_pod_annotation_prometheus_io_scheme
                    target_label: __scheme__
                  - action: replace
                    regex: (.+)
                    source_labels:
                      - __meta_kubernetes_pod_annotation_prometheus_io_path
                    target_label: __metrics_path__
                  - action: replace
                    regex: ([^:]+)(?::\d+)?;(\d+)
                    replacement: $$1:$$2
                    source_labels:
                      - __address__
                      - __meta_kubernetes_pod_annotation_prometheus_io_port
                    target_label: __address__
                  - action: labelmap
                    regex: __meta_kubernetes_pod_annotation_prometheus_io_param_(.+)
                    replacement: __param_$$1
                  - action: labelmap
                    regex: __meta_kubernetes_pod_label_(.+)
                  - action: replace
                    source_labels:
                      - __meta_kubernetes_namespace
                    target_label: namespace
                  - action: replace
                    source_labels:
                      - __meta_kubernetes_pod_name
                    target_label: pod
                  - action: drop
                    regex: Pending|Succeeded|Failed|Completed
                    source_labels:
                      - __meta_kubernetes_pod_phase
      processors:
        batch/metrics:
          timeout: 60s
      exporters:
        logging:
          logLevel: info
        prometheusremotewrite:
          endpoint: "<AMP-WORKSPACE>/api/v1/remote_write" # Replace with your AMP workspace
          auth:
            authenticator: sigv4auth
      service:
        extensions:
          - health_check
          - memory_ballast
          - sigv4auth
        pipelines:
          metrics:
            receivers: [prometheus]
            processors: [batch/metrics]
            exporters: [logging, prometheusremotewrite]

- Bind additional roles to the service account pod-identity-webhook (created at step Create a cluster with IRSA) by applying the following file in the cluster (using kubectl apply -f <file-name>). This is needed because pod-identity-webhook, by design, does not have sufficient permissions to scrape all the Kubernetes targets listed in the ADOT config file above. If you modify the Prometheus Receiver, update the file below to add or remove permissions before applying it.

---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: otel-prometheus-role
rules:
  - apiGroups:
      - ""
    resources:
      - nodes
      - nodes/proxy
      - services
      - endpoints
      - pods
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - extensions
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - nonResourceURLs:
      - /metrics
    verbs:
      - get
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: otel-prometheus-role-binding
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: otel-prometheus-role
subjects:
  - kind: ServiceAccount
    name: pod-identity-webhook # replace with name of the service account created at step Create a cluster with IRSA
    namespace: default # replace with namespace where the service account was created at step Create a cluster with IRSA

- Use the ADOT package config file defined above to complete the ADOT installation. Refer to the ADOT installation guide for details.
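Several rules in the scrape configuration above use Prometheus action: replace relabeling. The values of source_labels are joined with ";", the anchored regex is matched against that string, and on a match target_label is set to replacement, with $1 and $2 filled in from the capture groups. In the ADOT config the replacements are written as $$1 because the collector treats $ as the start of an environment-variable reference, and $$ produces a literal $. The following is a simplified Python model of that single action, useful for reasoning about what a rule will produce. It is not Prometheus's implementation, it omits some edge cases, and the function name is hypothetical.

```python
import re
from typing import Dict, List


def relabel_replace(labels: Dict[str, str], source_labels: List[str], regex: str,
                    target_label: str, replacement: str = "$1",
                    separator: str = ";") -> Dict[str, str]:
    """Simplified model of a Prometheus `action: replace` relabel step."""
    # Join the source label values; missing labels count as empty strings.
    value = separator.join(labels.get(name, "") for name in source_labels)
    match = re.fullmatch(regex, value)  # Prometheus anchors the regex at both ends
    if match is None:
        return dict(labels)  # no match: labels are left unchanged
    # Prometheus writes $1, $2 ...; Python's Match.expand() expects \1, \2 ...
    template = re.sub(r"\$(\d+)", r"\\\1", replacement)
    result = dict(labels)
    result[target_label] = match.expand(template)
    return result


if __name__ == "__main__":
    pod = {"__address__": "10.0.0.5:8080",
           "__meta_kubernetes_pod_annotation_prometheus_io_port": "9102"}
    print(relabel_replace(pod, ["__address__",
                                "__meta_kubernetes_pod_annotation_prometheus_io_port"],
                          r"([^:]+)(?::\d+)?;(\d+)", "__address__", "$1:$2"))
```

The address rule rewrites the scrape target to the port named in the prometheus.io/port annotation. When the annotation is missing, the joined value ends in ";" with no digits, the regex does not match, and the discovered address is kept.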

ADOT Package Test

To ensure the ADOT package is installed correctly in the cluster, a user can perform the following tests.

Check pod logs

Check the ADOT pod logs using kubectl logs <adot-pod-name> -n <namespace>. They should look similar to the following:

...

2022-09-30T23:22:59.184Z info service/telemetry.go:103 Setting up own telemetry...

2022-09-30T23:22:59.184Z info service/telemetry.go:138 Serving Prometheus metrics {"address": "0.0.0.0:8888", "level": "basic"}

2022-09-30T23:22:59.185Z info components/components.go:30 In development component. May change in the future. {"kind": "exporter", "data_type": "metrics", "name": "logging", "stability": "in development"}
2022-09-30T23:22:59.186Z info extensions/extensions.go:42 Starting extensions...
2022-09-30T23:22:59.186Z info extensions/extensions.go:45 Extension is starting... {"kind": "extension", "name": "health_check"}
2022-09-30T23:22:59.186Z info healthcheckextension@v0.58.0/healthcheckextension.go:44 Starting health_check extension {"kind": "extension", "name": "health_check", "config": {"Endpoint":"0.0.0.0:13133","TLSSetting":null,"CORS":null,"Auth":null,"MaxRequestBodySize":0,"IncludeMetadata":false,"Path":"/","CheckCollectorPipeline":{"Enabled":false,"Interval":"5m","ExporterFailureThreshold":5}}}
2022-09-30T23:22:59.186Z info extensions/extensions.go:49 Extension started. {"kind": "extension", "name": "health_check"}
2022-09-30T23:22:59.186Z info extensions/extensions.go:45 Extension is starting... {"kind": "extension", "name": "memory_ballast"}
2022-09-30T23:22:59.187Z info ballastextension/memory_ballast.go:52 Setting memory ballast {"kind": "extension", "name": "memory_ballast", "MiBs": 0}
2022-09-30T23:22:59.187Z info extensions/extensions.go:49 Extension started. {"kind": "extension", "name": "memory_ballast"}
2022-09-30T23:22:59.187Z info extensions/extensions.go:45 Extension is starting... {"kind": "extension", "name": "sigv4auth"}
2022-09-30T23:22:59.187Z info extensions/extensions.go:49 Extension started. {"kind": "extension", "name": "sigv4auth"}
2022-09-30T23:22:59.187Z info pipelines/pipelines.go:74 Starting exporters...
2022-09-30T23:22:59.187Z info pipelines/pipelines.go:78 Exporter is starting... {"kind": "exporter", "data_type": "metrics", "name": "logging"}
2022-09-30T23:22:59.187Z info pipelines/pipelines.go:82 Exporter started. {"kind": "exporter", "data_type": "metrics", "name": "logging"}
2022-09-30T23:22:59.187Z info pipelines/pipelines.go:78 Exporter is starting... {"kind": "exporter", "data_type": "metrics", "name": "prometheusremotewrite"}
2022-09-30T23:22:59.187Z info pipelines/pipelines.go:82 Exporter started. {"kind": "exporter", "data_type": "metrics", "name": "prometheusremotewrite"}
2022-09-30T23:22:59.187Z info pipelines/pipelines.go:86 Starting processors...
2022-09-30T23:22:59.187Z info pipelines/pipelines.go:90 Processor is starting... {"kind": "processor", "name": "batch/metrics", "pipeline": "metrics"}
2022-09-30T23:22:59.187Z info pipelines/pipelines.go:94 Processor started. {"kind": "processor", "name": "batch/metrics", "pipeline": "metrics"}
2022-09-30T23:22:59.187Z info pipelines/pipelines.go:98 Starting receivers...
2022-09-30T23:22:59.187Z info pipelines/pipelines.go:102 Receiver is starting... {"kind": "receiver", "name": "prometheus", "pipeline": "metrics"}
2022-09-30T23:22:59.187Z info kubernetes/kubernetes.go:326 Using pod service account via in-cluster config {"kind": "receiver", "name": "prometheus", "pipeline": "metrics", "discovery": "kubernetes"}
2022-09-30T23:22:59.188Z info kubernetes/kubernetes.go:326 Using pod service account via in-cluster config {"kind": "receiver", "name": "prometheus", "pipeline": "metrics", "discovery": "kubernetes"}
2022-09-30T23:22:59.188Z info kubernetes/kubernetes.go:326 Using pod service account via in-cluster config {"kind": "receiver", "name": "prometheus", "pipeline": "metrics", "discovery": "kubernetes"}
2022-09-30T23:22:59.188Z info kubernetes/kubernetes.go:326 Using pod service account via in-cluster config {"kind": "receiver", "name": "prometheus", "pipeline": "metrics", "discovery": "kubernetes"}
2022-09-30T23:22:59.189Z info pipelines/pipelines.go:106 Receiver started. {"kind": "receiver", "name": "prometheus", "pipeline": "metrics"}
2022-09-30T23:22:59.189Z info healthcheck/handler.go:129 Health Check state change {"kind": "extension", "name": "health_check", "status": "ready"}
2022-09-30T23:22:59.189Z info service/collector.go:215 Starting aws-otel-collector... {"Version": "v0.21.1", "NumCPU": 2}
2022-09-30T23:22:59.189Z info service/collector.go:128 Everything is ready. Begin running and processing data.
...
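The final log line, `Everything is ready. Begin running and processing data.`, together with the health check's `"status": "ready"` transition, shows that the collector started cleanly. The bash sketch below checks collector logs piped on stdin for those two markers. The marker strings are copied from the sample output above and may be worded differently in other collector versions. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if the collector log text on stdin shows
# a completed startup. Marker strings are copied from the sample log above.
collector_ready() {
  local logs
  logs="$(cat)"
  # Printed once every pipeline (exporters, processors, receivers) is running.
  grep -q 'Everything is ready. Begin running and processing data.' <<<"$logs" || return 1
  # The health_check extension switched to the "ready" state.
  grep -q '"status": "ready"' <<<"$logs" || return 1
}
```

For example, `kubectl logs <adot-pod-name> -n <namespace> | collector_ready && echo "collector is ready"`, where the pod name and namespace are placeholders for your deployment.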

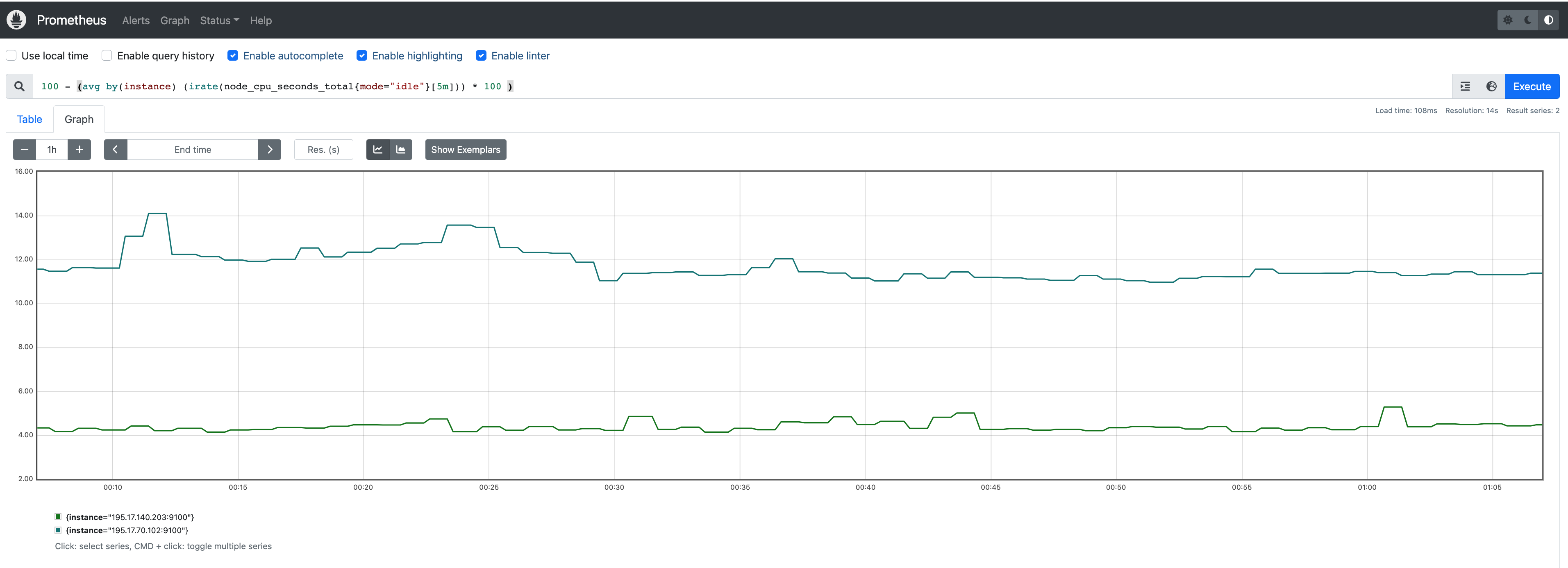

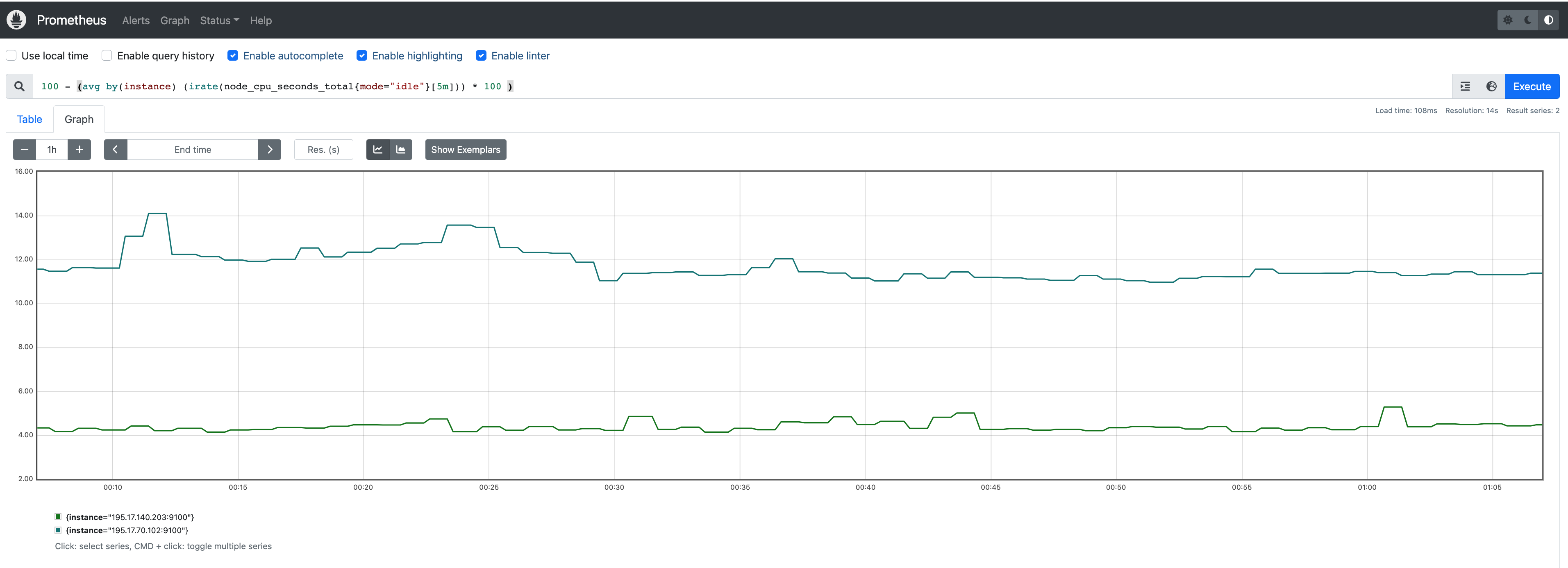

Check AMP endpoint using awscurl

Use the awscurl commands below to check whether AMP received the metrics data sent by ADOT. awscurl is a curl-like tool that signs requests with AWS Signature Version 4. The query should return a response with a status of success.

```shell
pip install awscurl
awscurl -X POST --region us-west-2 --service aps "<amp-query-endpoint>?query=up"
```
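To script this check, you can inspect the response body for a success status. The bash sketch below assumes the endpoint returns the standard Prometheus HTTP API JSON shape (`{"status":"success","data":{"result":[...]}}`). It treats an empty `result` list as "no `up` samples received yet." It is a plain `grep` text match rather than a JSON parser, so a tool such as `jq` is more robust if available. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rough text check of a Prometheus-style query response
# read from stdin. Not a JSON parser; assumes compact or lightly spaced JSON.
amp_query_ok() {
  local body
  body="$(cat)"
  # The top-level "status" field must be "success".
  grep -Eq '"status" *: *"success"' <<<"$body" || return 1
  # An empty "result" list means the query ran but matched no "up" series.
  if grep -Eq '"result" *: *\[ *]' <<<"$body"; then
    return 1
  fi
}
```

Usage: `awscurl -X POST --region us-west-2 --service aps "<amp-query-endpoint>?query=up" | amp_query_ok && echo "AMP has up samples"`.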

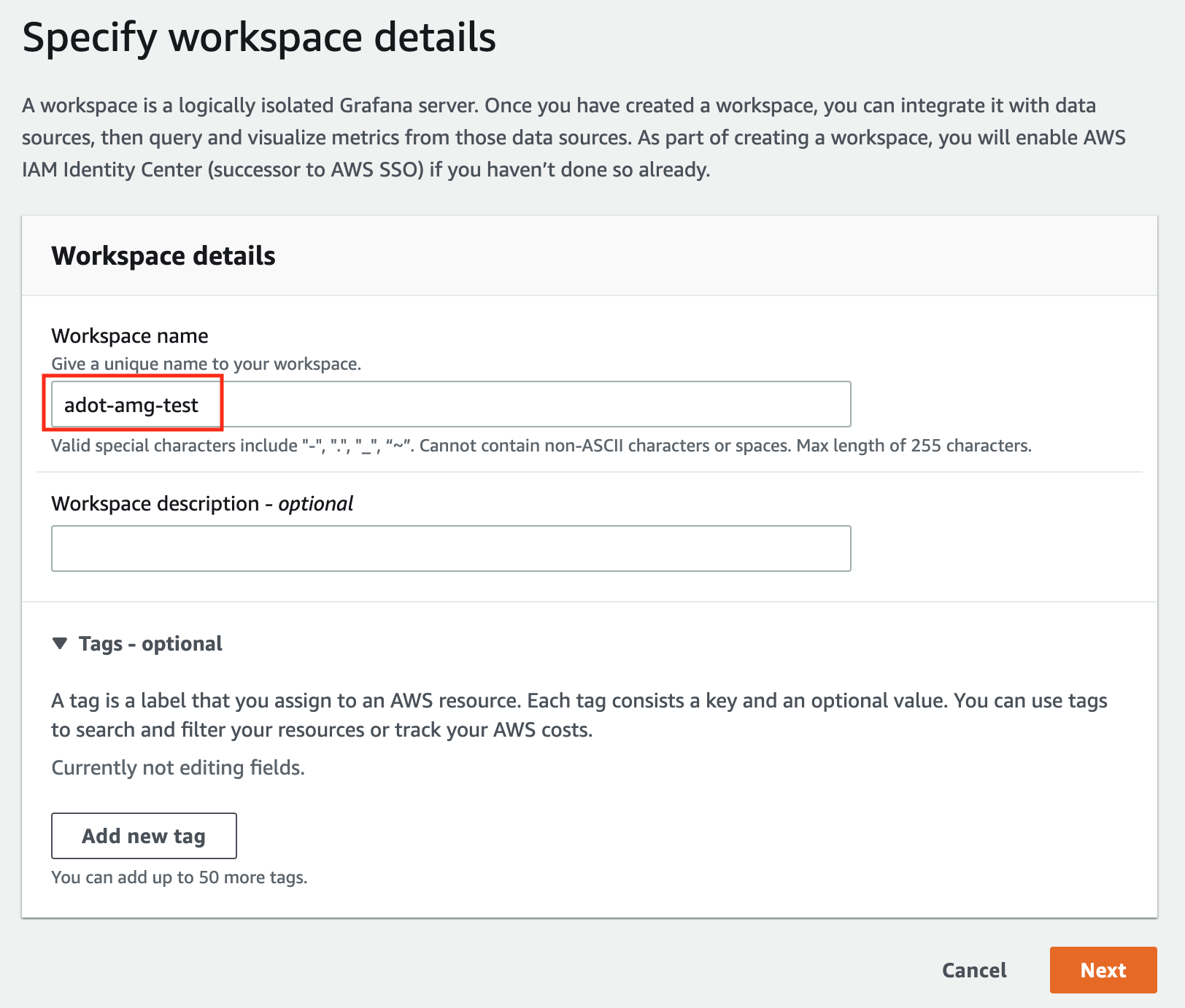

Create an AMG workspace and connect to the AMP workspace

An AMG workspace is created to query metrics from the AMP workspace and visualize the metrics in user-selected or user-built dashboards.

Follow the steps below to create the AMG workspace:

- Enable AWS Single Sign-On (AWS SSO). Refer to IAM Identity Center for details.

- Open the Amazon Managed Grafana console at https://console.aws.amazon.com/grafana/.

- Choose Create workspace.

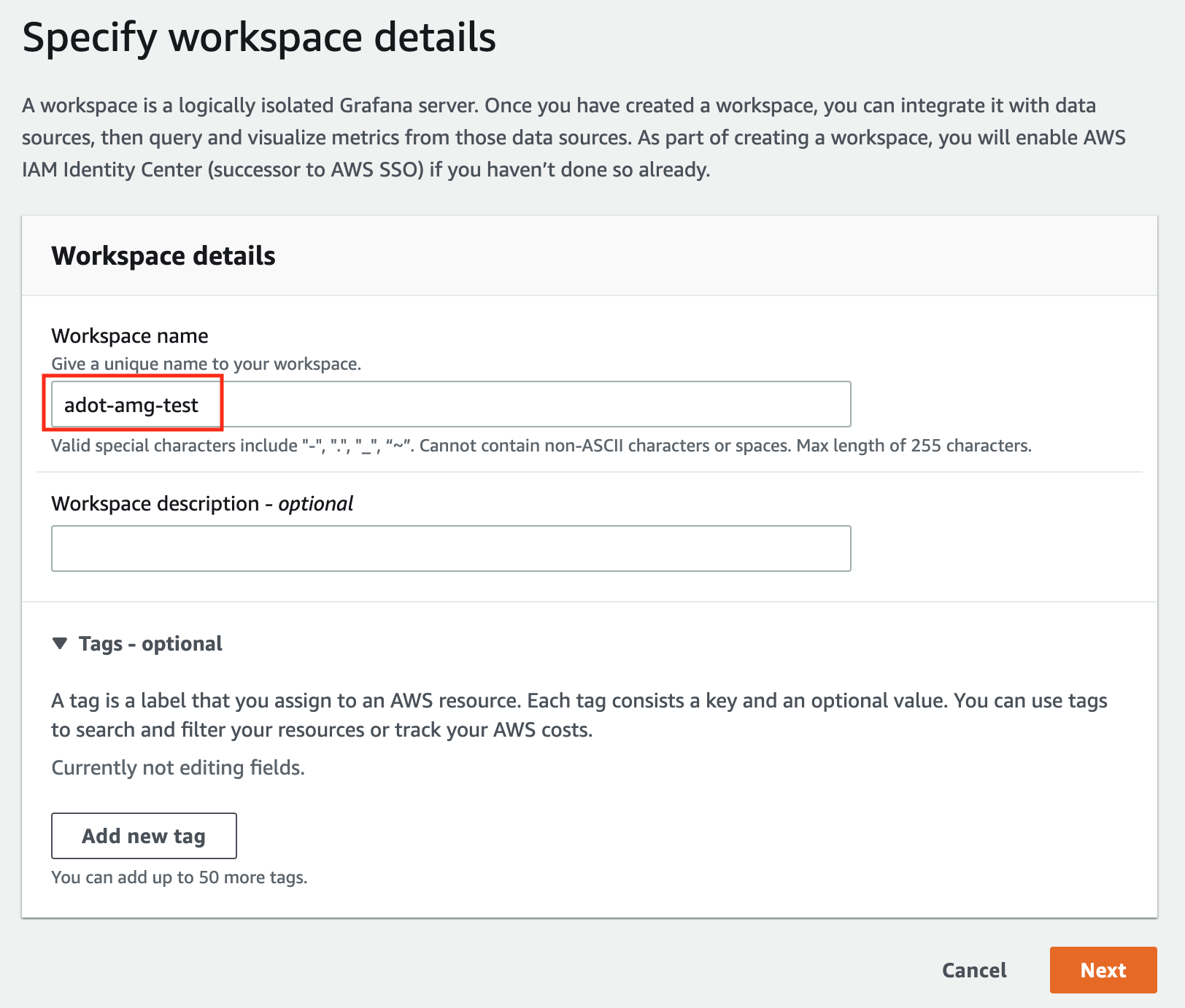

- In the Workspace details window, for Workspace name, enter a name for the workspace.

-

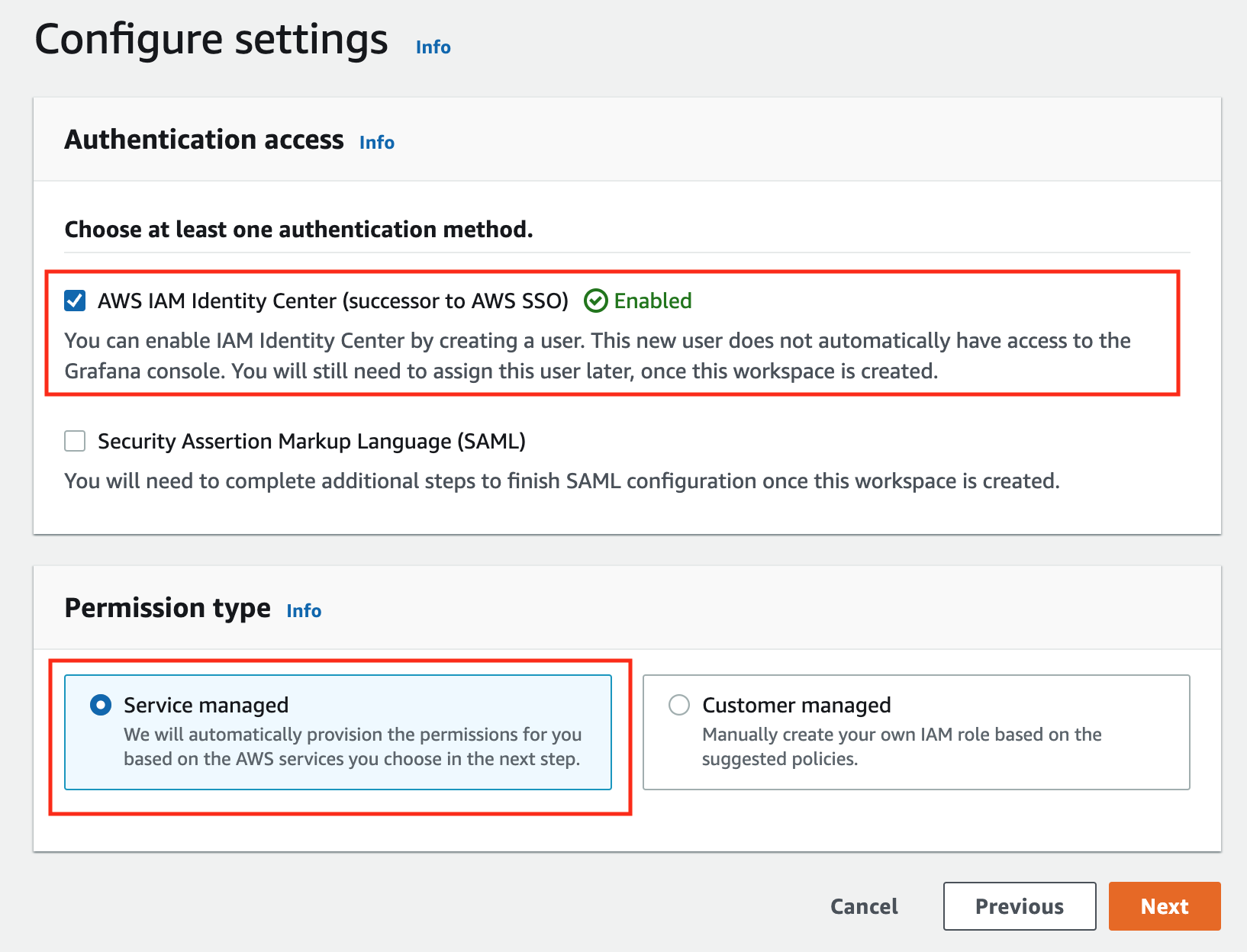

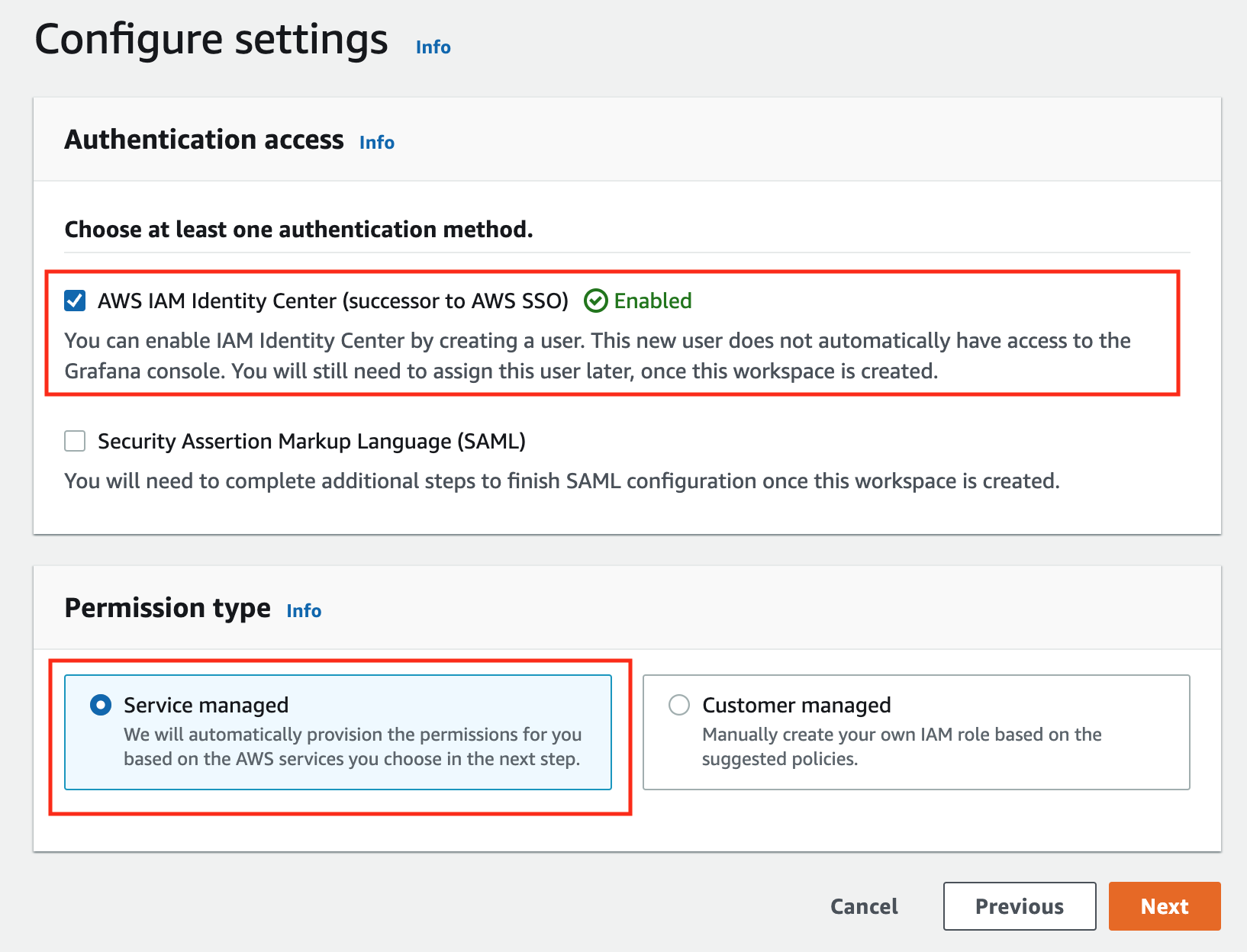

In the config settings window, choose Authentication access by AWS IAM Identity Center, and Permission type of Service managed.

-

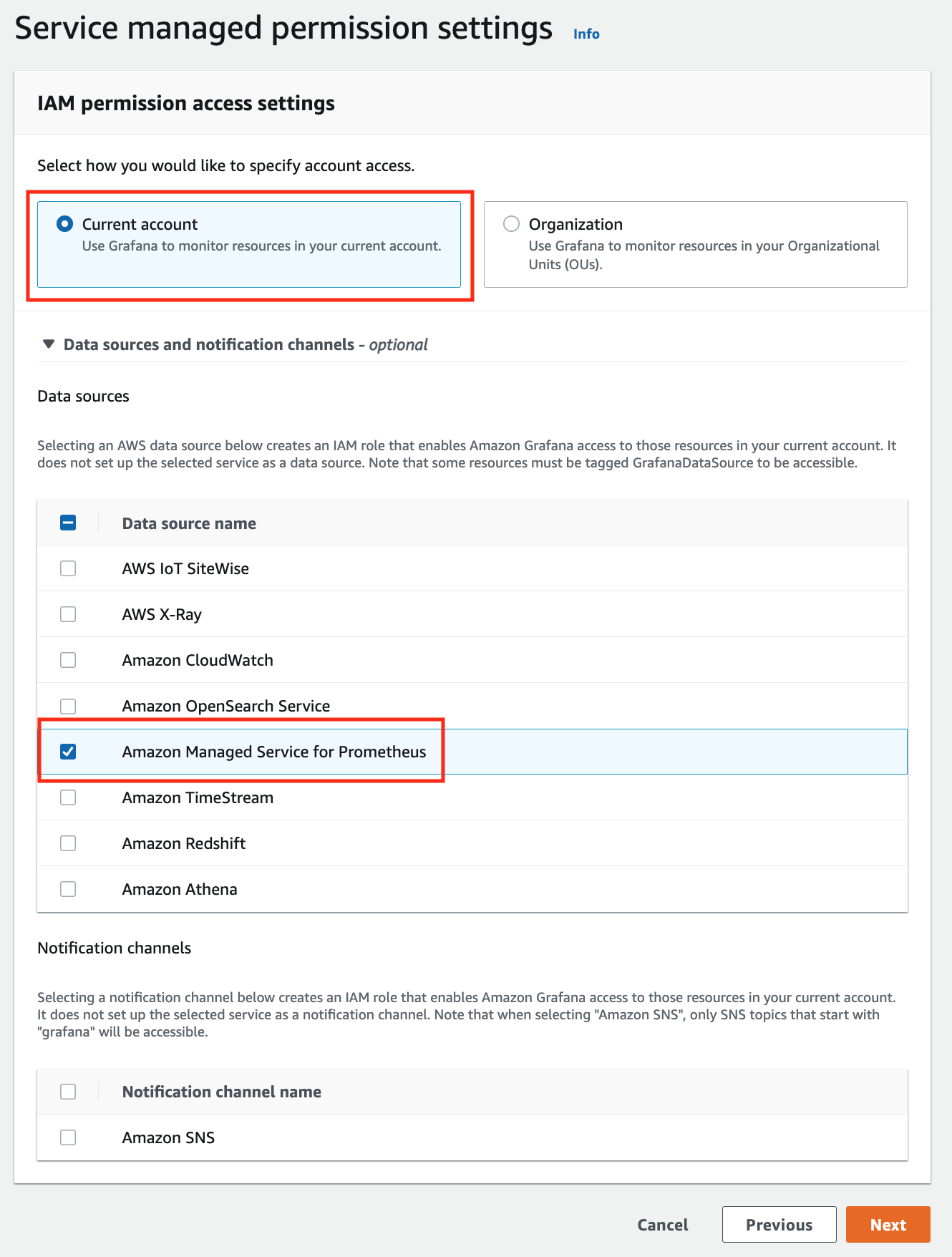

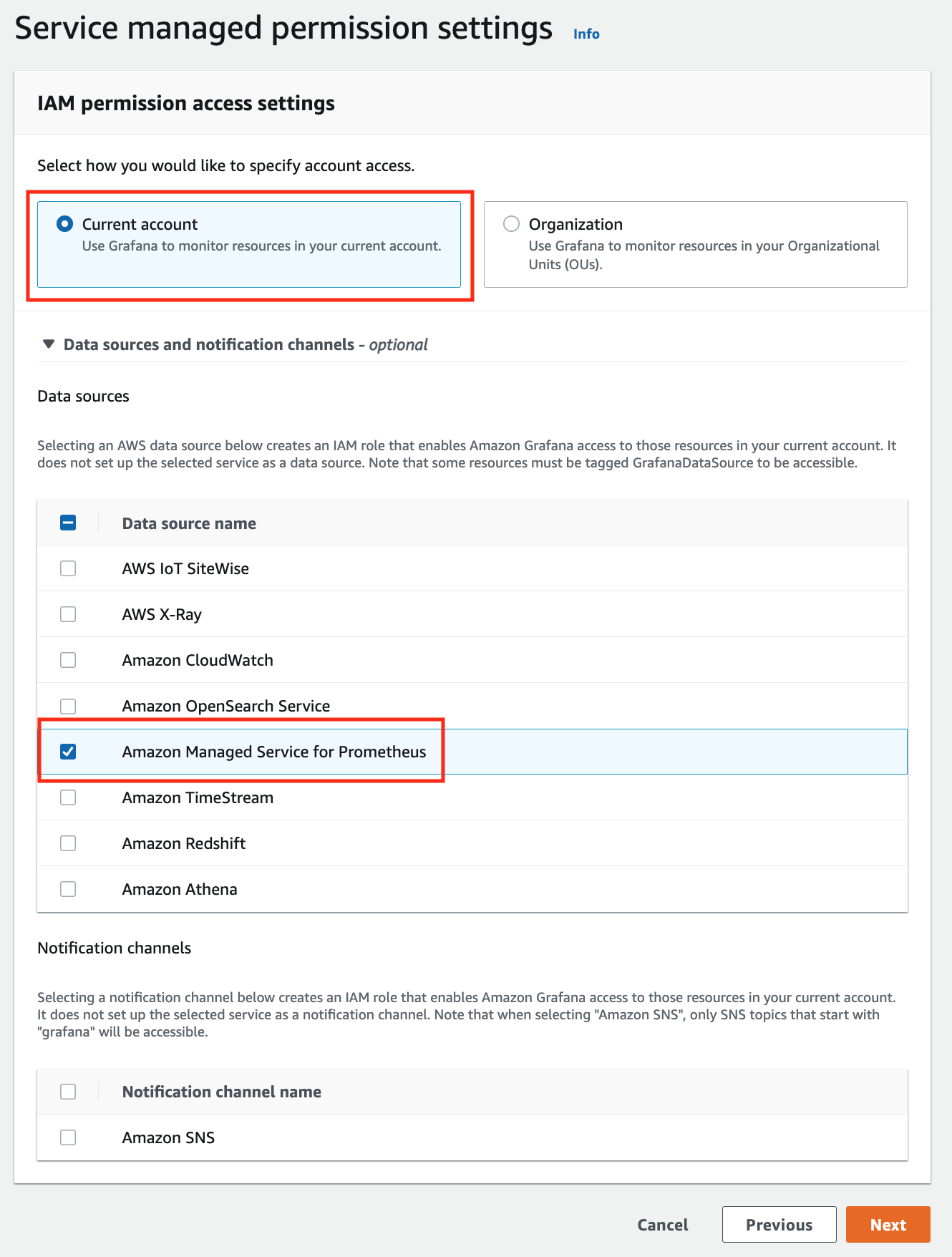

In the IAM permission access setting window, choose Current account access, and Amazon Managed Service for Prometheus as data source.

-

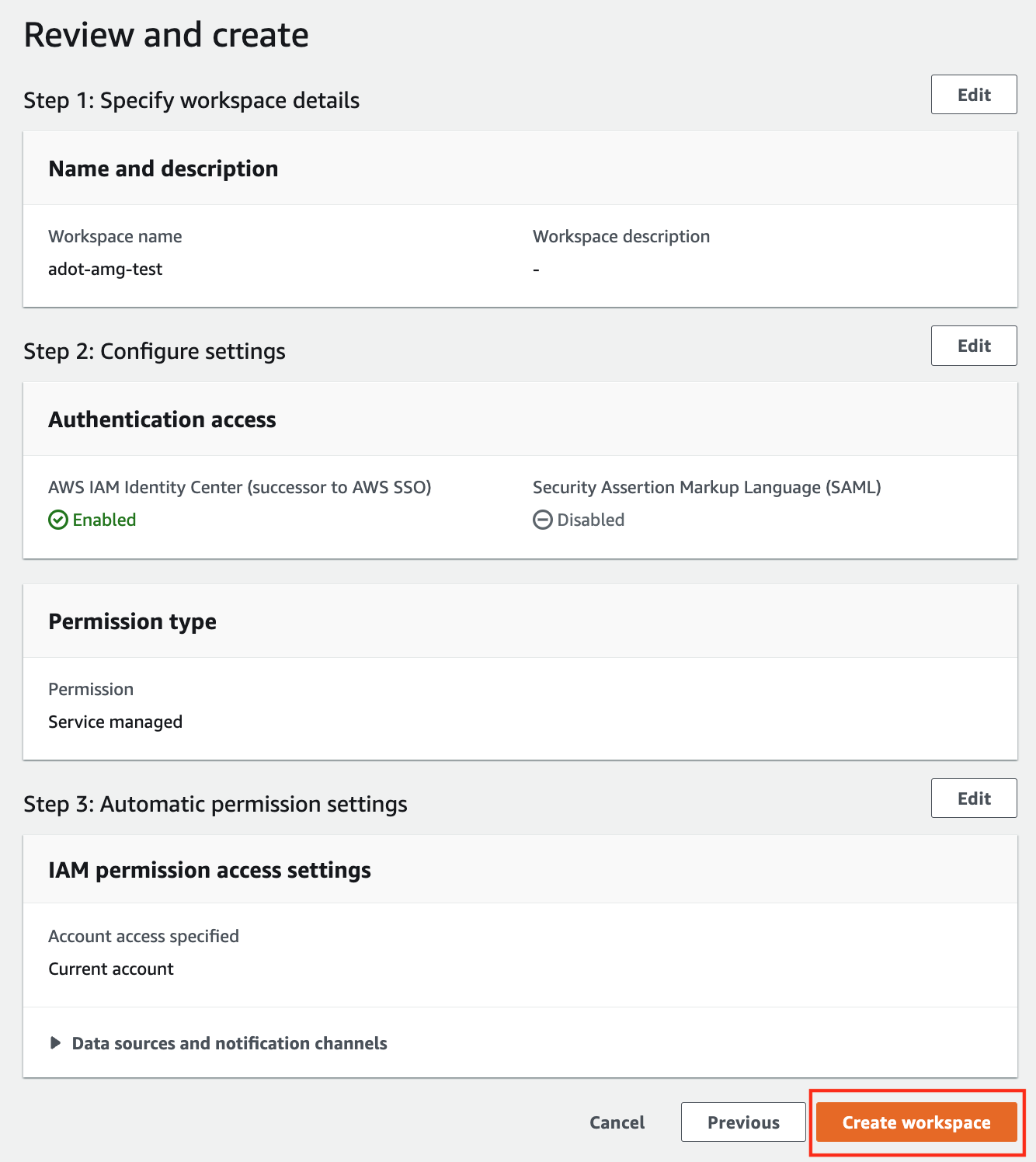

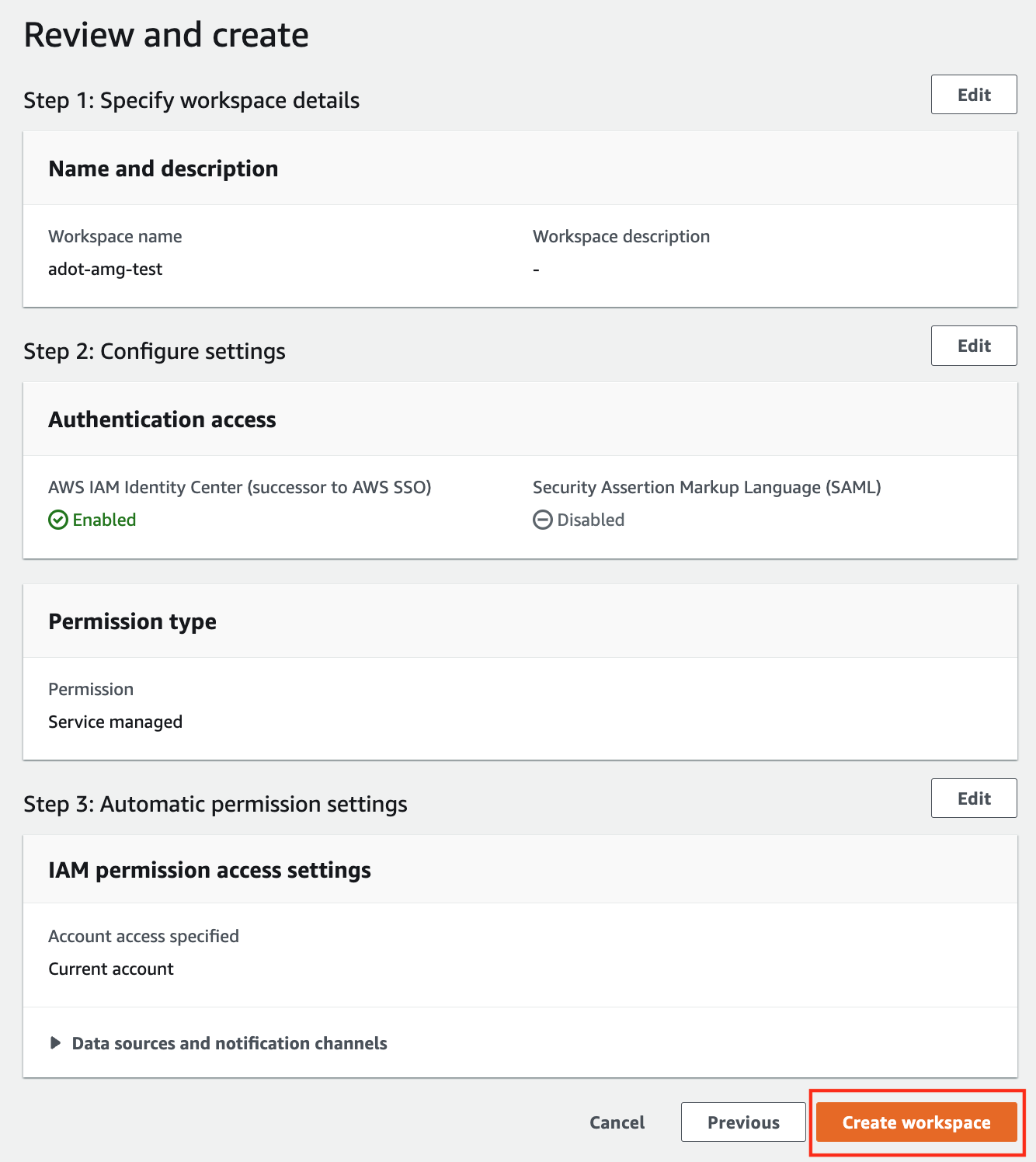

Review all settings and click on Create workspace.

- Once the workspace shows a Status of Active, open it by clicking the Grafana workspace URL. Click Sign in with AWS IAM Identity Center to complete authentication.
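The console choices above map onto flags of the AWS CLI `aws grafana create-workspace` command. The bash sketch below shows one way to express them. The workspace name and the `DRY_RUN` switch are illustrative assumptions. Check the flag values against the CLI reference for your AWS CLI version before you run the sketch without `DRY_RUN=1`.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: AWS CLI equivalent of the console steps above.
# DRY_RUN=1 prints the command instead of calling AWS.
create_amg_workspace() {
  local name="${1:?workspace name required}"
  # AWS_SSO          -> "AWS IAM Identity Center" authentication access
  # SERVICE_MANAGED  -> "Service managed" permission type
  # CURRENT_ACCOUNT  -> "Current account access"
  # PROMETHEUS       -> Amazon Managed Service for Prometheus data source
  local -a cmd=(
    aws grafana create-workspace
    --workspace-name "$name"
    --authentication-providers AWS_SSO
    --permission-type SERVICE_MANAGED
    --account-access-type CURRENT_ACCOUNT
    --workspace-data-sources PROMETHEUS
    --region us-west-2
  )
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    printf '%s\n' "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}
```

`DRY_RUN=1 create_amg_workspace my-amg` only prints the command. After creation, `aws grafana describe-workspace --workspace-id <workspace-id>` reports the workspace status, which should reach `ACTIVE` before you sign in.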

Follow the steps below to add the AMP workspace to AMG.

-

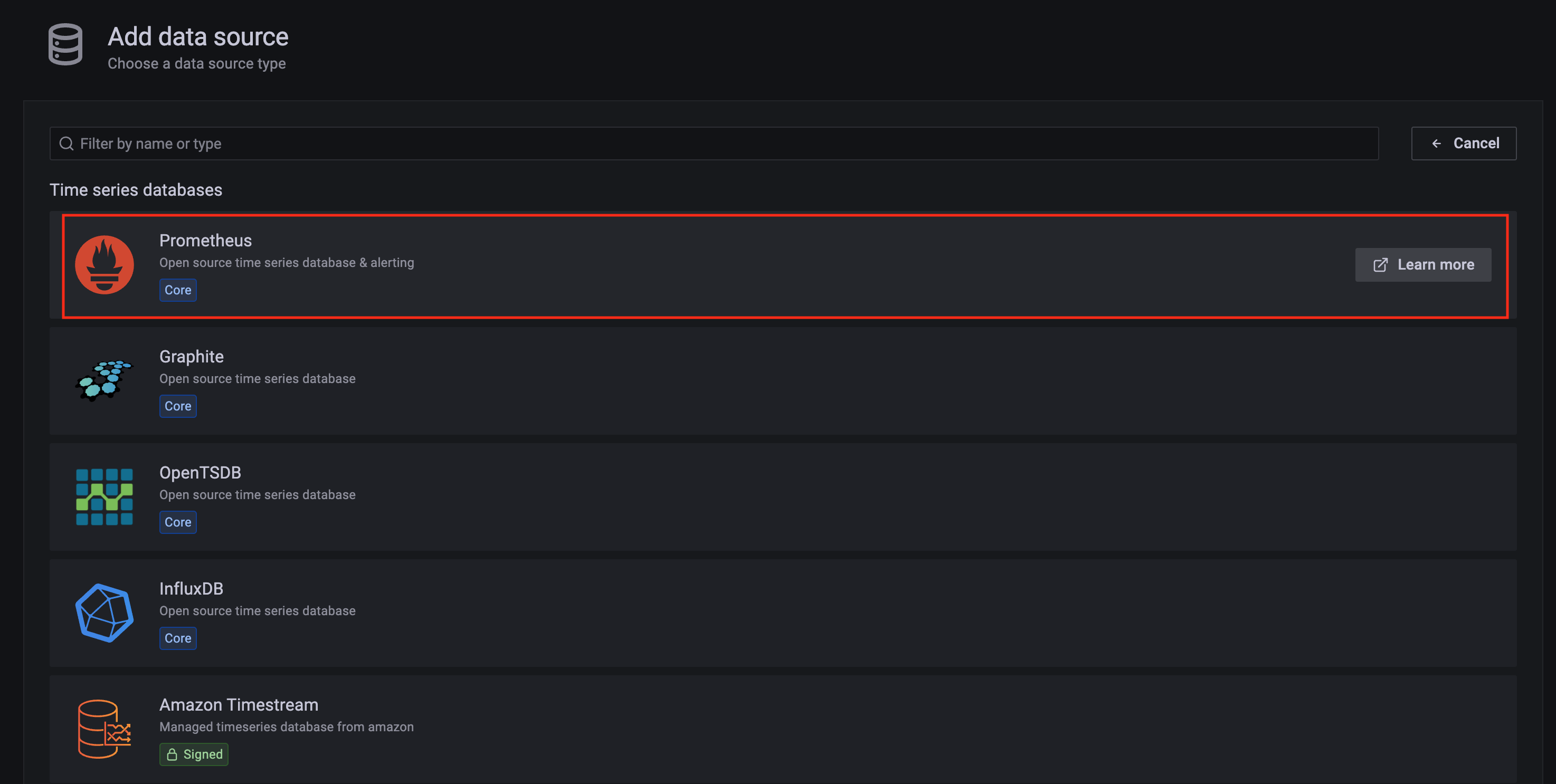

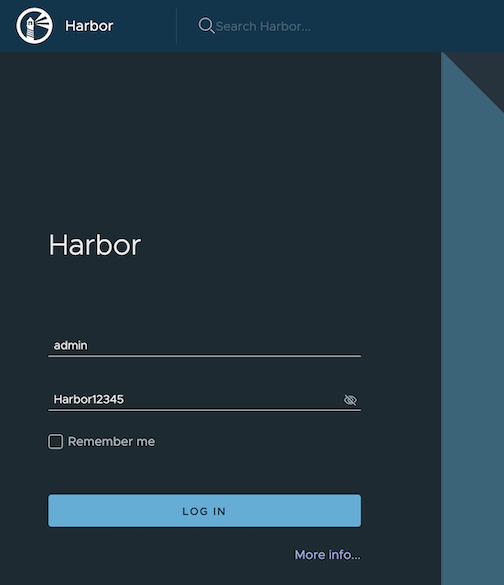

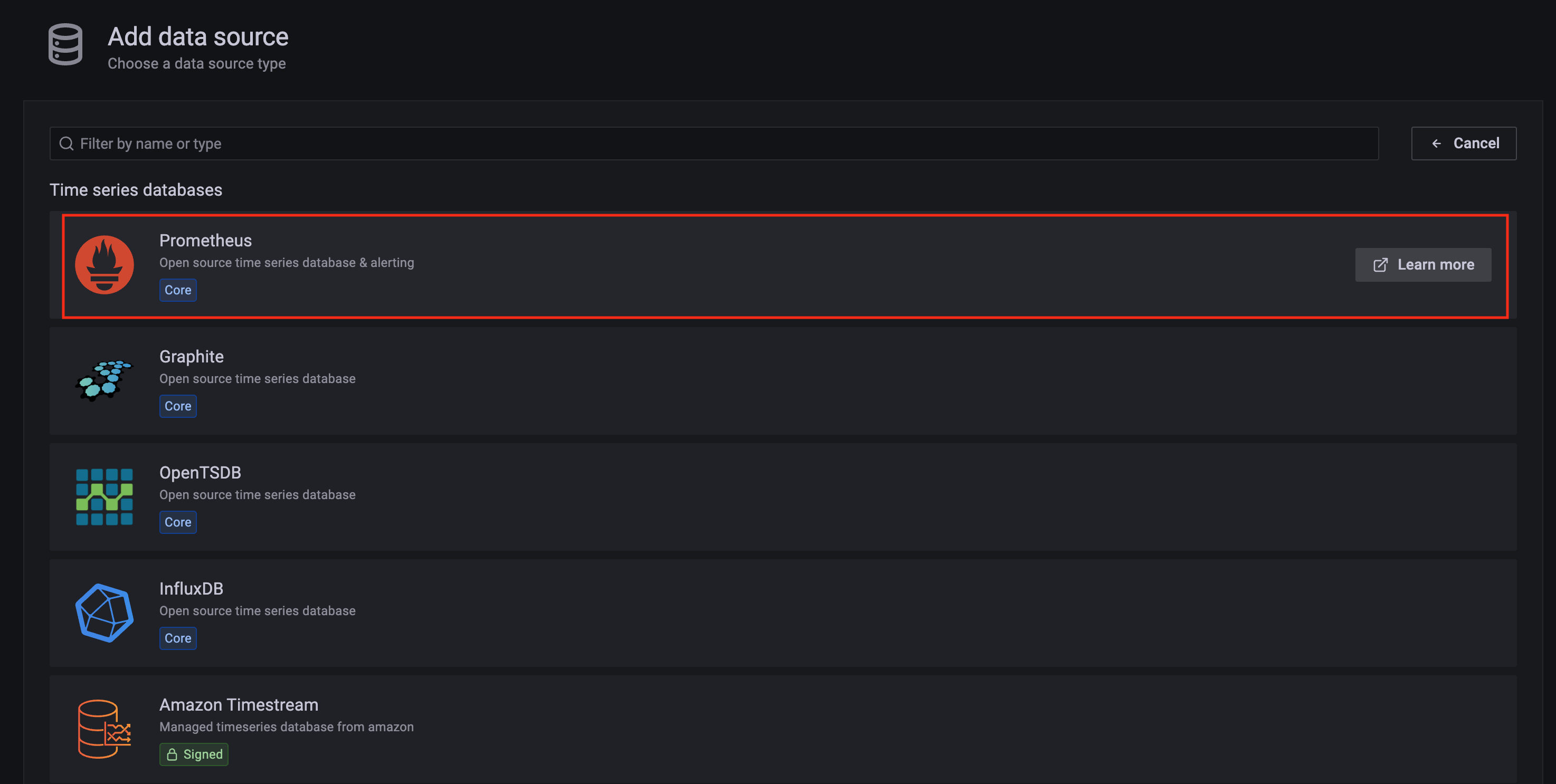

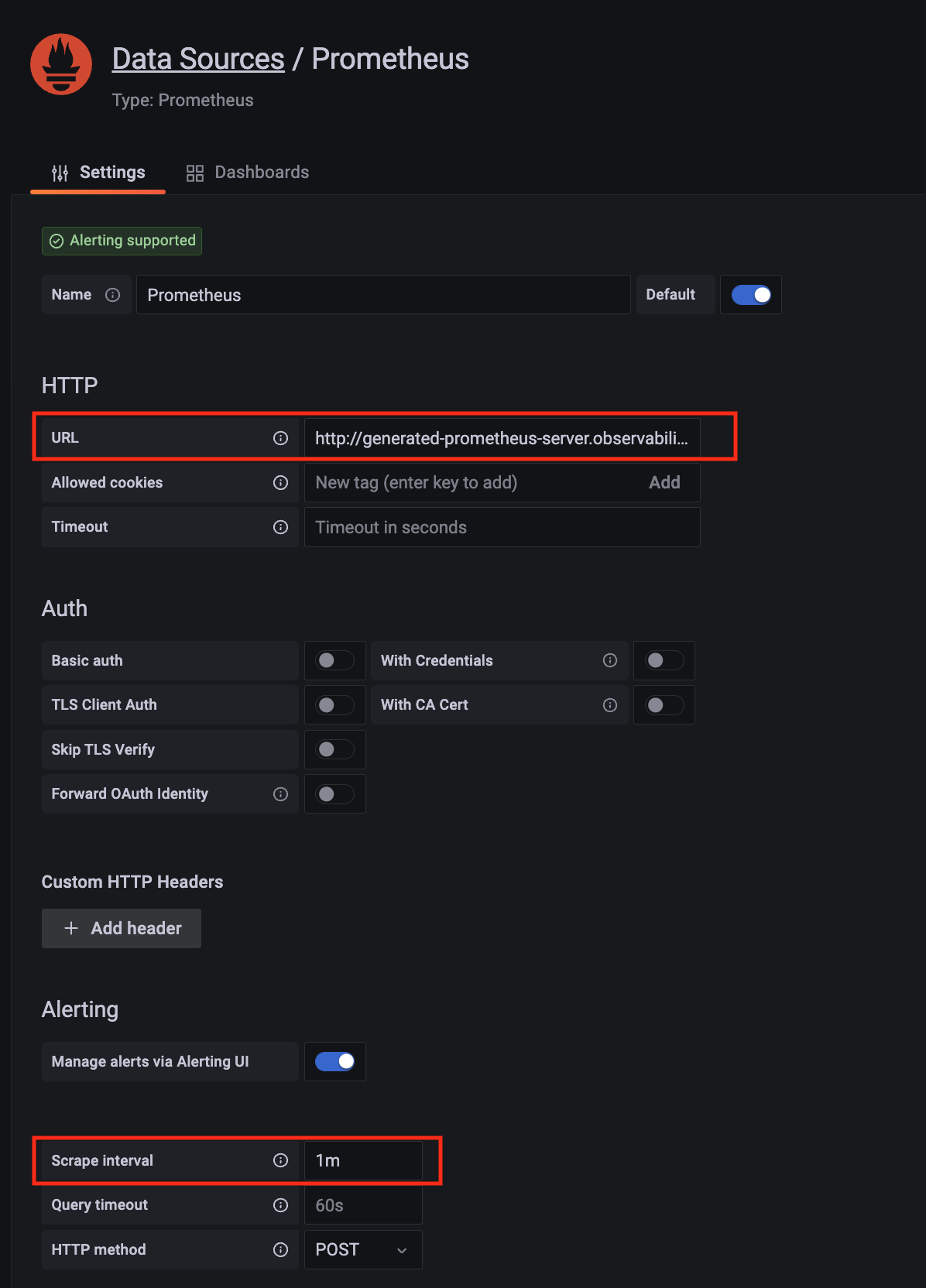

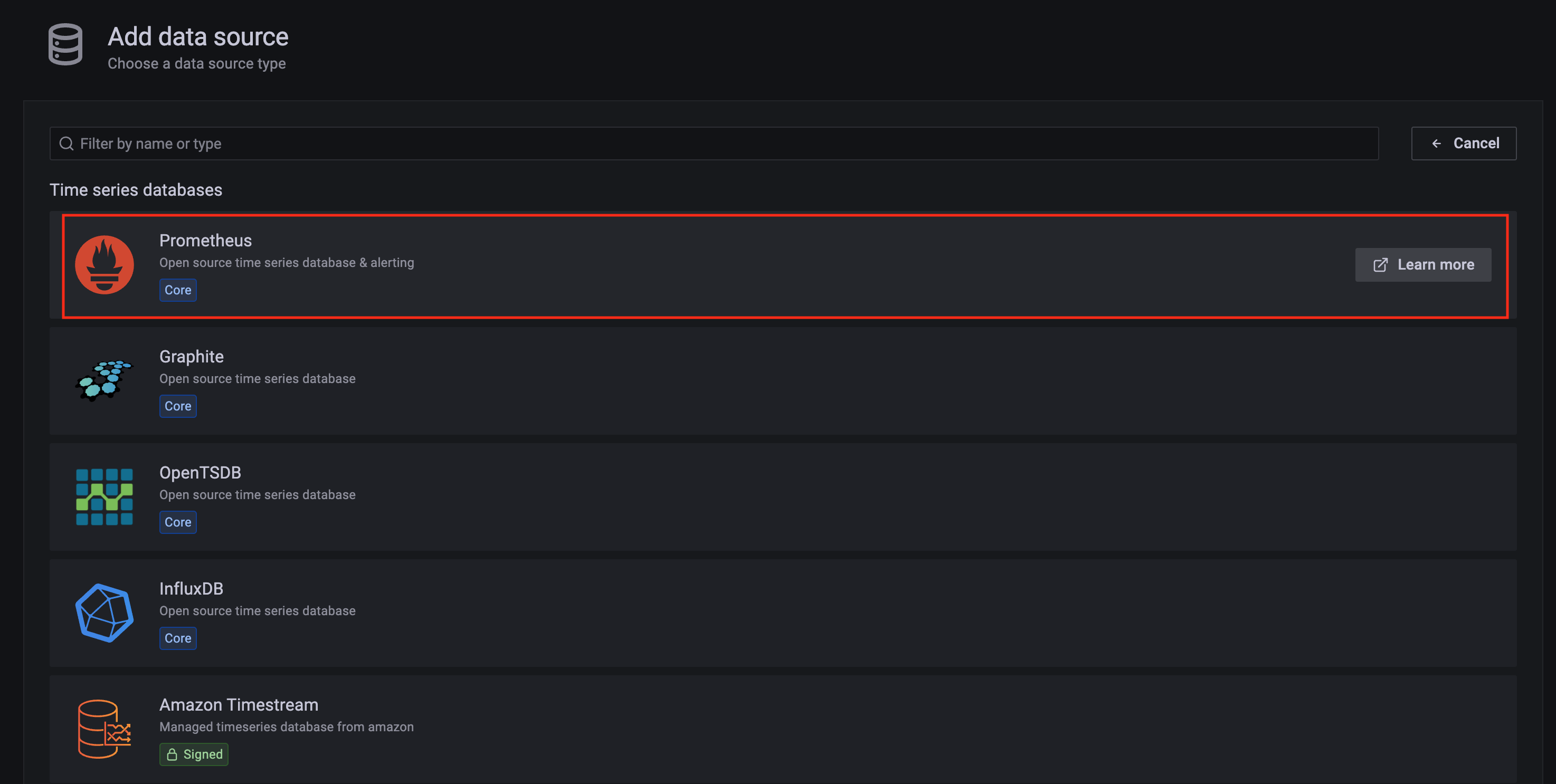

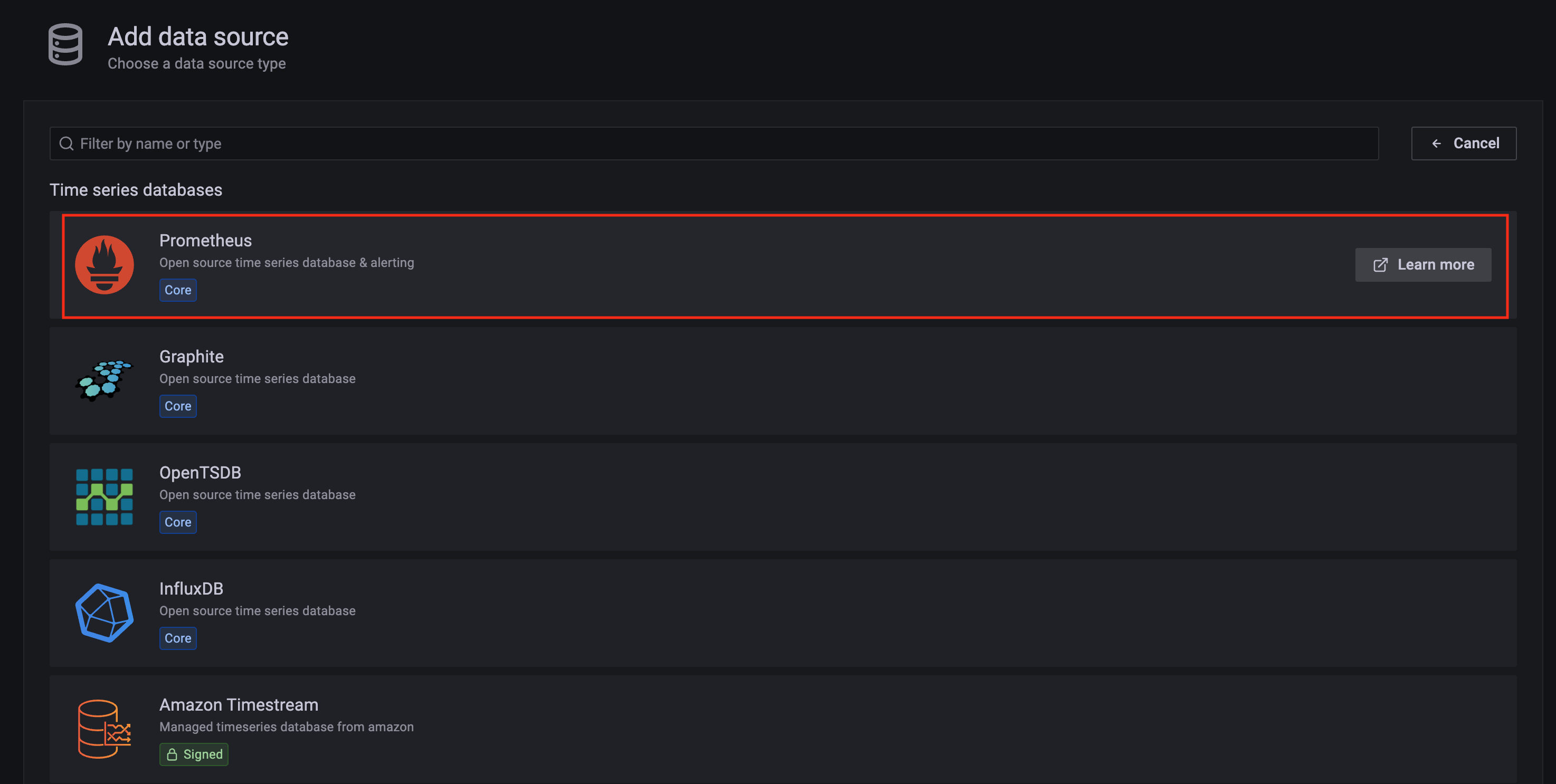

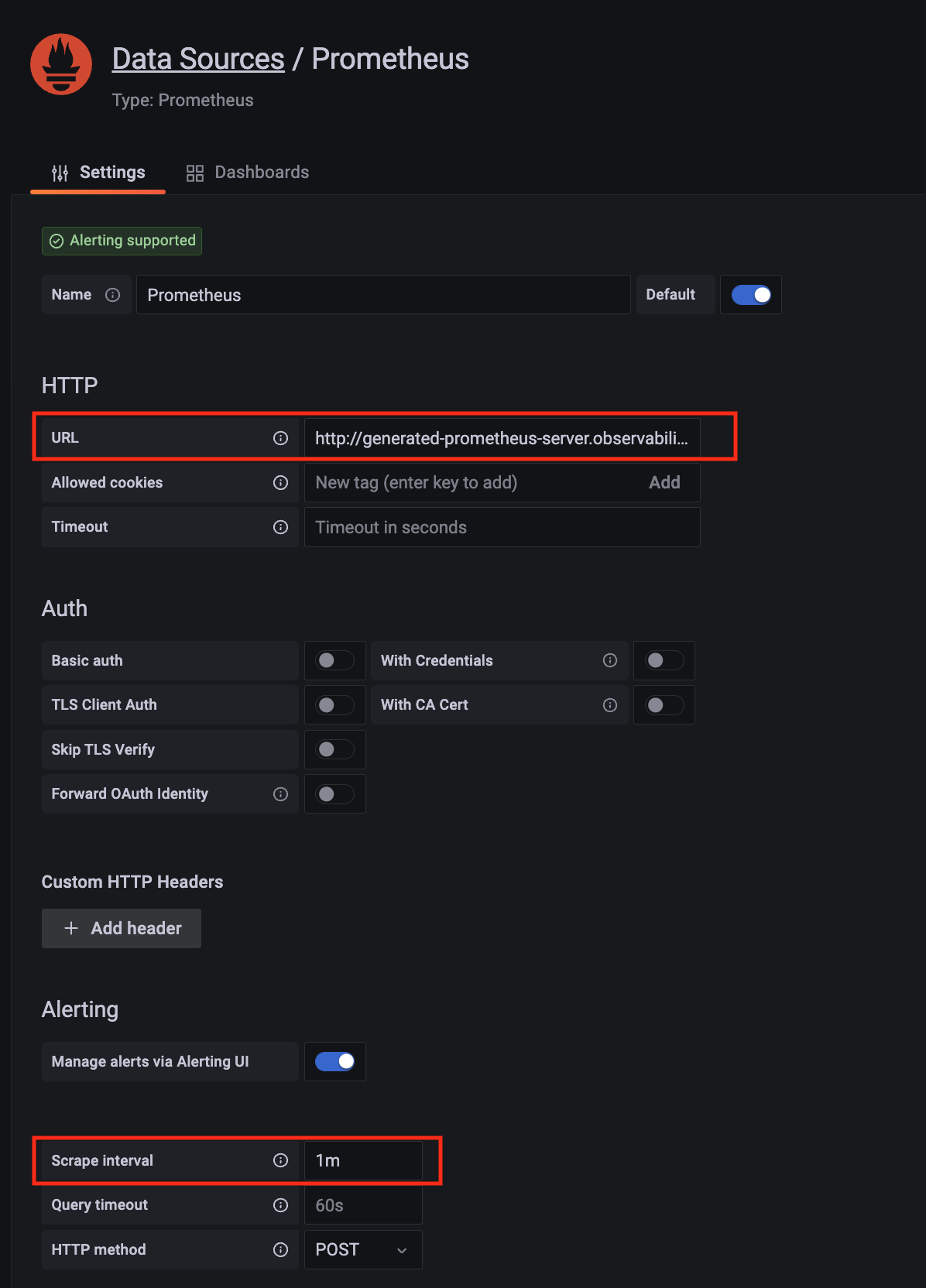

Click on the config sign on the left navigation bar, select Data sources, then choose Prometheus as the Data source.

-

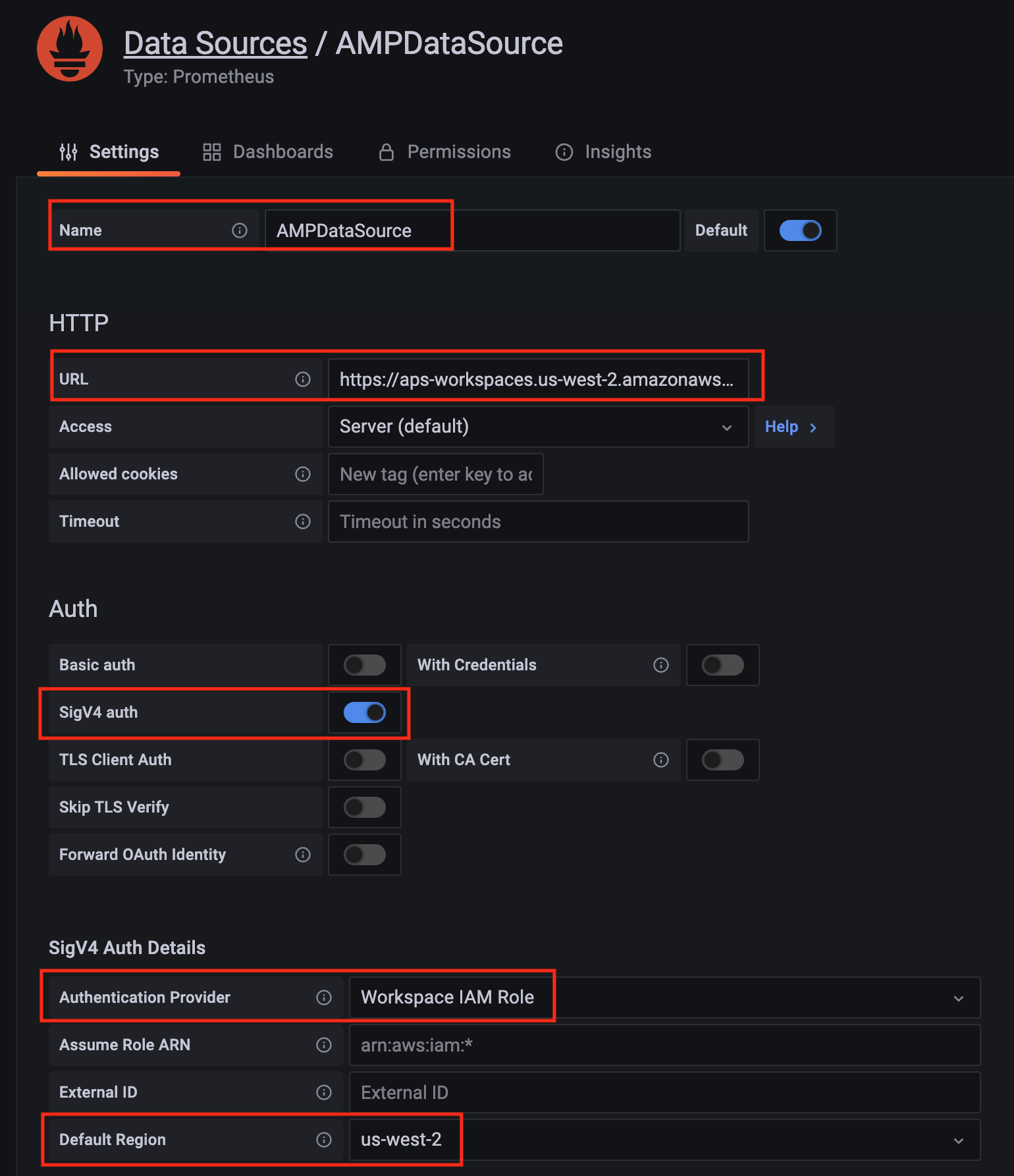

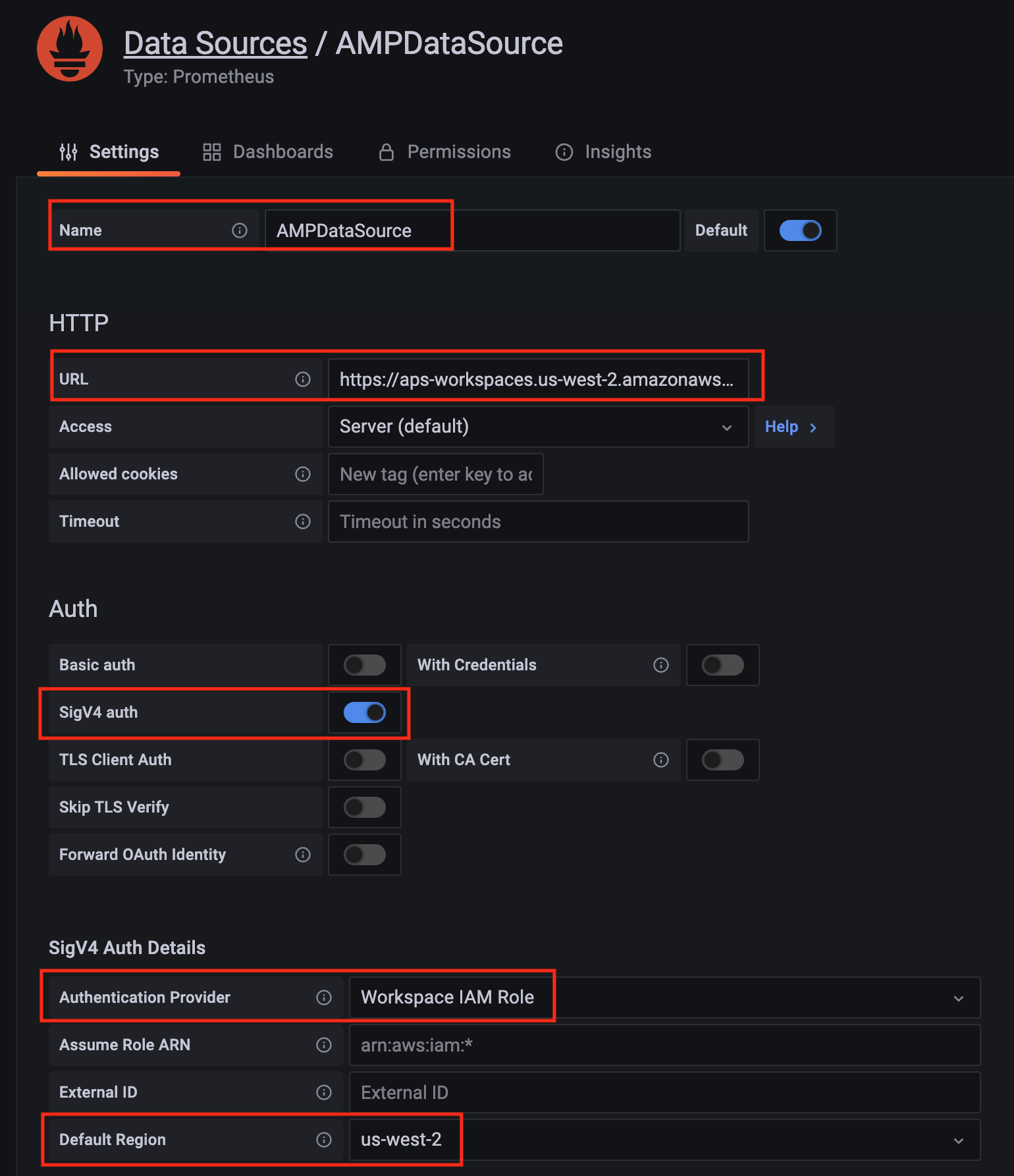

Configure Prometheus data source with the following details:

- Name:

AMPDataSource as an example.

- URL: add the AMP workspace remote write URL without the

api/v1/remote_write at the end.

- SigV4 auth: enable.

- Under the SigV4 Auth Details section:

- Authentication Provider: choose

Workspace IAM Role;

- Default Region: choose

us-west-2 (where you created the AMP workspace)

- Select the

Save and test, and a notification data source is working should be displayed.

-

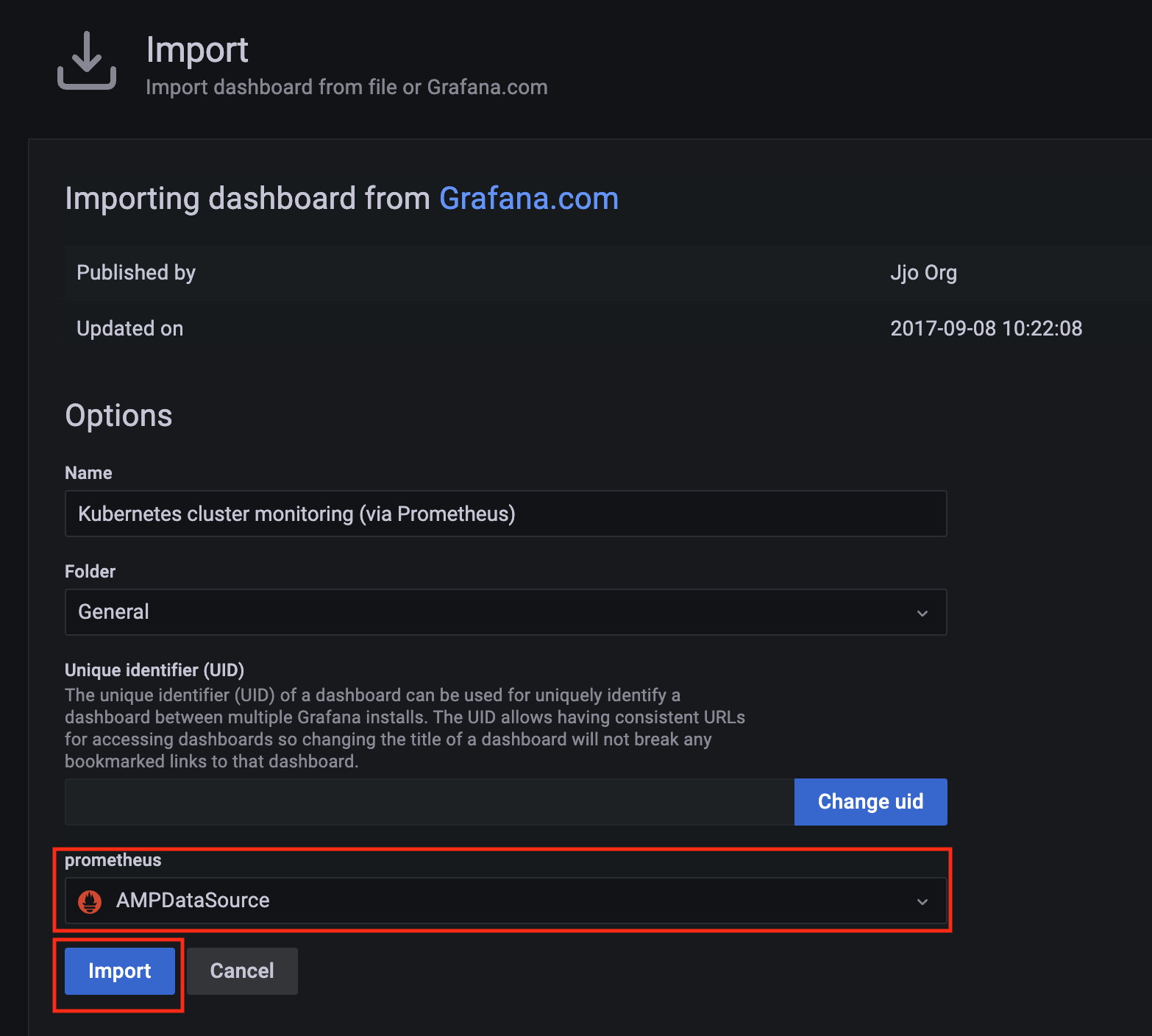

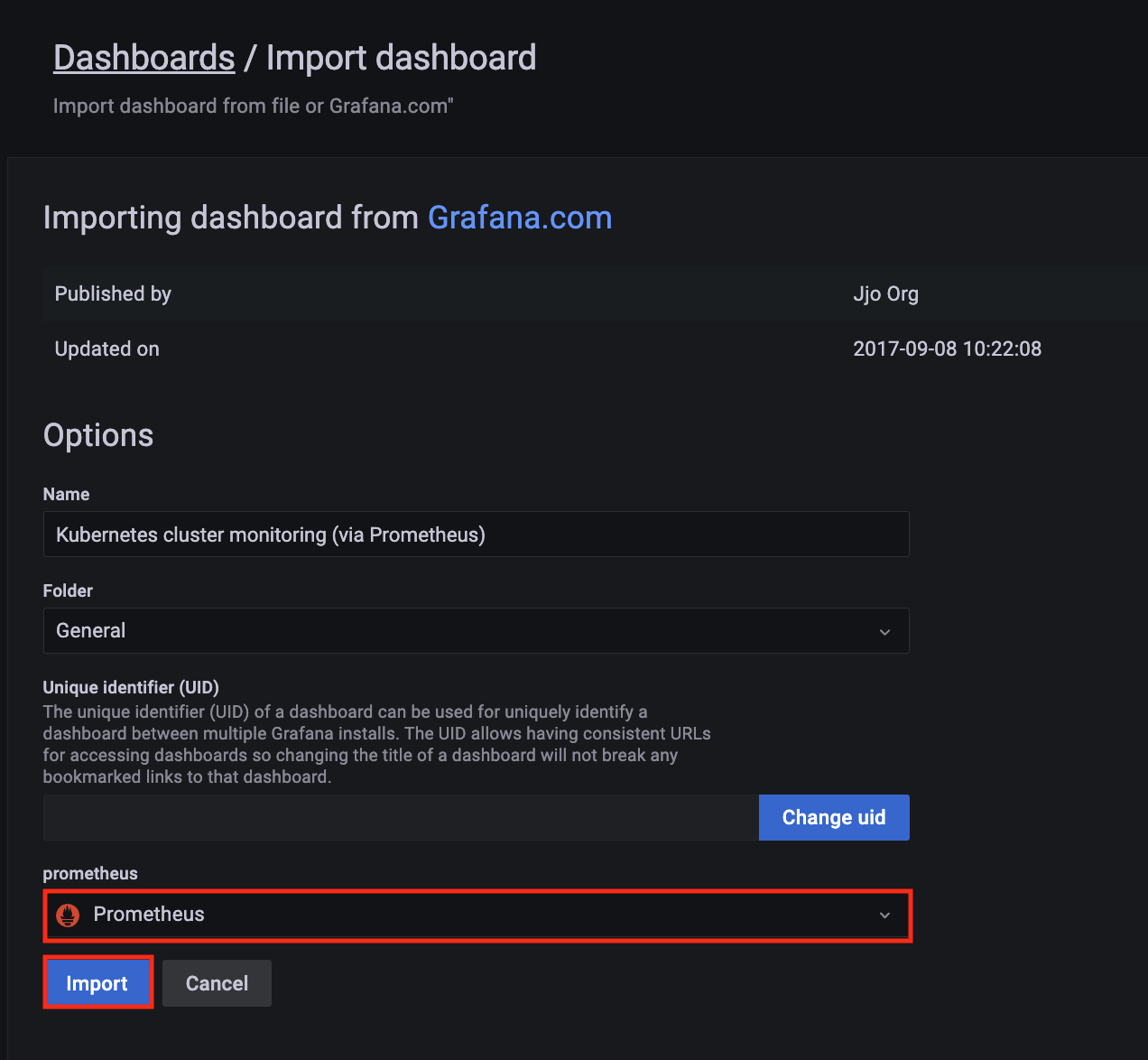

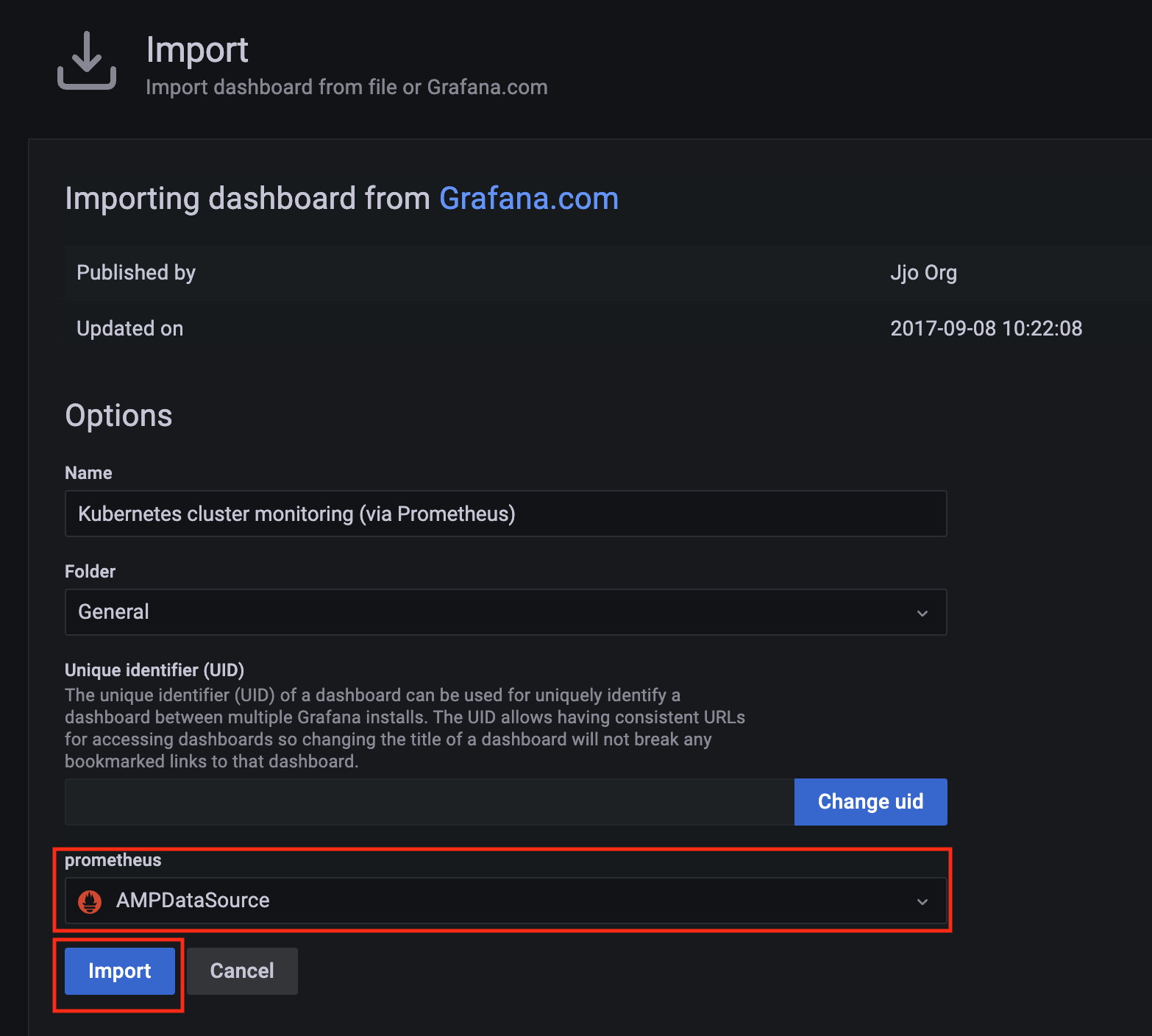

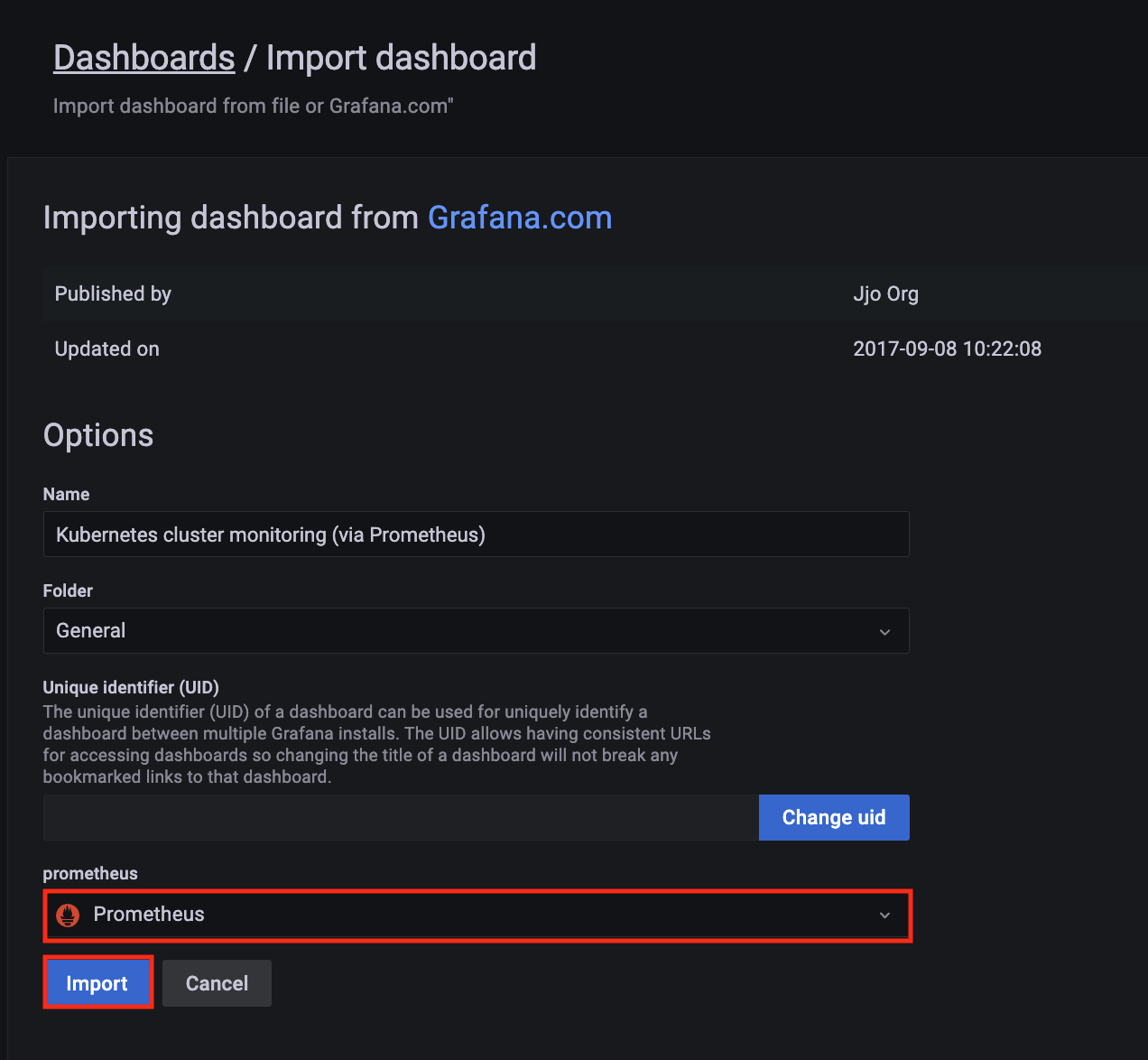

Import a dashboard template by clicking on the plus (+) sign on the left navigation bar. In the Import screen, type 3119 in the Import via grafana.com textbox and select Import.

From the dropdown at the bottom, select AMPDataSource and select Import.

- A Kubernetes cluster monitoring (via Prometheus) dashboard will be displayed.
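The data source URL field expects the AMP workspace URL with the trailing `api/v1/remote_write` removed. The bash sketch below uses parameter expansion to do that trimming. The function name and the workspace ID `ws-example` in the usage are made-up placeholders.

```shell
#!/usr/bin/env bash
# Hypothetical helper: turn an AMP remote write URL into the base URL that the
# Grafana Prometheus data source expects (trailing api/v1/remote_write removed).
amp_datasource_url() {
  local url="${1:?remote write URL required}"
  # ${var%pattern} strips the shortest matching suffix; the URL is printed
  # unchanged if it does not end in api/v1/remote_write.
  printf '%s\n' "${url%api/v1/remote_write}"
}
```

For example, `amp_datasource_url https://aps-workspaces.us-west-2.amazonaws.com/workspaces/ws-example/api/v1/remote_write` prints `https://aps-workspaces.us-west-2.amazonaws.com/workspaces/ws-example/`.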

10.2 - AWS Distro for OpenTelemetry (ADOT)

Install/upgrade/uninstall ADOT

If you have not already done so, make sure your cluster meets the package prerequisites.

Be sure to refer to the troubleshooting guide in the event of a problem.

Important

- Starting with eksctl anywhere version v0.12.0, packages on workload clusters are remotely managed by the management cluster.

- While following this guide to install packages on a workload cluster, make sure the kubeconfig points to the management cluster that was used to create the workload cluster. The only exception is the kubectl create namespace command below, which should be run with the kubeconfig pointing to the workload cluster.
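Most commands in this guide target the management cluster, while namespace creation targets the workload cluster. Passing `--kubeconfig` explicitly helps avoid running a command against the wrong cluster. The bash sketch below assumes the kubeconfig files are in the default location that `eksctl anywhere create cluster` writes to: `<cluster-name>/<cluster-name>-eks-a-cluster.kubeconfig`, relative to the directory where the cluster was created. The helper name, the cluster names, and the `observability` namespace in the commented usage lines are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: path of a cluster's kubeconfig, ASSUMING the default
# <cluster-name>/<cluster-name>-eks-a-cluster.kubeconfig layout.
eksa_kubeconfig() {
  local cluster="${1:?cluster name required}"
  printf '%s/%s-eks-a-cluster.kubeconfig\n' "$cluster" "$cluster"
}

# Example usage (hypothetical cluster names "mgmt" and "w01"):
#   kubectl --kubeconfig "$(eksa_kubeconfig w01)" create namespace observability
#   export KUBECONFIG="$(eksa_kubeconfig mgmt)"   # everything else: management cluster
```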

Install

- Generate the package configuration:

```shell
eksctl anywhere generate package adot --cluster <cluster-name> > adot.yaml
```

- Add the desired configuration to adot.yaml.

See the complete configuration options for all configuration options and their default values.

Example package file with daemonset mode and default configuration:

```yaml
apiVersion: packages.eks.amazonaws.com/v1alpha1
kind: Package
metadata:
  name: my-adot
  namespace: eksa-packages-<cluster-name>
spec:
  packageName: adot
  targetNamespace: observability
  config: |
    mode: daemonset
```
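If you manage several clusters, you can render the daemonset example per cluster so that the `eksa-packages-<cluster-name>` namespace is filled in consistently. The bash sketch below is a convenience wrapper that prints the same manifest with the cluster name substituted. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the daemonset-mode ADOT Package manifest for the
# given cluster, filling in the eksa-packages-<cluster-name> namespace.
render_adot_package() {
  local cluster="${1:?cluster name required}"
  cat <<EOF
apiVersion: packages.eks.amazonaws.com/v1alpha1
kind: Package
metadata:
  name: my-adot
  namespace: eksa-packages-${cluster}
spec:
  packageName: adot
  targetNamespace: observability
  config: |
    mode: daemonset
EOF
}
```

Usage: `render_adot_package my-cluster > adot.yaml`.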

Example package file with deployment mode and customized collector components to scrape the ADOT collector's own metrics:

apiVersion: packages.eks.amazonaws.com/v1alpha1
kind: Package
metadata:
  name: my-adot
  namespace: eksa-packages-<cluster-name>
spec:
  packageName: adot
  targetNamespace: observability
  config: |
    mode: deployment
    replicaCount: 2
    config:
      receivers:
        prometheus:
          config:
            scrape_configs:
              - job_name: opentelemetry-collector
                scrape_interval: 10s
                static_configs:
                  - targets:
                      - ${MY_POD_IP}:8888
      processors:
        batch: {}
        memory_limiter: null
      exporters:
        logging:
          loglevel: debug
        prometheusremotewrite:
          endpoint: "<prometheus-remote-write-end-point>"
      extensions:
        health_check: {}
        memory_ballast: {}
      service:
        pipelines:
          metrics: