
Amazon EKS Anywhere

EKS Anywhere documentation homepage

EKS Anywhere is container management software built by AWS that makes it easier to run and manage Kubernetes clusters on-premises and at the edge. EKS Anywhere is built on EKS Distro, which is the same reliable and secure Kubernetes distribution used by Amazon Elastic Kubernetes Service (EKS) in AWS Cloud. EKS Anywhere simplifies Kubernetes cluster management by automating undifferentiated heavy lifting such as infrastructure setup and Kubernetes cluster lifecycle operations.

Unlike Amazon EKS in AWS Cloud, EKS Anywhere is a user-managed product that runs on user-managed infrastructure. You are responsible for cluster lifecycle operations and maintenance of your EKS Anywhere clusters. EKS Anywhere does not have any strict dependencies on AWS regional services and is a fit for isolated or air-gapped environments.

If you have on-premises or edge environments with reliable connectivity to an AWS Region, consider using EKS Hybrid Nodes or EKS on Outposts to benefit from AWS-managed EKS control planes and a consistent experience with EKS in the AWS Cloud.

The tenets of the EKS Anywhere project are:

- Simple: Make using a Kubernetes distribution simple and boring (reliable and secure).

- Opinionated Modularity: Provide opinionated defaults about the best components to include with Kubernetes, but give customers the ability to swap them out.

- Open: Provide open source tooling backed, validated, and maintained by Amazon.

- Ubiquitous: Enable customers and partners to integrate a Kubernetes distribution in the most common tooling.

- Stand Alone: Provided for use anywhere without AWS dependencies.

- Better with AWS: Enable AWS customers to easily adopt additional AWS services.

1 - Overview

What is EKS Anywhere?

EKS Anywhere is container management software built by AWS that makes it easier to run and manage Kubernetes clusters on-premises and at the edge. EKS Anywhere is built on EKS Distro, which is the same reliable and secure Kubernetes distribution used by Amazon Elastic Kubernetes Service (EKS) in AWS Cloud. EKS Anywhere simplifies Kubernetes cluster management by automating undifferentiated heavy lifting such as infrastructure setup and Kubernetes cluster lifecycle operations.

Unlike Amazon EKS in AWS Cloud, EKS Anywhere is a user-managed product that runs on user-managed infrastructure. You are responsible for cluster lifecycle operations and maintenance of your EKS Anywhere clusters. EKS Anywhere is open source and free to use. You can optionally purchase EKS Anywhere Enterprise Subscriptions to receive support for your EKS Anywhere clusters, access to EKS Anywhere Curated Packages, and extended support for Kubernetes versions.

EKS Anywhere Curated Packages are software packages that are built, tested, and supported by AWS and extend the core functionalities of Kubernetes on your EKS Anywhere clusters.

EKS Anywhere supports many different types of infrastructure including VMWare vSphere, Bare Metal, Nutanix, Apache CloudStack, and AWS Snow. You can run EKS Anywhere without a connection to AWS Cloud and in air-gapped environments, or you can optionally connect to AWS Cloud to integrate with other AWS services. You can use the EKS Connector

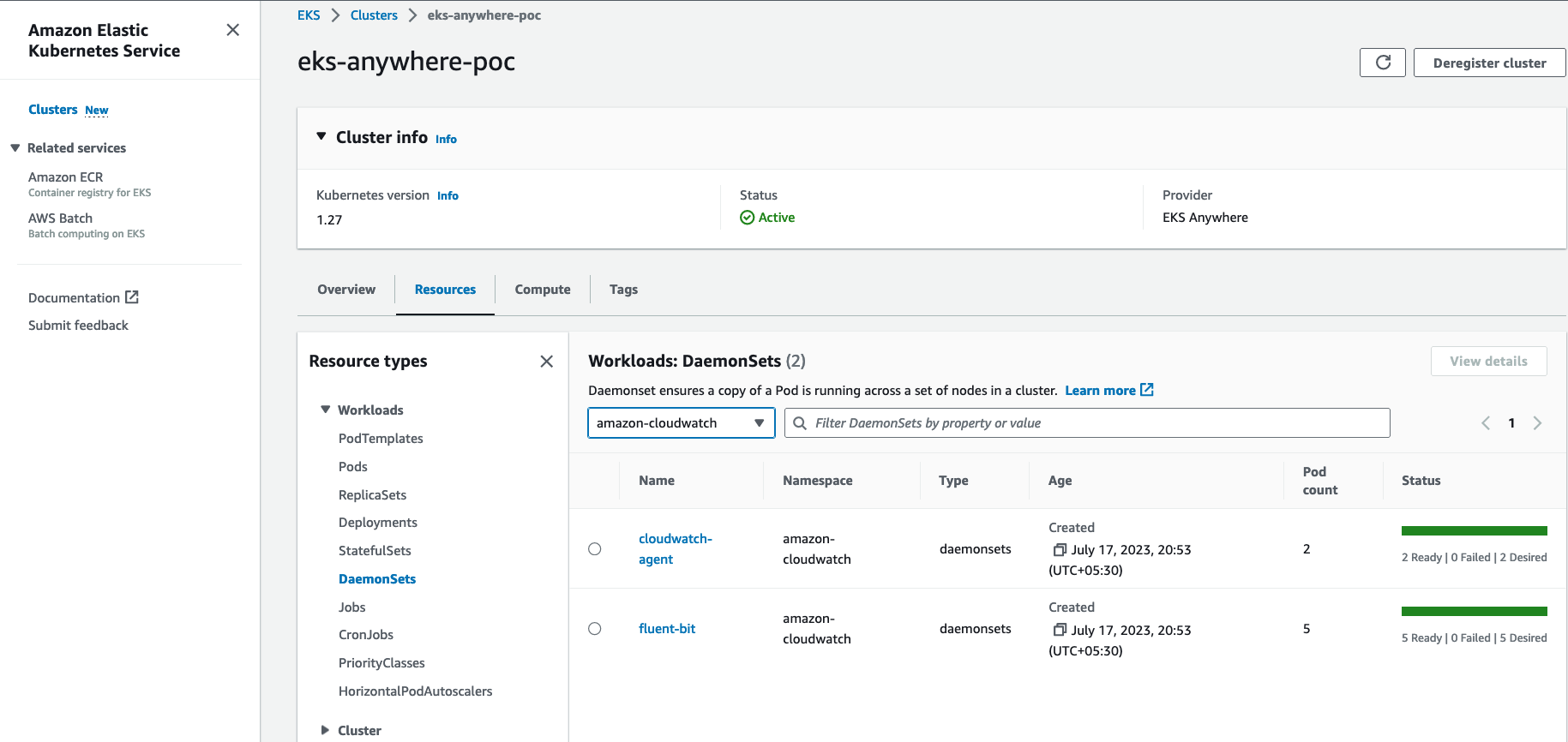

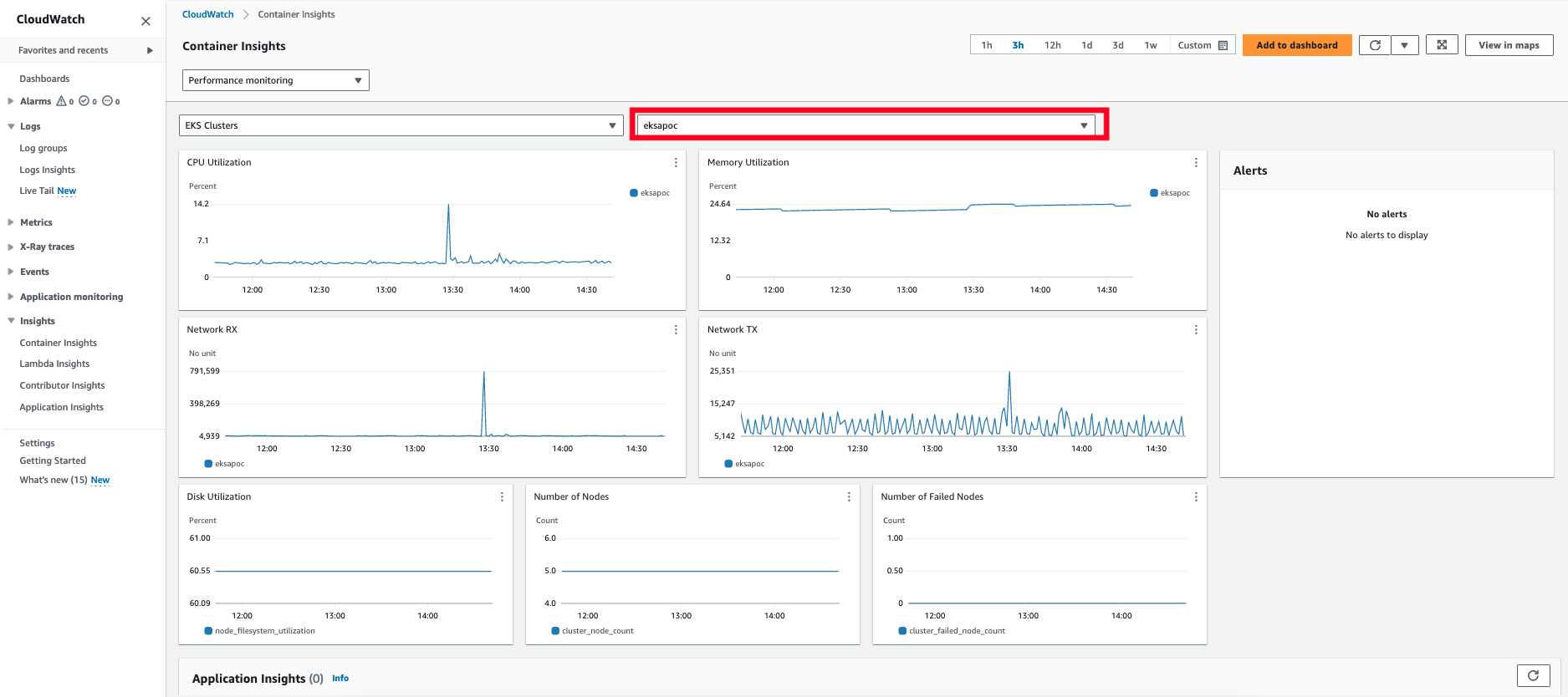

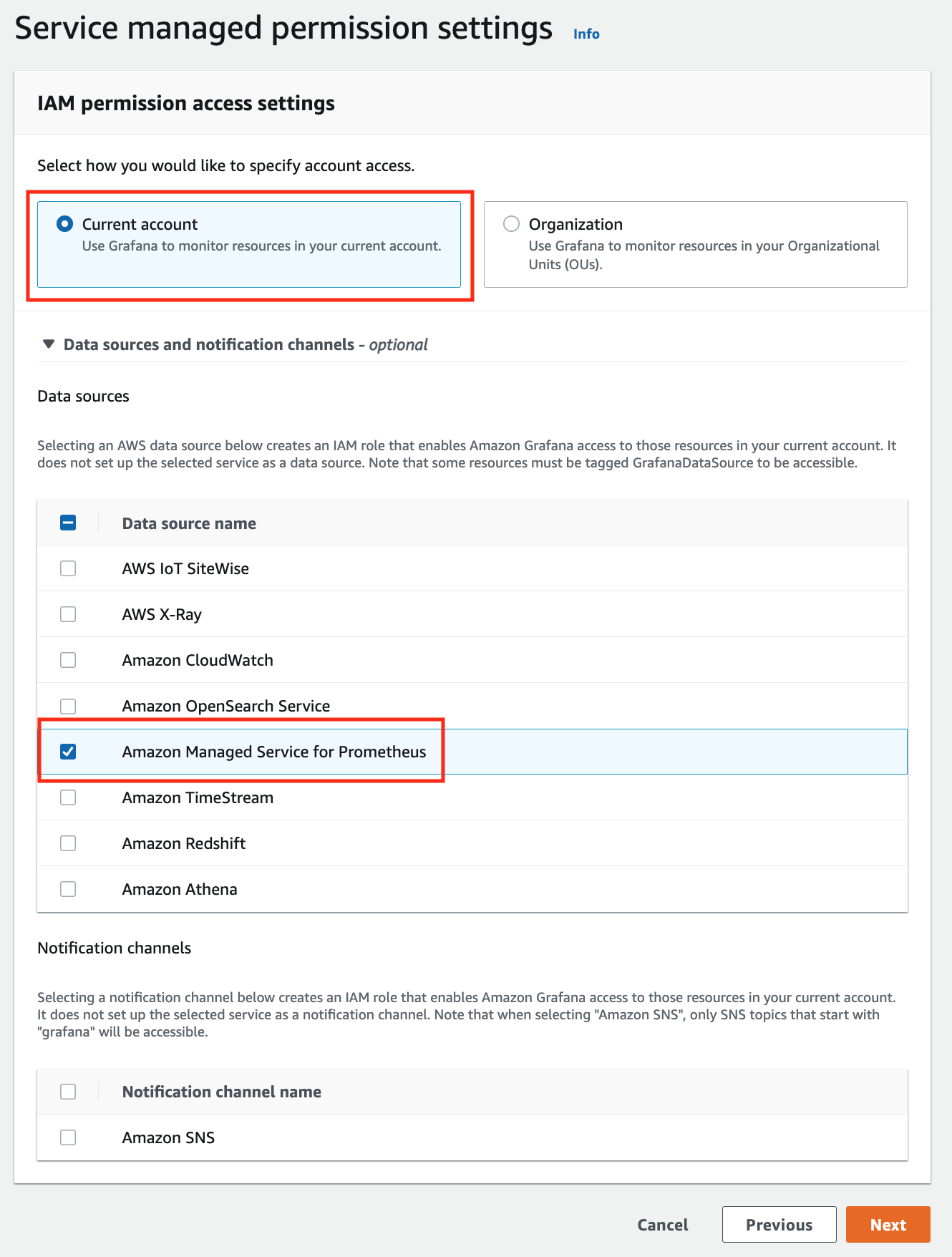

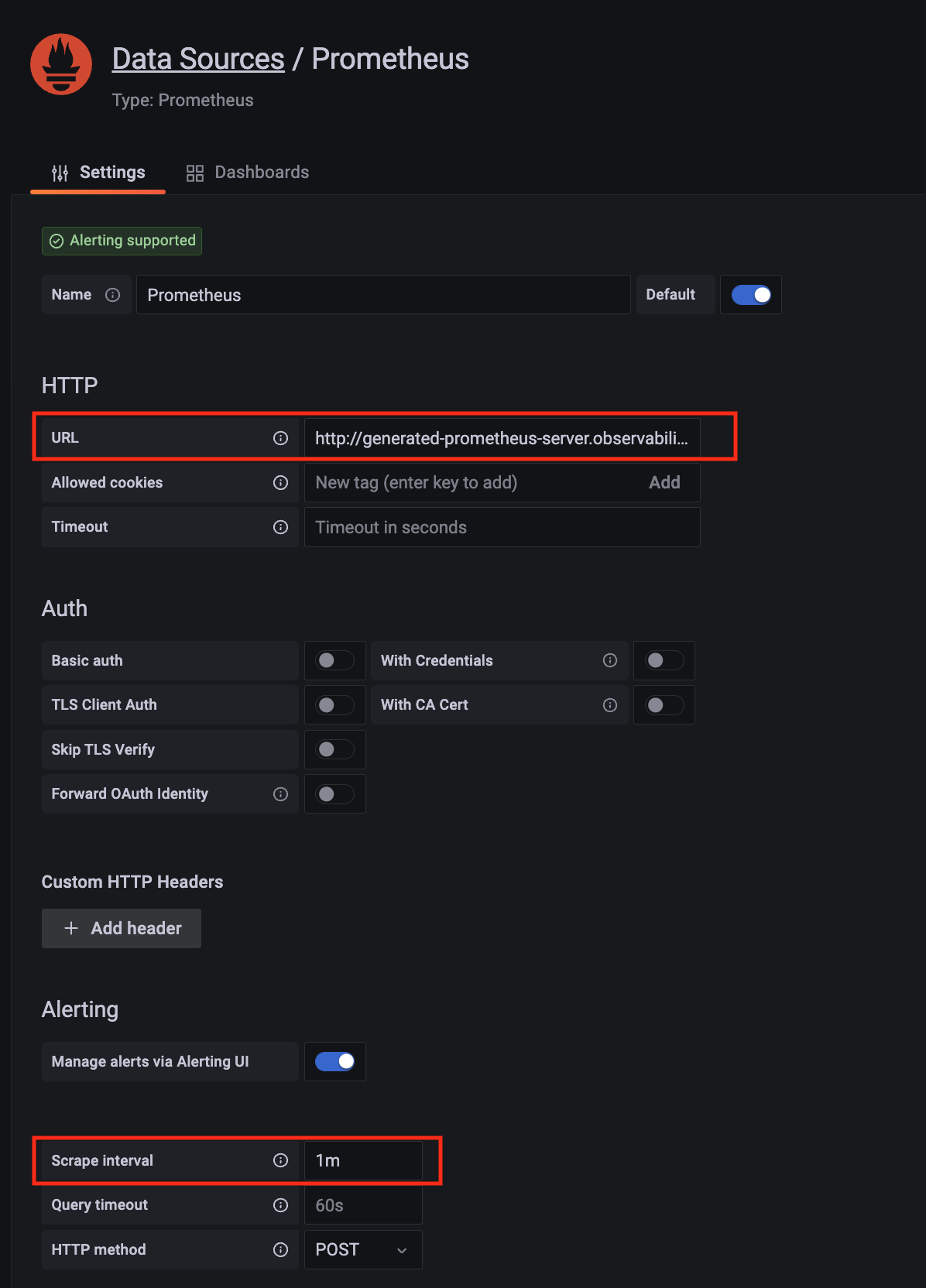

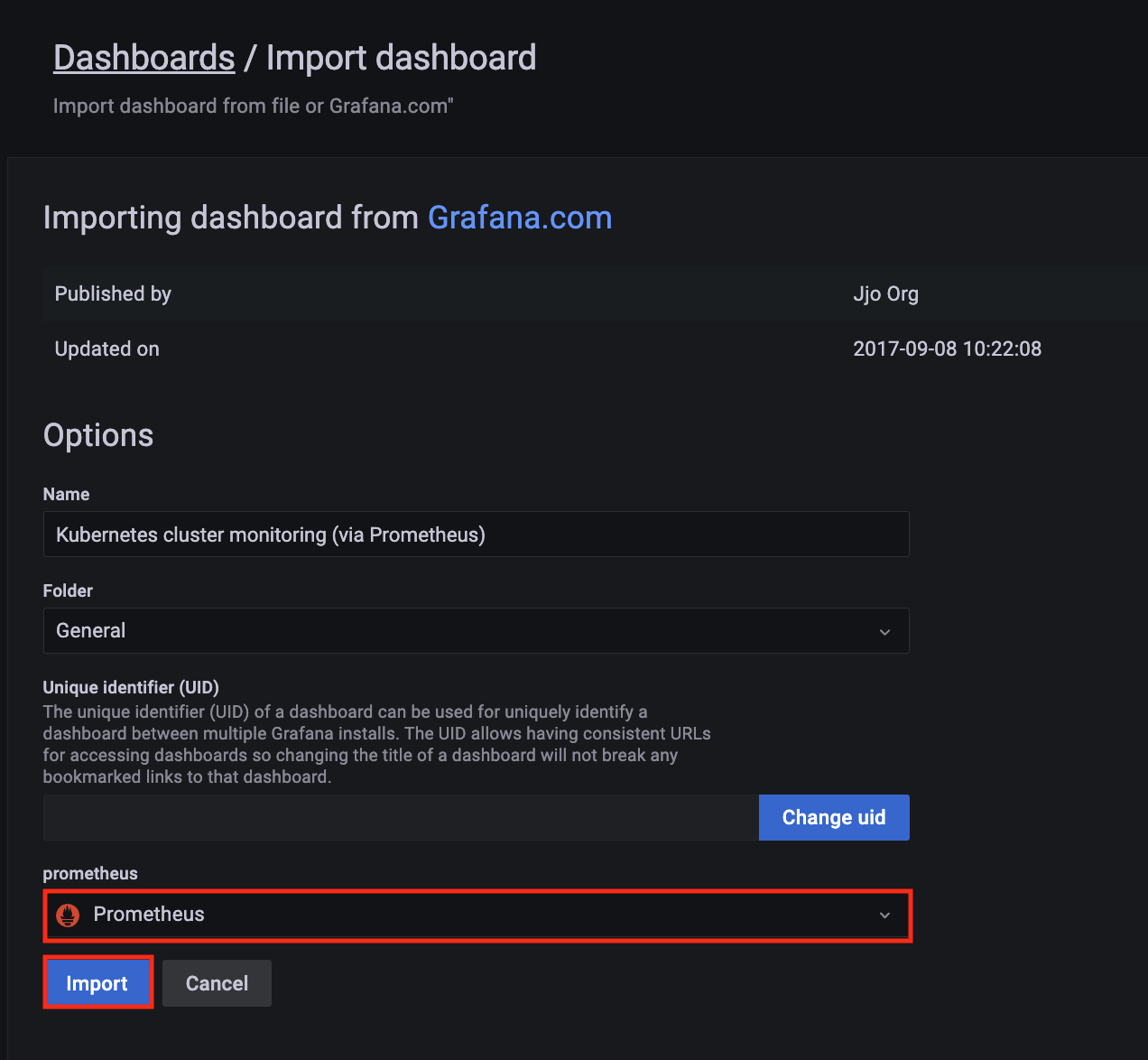

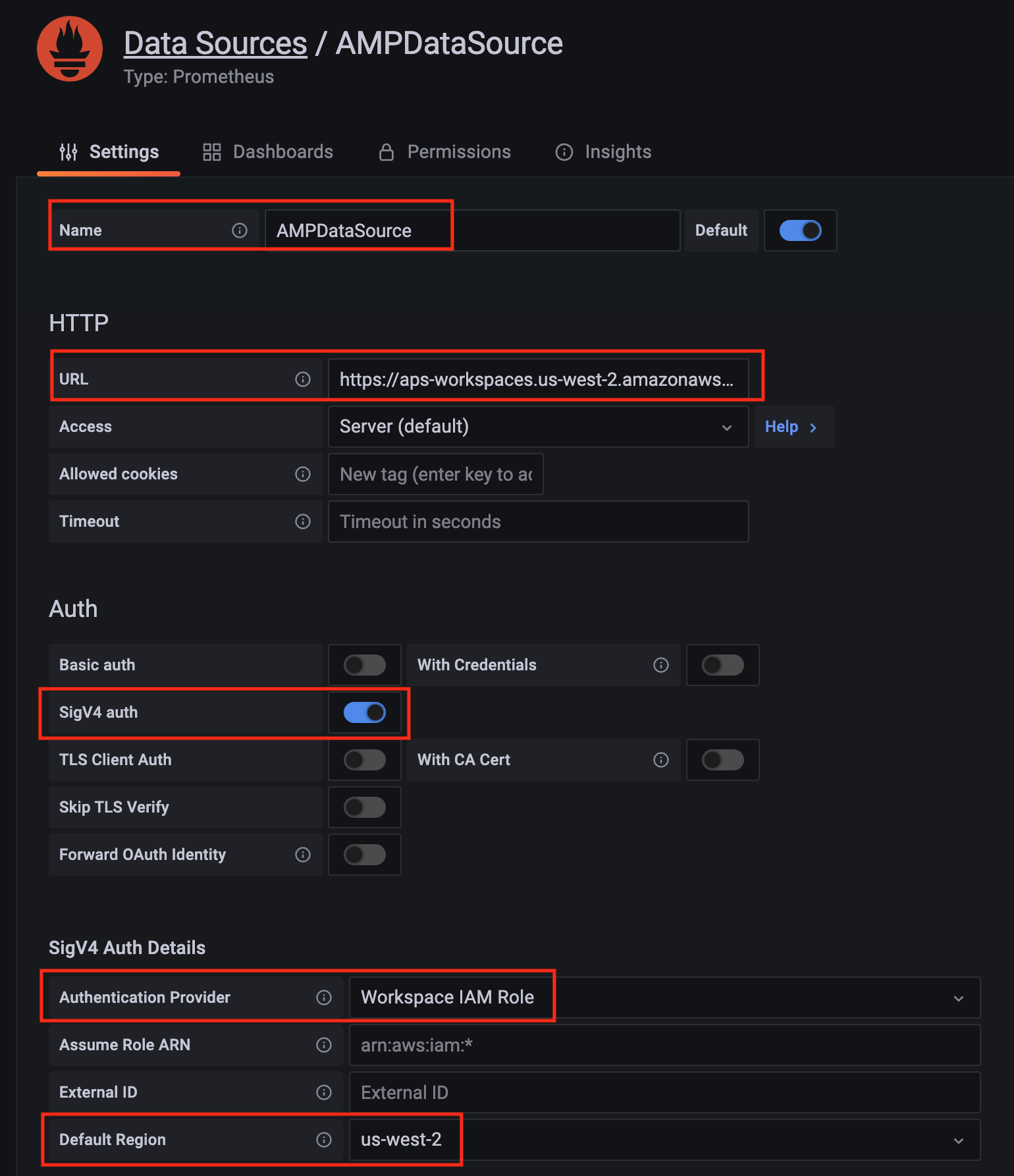

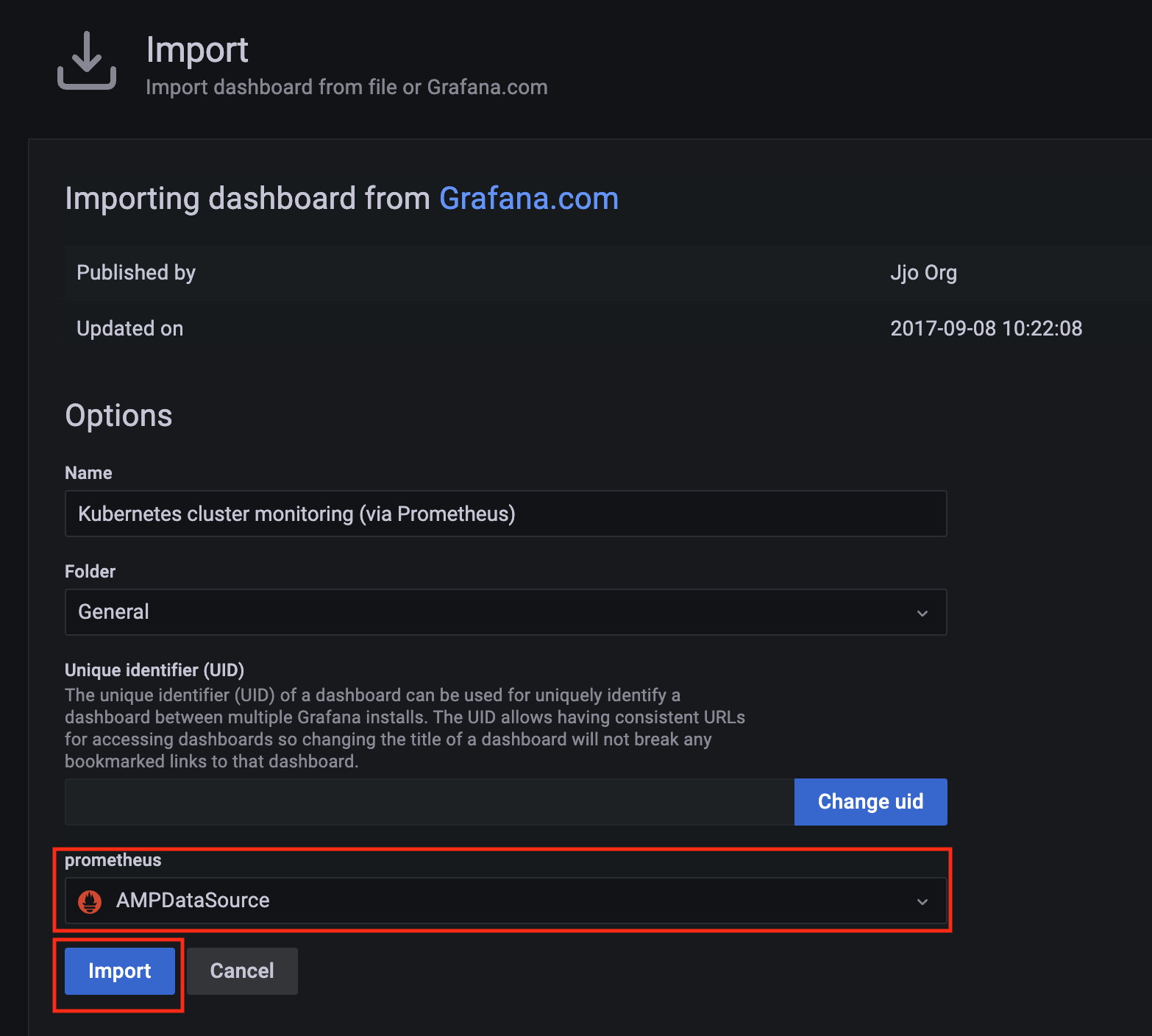

to view your EKS Anywhere clusters in the Amazon EKS console, AWS IAM to authenticate to your EKS Anywhere clusters, IAM Roles for Service Accounts (IRSA) to authenticate Pods with other AWS services, and AWS Distro for OpenTelemetry to send metrics to Amazon Managed Prometheus for monitoring cluster resources.

If you have on-premises or edge environments with reliable connectivity to an AWS Region, consider using EKS Hybrid Nodes or EKS on Outposts to benefit from the AWS-managed EKS control plane and a consistent experience with EKS in AWS Cloud.

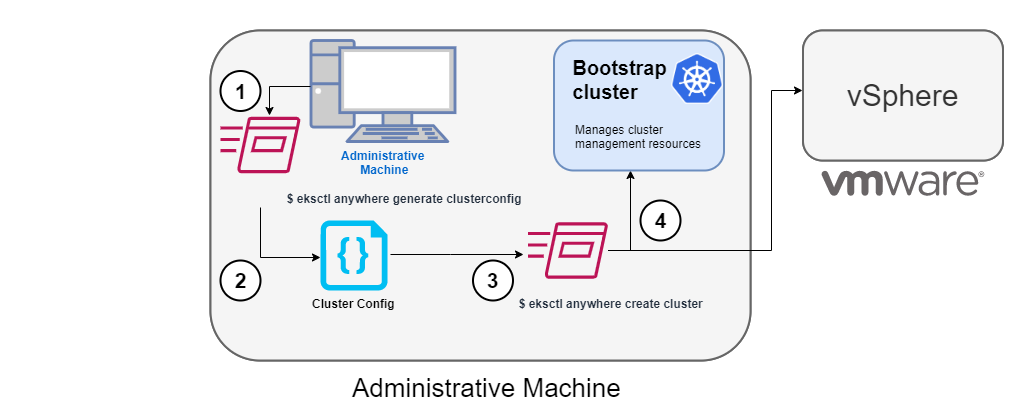

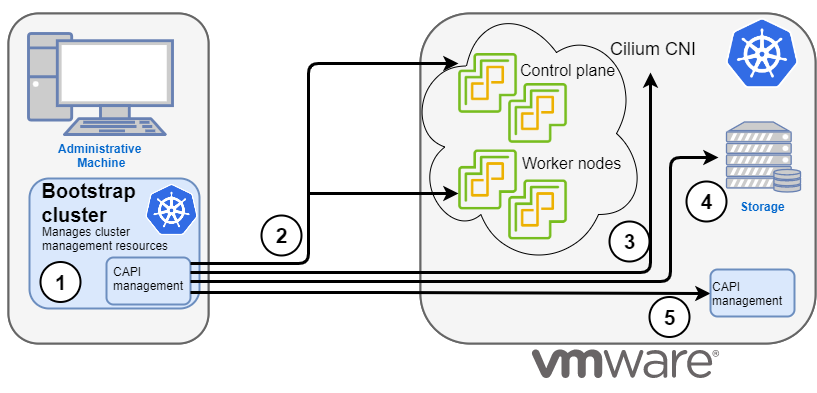

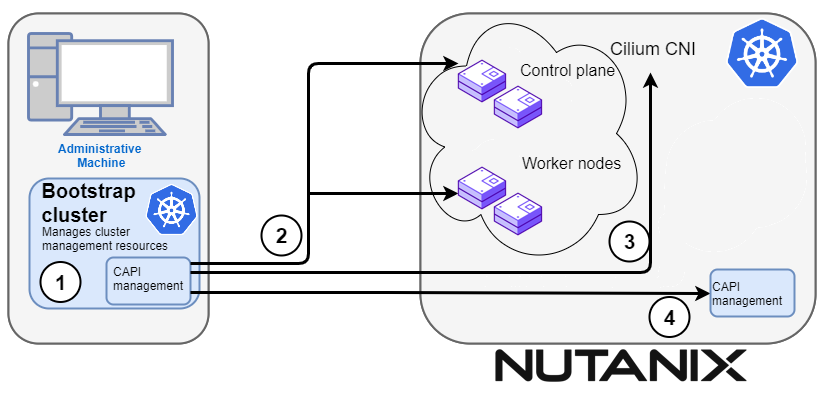

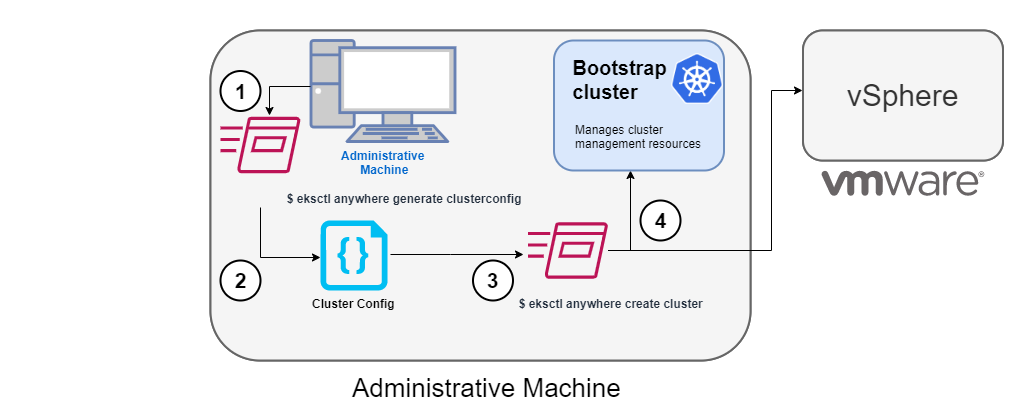

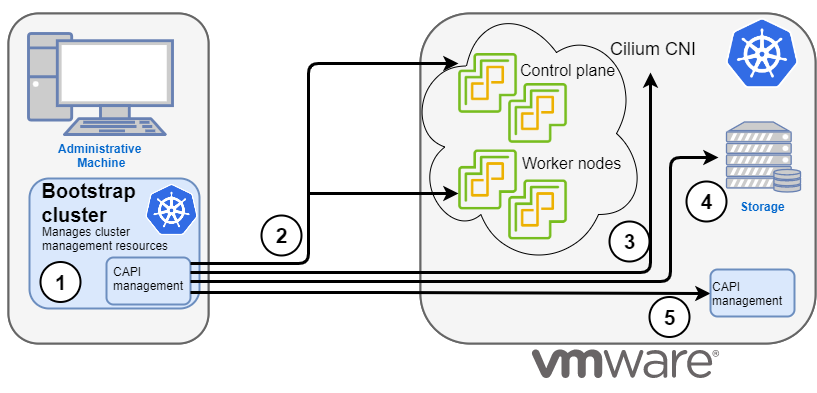

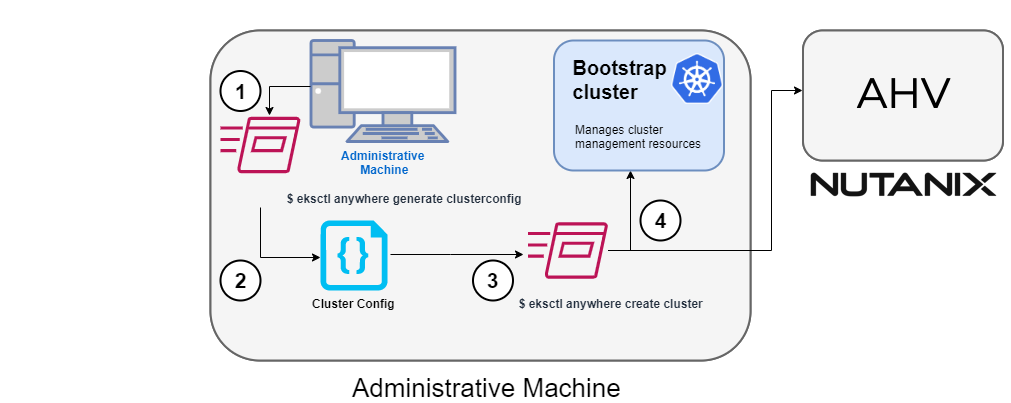

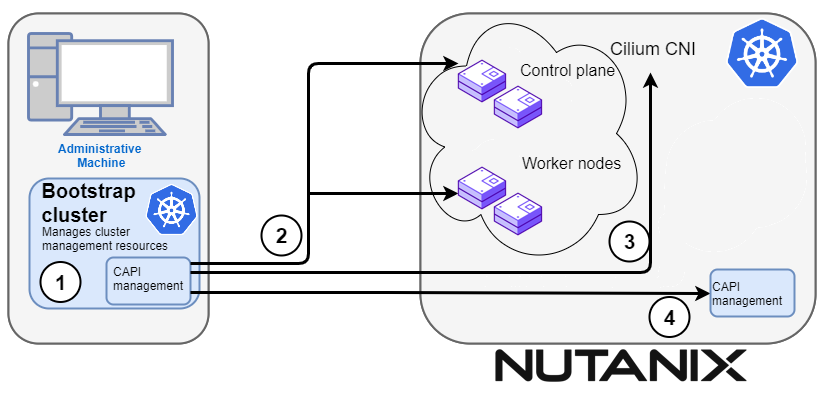

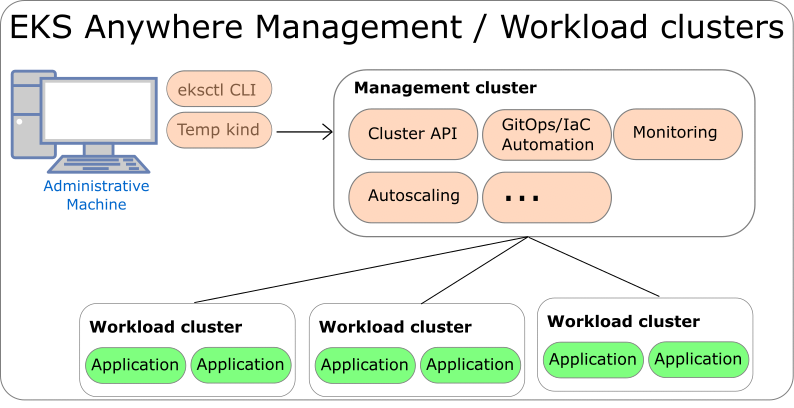

EKS Anywhere is built on the Kubernetes sub-project called Cluster API (CAPI), which is focused on providing declarative APIs and tooling to simplify the provisioning, upgrading, and operating of multiple Kubernetes clusters. While EKS Anywhere simplifies and abstracts the CAPI primitives, it is useful to understand the basics of CAPI when using EKS Anywhere.

Why EKS Anywhere?

- Simplify and automate Kubernetes management on-premises

- Unify Kubernetes distribution and support across on-premises, edge, and cloud environments

- Run in isolated or air-gapped on-premises environments

- Adopt modern operational practices and tools on-premises

- Build on open source standards

Common Use Cases

- Modernize on-premises applications from virtual machines to containers

- Internal development platforms to standardize how teams consume Kubernetes across the organization

- Telco 5G Radio Access Networks (RAN) and Core workloads

- Regulated services in private data centers on-premises

What’s Next?

1.1 - Frequently Asked Questions

Frequently asked questions about EKS Anywhere

General

Where can I deploy EKS Anywhere?

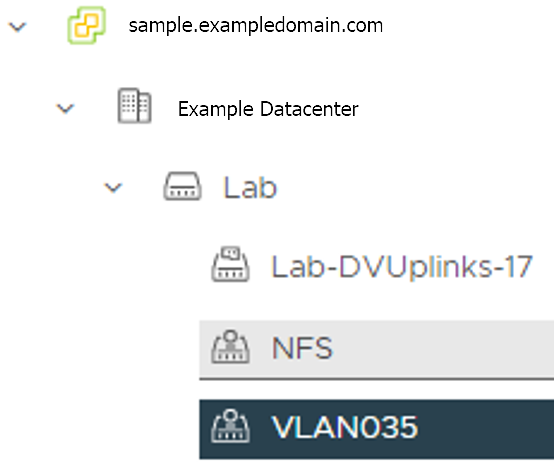

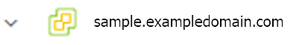

EKS Anywhere is designed to run on user-managed infrastructure in user-managed environments. EKS Anywhere supports different types of infrastructure including VMware vSphere, bare metal, Nutanix, AWS Snowball Edge, and Apache CloudStack.

Can I run EKS Anywhere in the cloud?

No, EKS Anywhere is not supported to run in AWS or other clouds, and EC2 instances cannot be used as the infrastructure for EKS Anywhere clusters. This includes EC2 instances running in AWS Regions, Local Zones, and Outposts.

What operating systems can I use with EKS Anywhere?

EKS Anywhere provides Bottlerocket, a Linux-based container-native operating system built by AWS, as the default node operating system for clusters on VMware vSphere. You can alternatively use Ubuntu and Red Hat Enterprise Linux (RHEL) as the node operating system. You can only use a single node operating system per cluster. Bottlerocket is the only operating system distributed and fully supported by AWS. If you are using the other operating systems, you must build the operating system images and configure EKS Anywhere to use the images you built when installing or updating clusters. AWS will assist with troubleshooting and configuration guidance for Ubuntu and RHEL as part of EKS Anywhere Enterprise Subscriptions. For official support for Ubuntu and RHEL operating systems, you must purchase support through their respective vendors.
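Because a cluster can use only one node operating system, a pre-flight check can confirm that every machine config in a spec names the same OS. This is a hypothetical sketch, not part of the EKS Anywhere CLI: it assumes the machine configs have already been parsed into Python dicts and that each exposes its OS under `spec.osFamily` (EKS Anywhere machine configs use values such as `bottlerocket`, `ubuntu`, and `redhat`; verify the field for your provider).

```python
def cluster_os_family(machine_configs: list[dict]) -> str:
    """Return the one osFamily shared by every machine config of a cluster.

    Hypothetical helper: raises ValueError when the list is empty or the
    configs mix operating systems, because a cluster may use only one node OS.
    """
    families = {cfg["spec"]["osFamily"] for cfg in machine_configs}
    if len(families) != 1:
        raise ValueError(f"expected exactly one osFamily, found {sorted(families)}")
    return families.pop()


control_plane = {"kind": "VSphereMachineConfig", "spec": {"osFamily": "bottlerocket"}}
workers = {"kind": "VSphereMachineConfig", "spec": {"osFamily": "bottlerocket"}}
print(cluster_os_family([control_plane, workers]))  # bottlerocket
```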

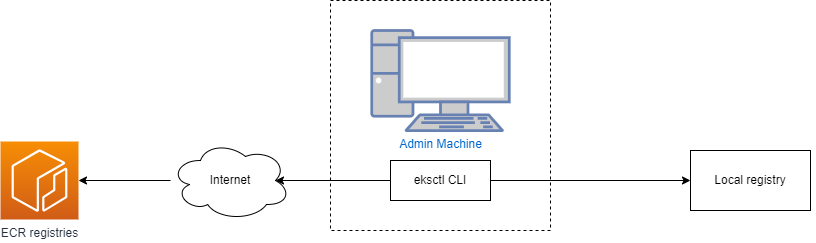

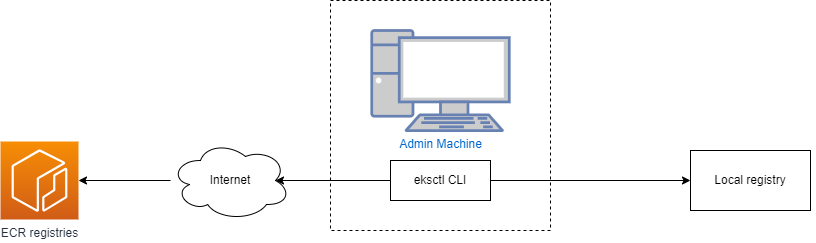

Does EKS Anywhere require a connection to AWS?

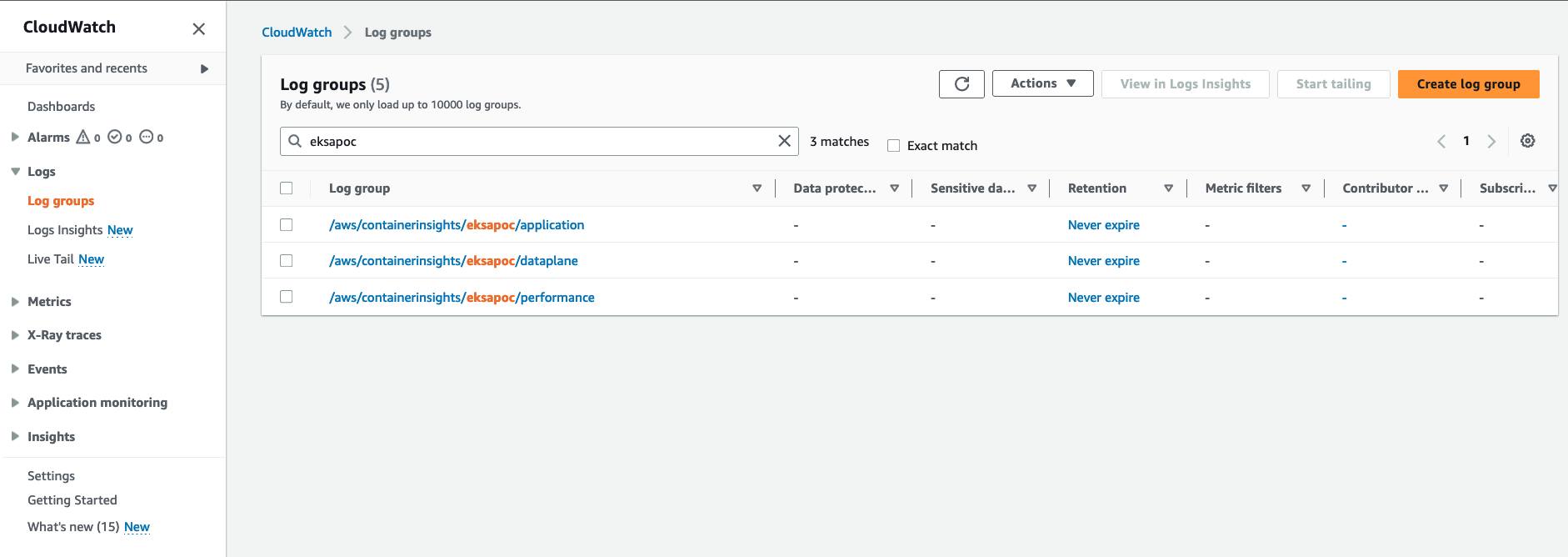

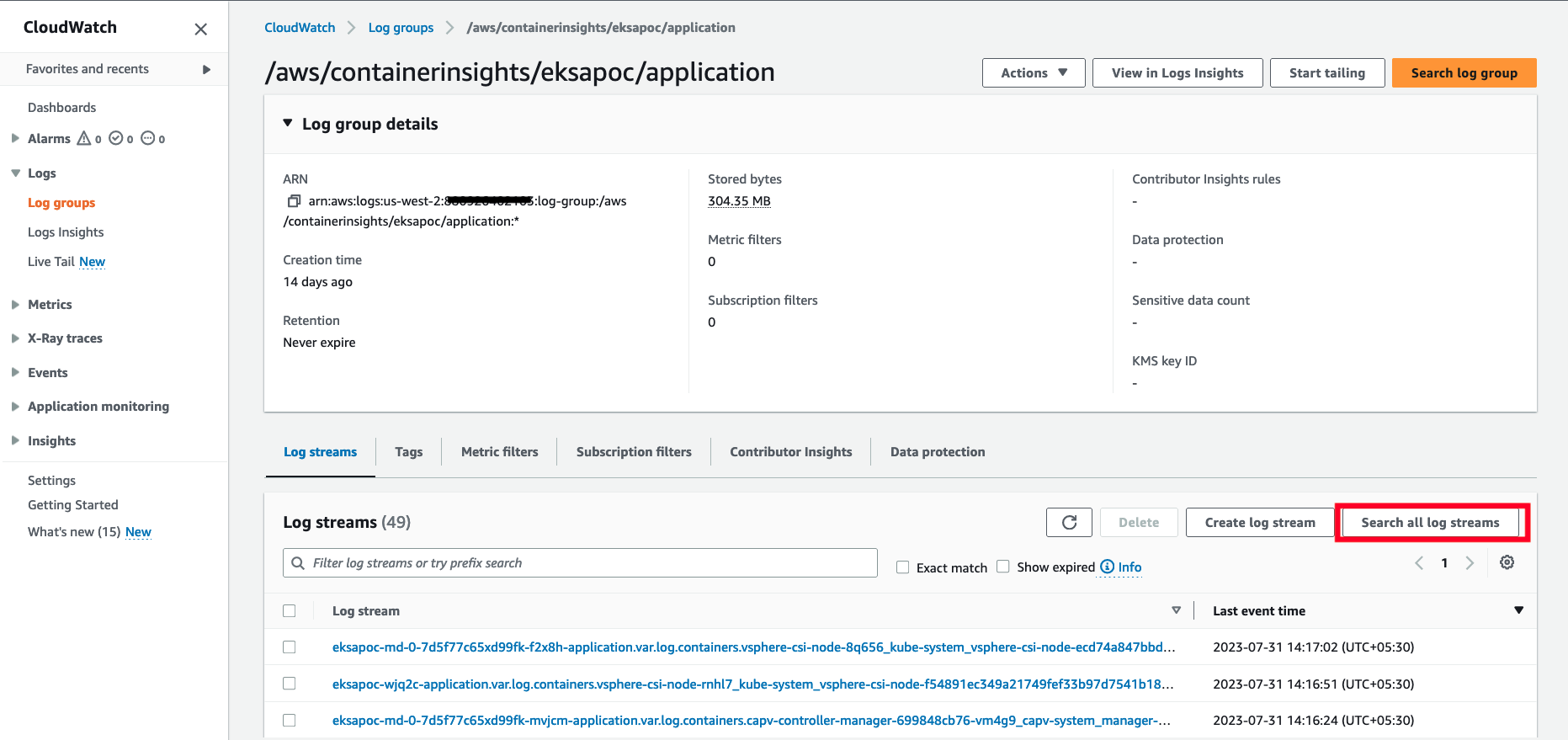

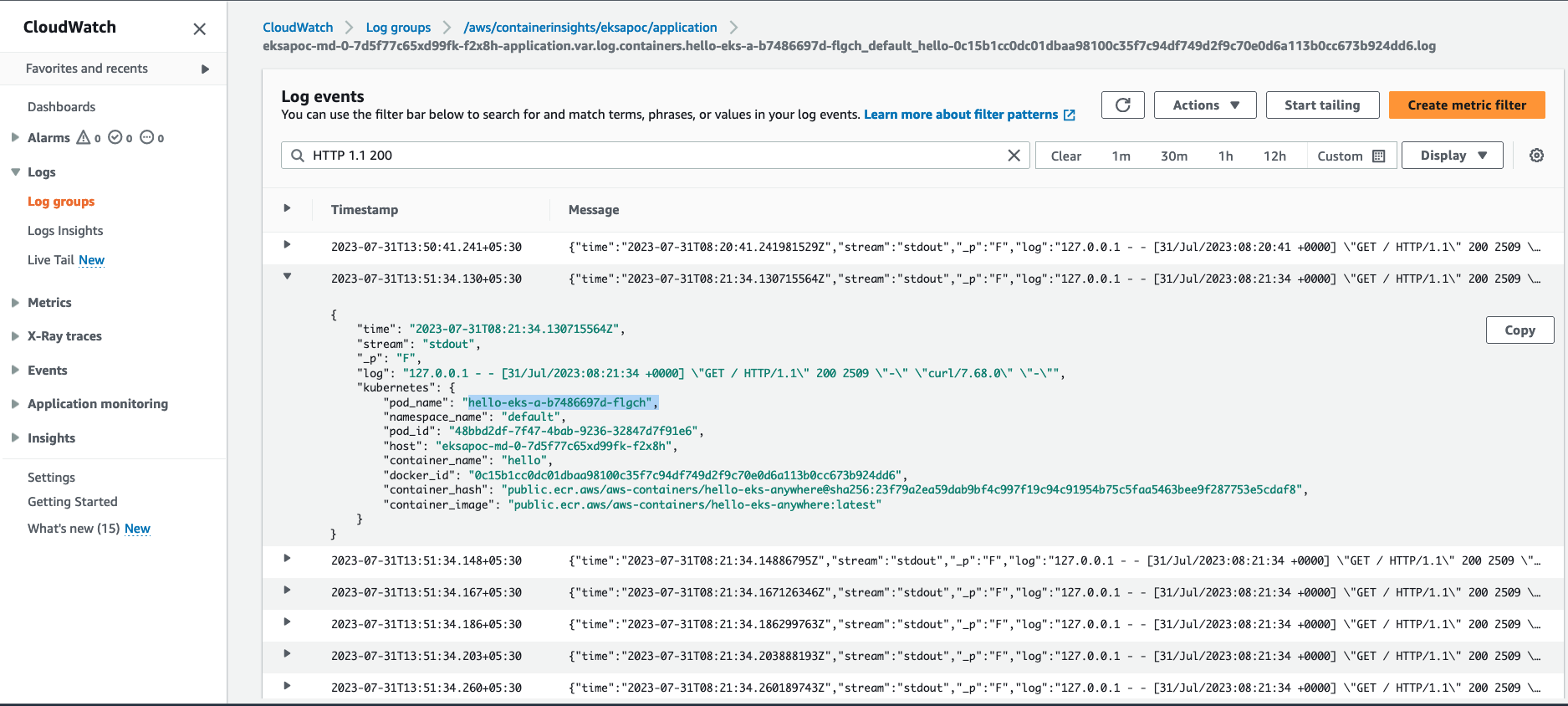

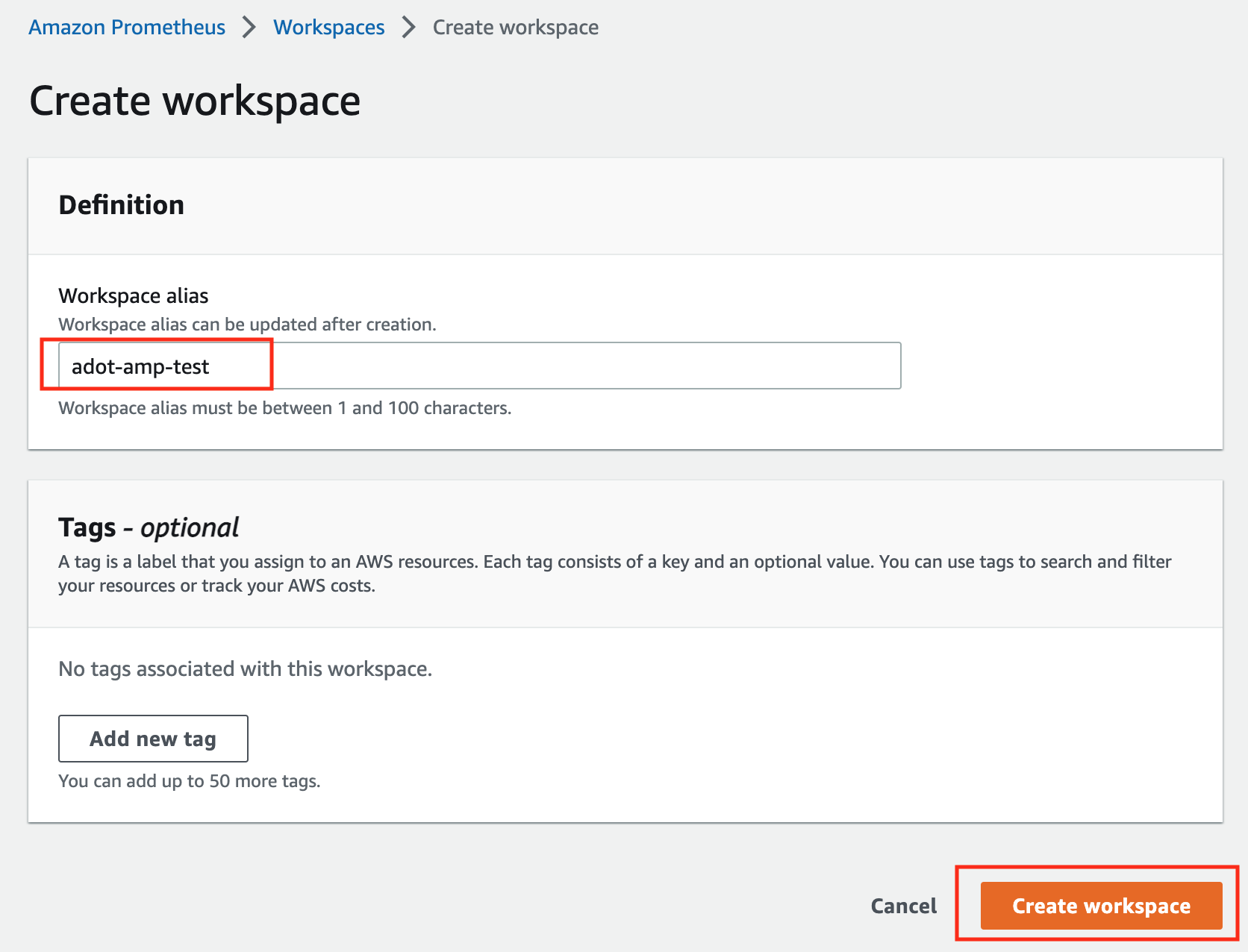

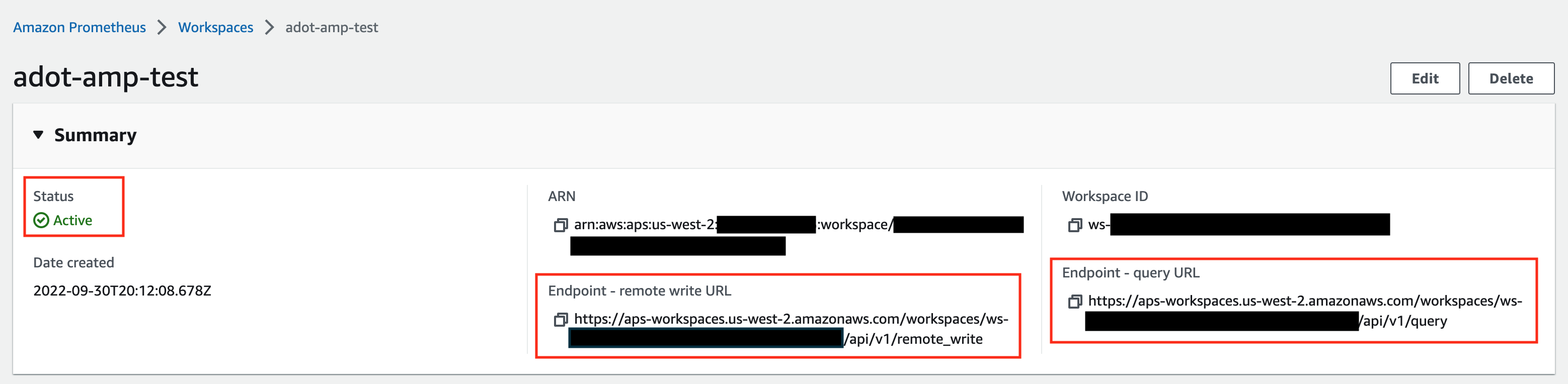

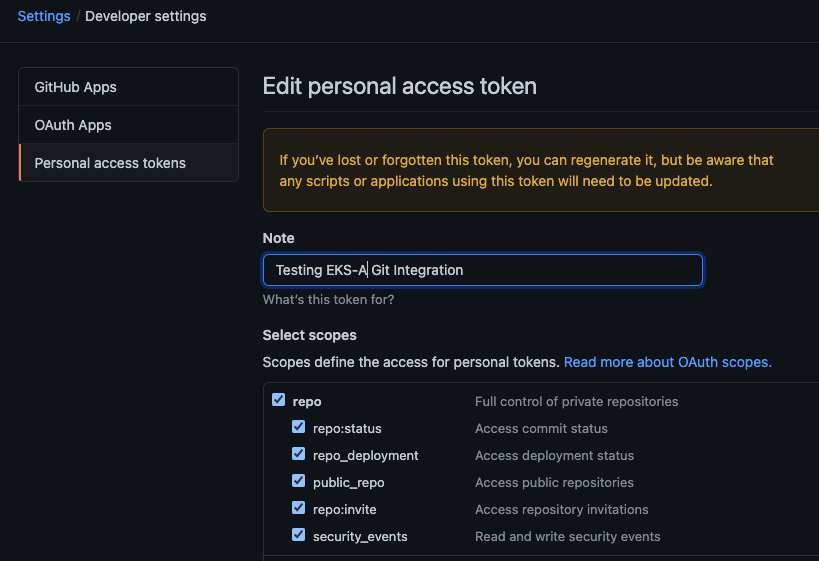

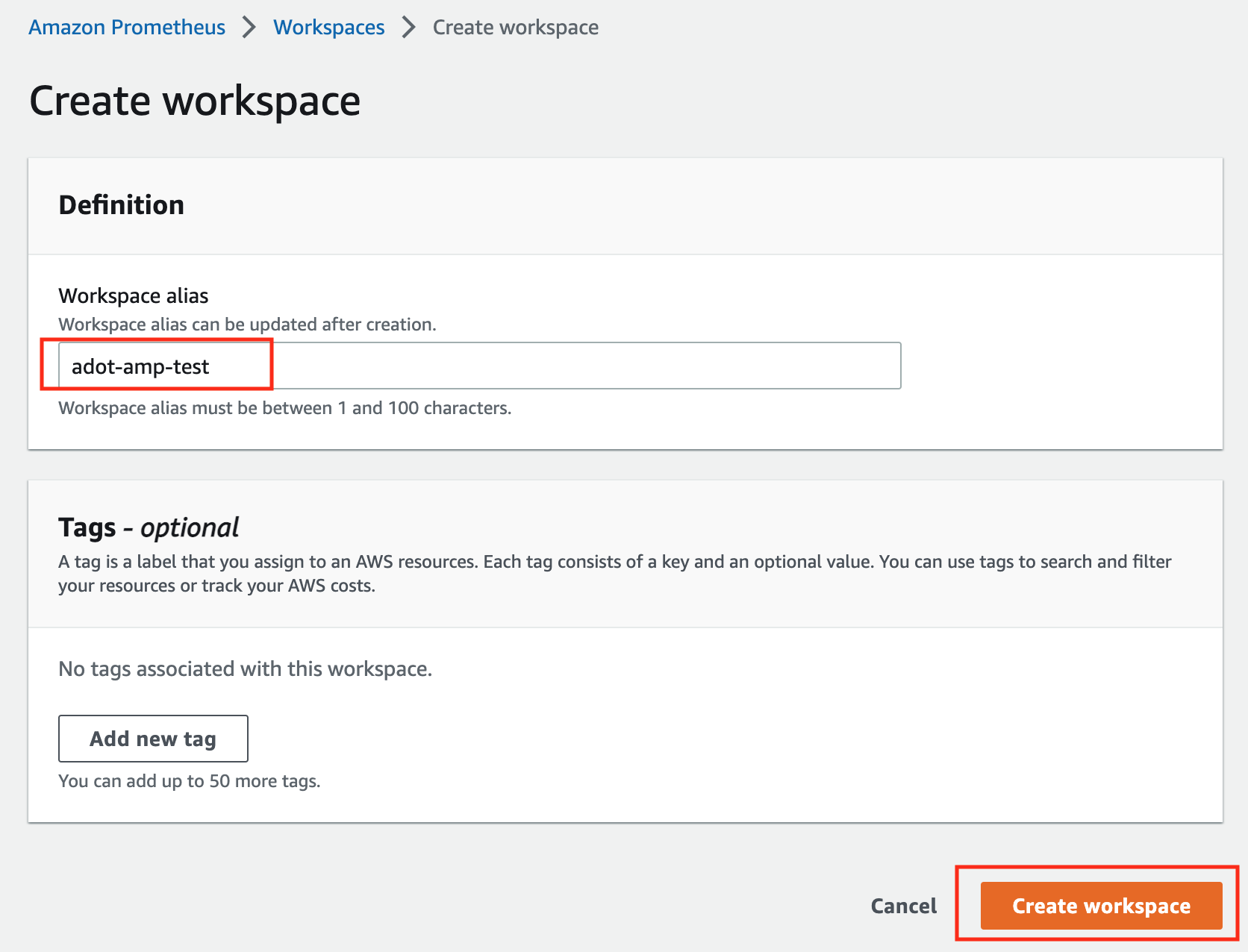

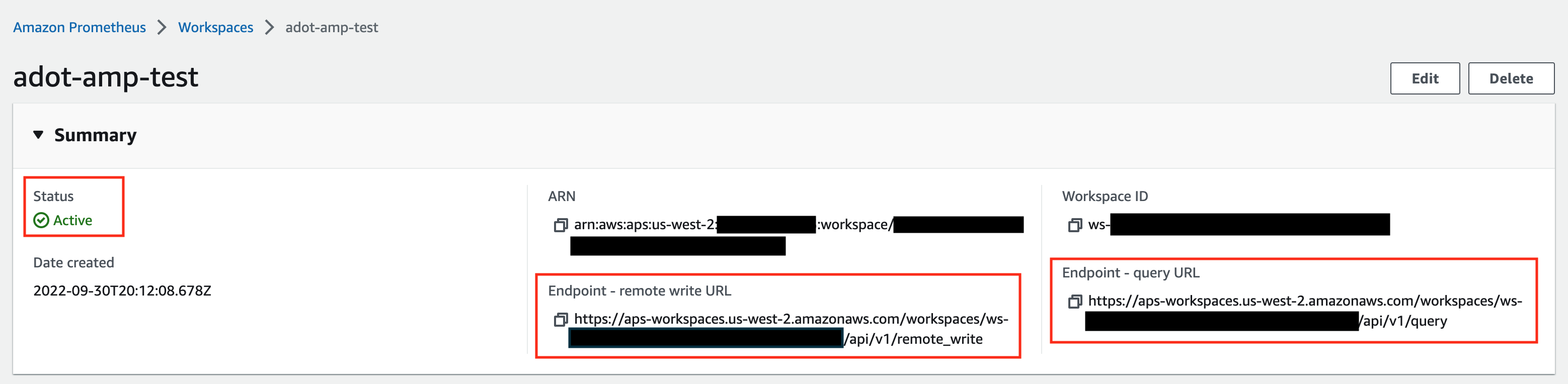

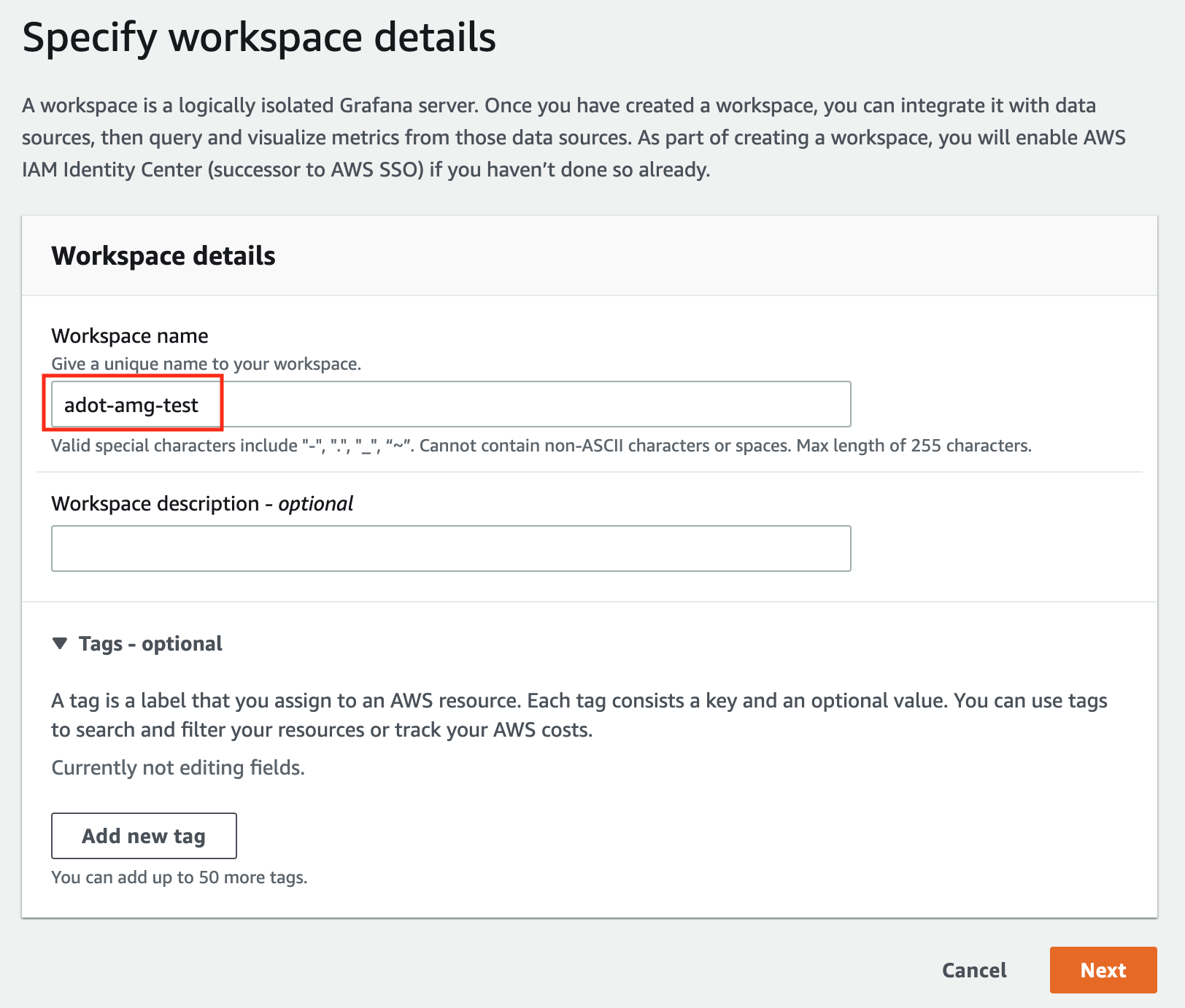

EKS Anywhere can run connected to an AWS Region or disconnected from an AWS Region, including in air-gapped environments. If you run EKS Anywhere connected to an AWS Region, you can view your clusters in the Amazon EKS console with the EKS Connector and can optionally use AWS IAM for cluster authentication, AWS IAM Roles for Service Accounts (IRSA), cert-manager with AWS Certificate Manager, the AWS Distro for OpenTelemetry (ADOT) collector with Amazon Managed Service for Prometheus, and Fluent Bit with Amazon CloudWatch Logs.

What are the differences between EKS Anywhere and EKS Hybrid Nodes?

EKS Hybrid Nodes is a feature of Amazon EKS, a managed Kubernetes service, whereas EKS Anywhere is AWS-supported Kubernetes management software that you manage. EKS Hybrid Nodes is a fit for customers with on-premises environments that can be connected to the cloud, whereas EKS Anywhere is a fit for customers with isolated or air-gapped on-premises environments.

With EKS Hybrid Nodes, AWS manages the security, availability, and scalability of the Kubernetes control plane, which is hosted in AWS Cloud, and only nodes run on your infrastructure. With EKS Anywhere, you are responsible for managing Kubernetes clusters that run entirely on your infrastructure. EKS Hybrid Nodes uses a “bring-your-own-infrastructure” approach: you provision and manage the infrastructure for your hybrid nodes with your own choice of tooling. EKS Anywhere, by contrast, integrates with Cluster API (CAPI) to provision and manage the infrastructure used as nodes in EKS Anywhere clusters.

With EKS Hybrid Nodes, there are no upfront commitments or minimum fees; you pay hourly for your cluster and nodes as you use them, and the EKS Hybrid Nodes fee is based on the vCPUs of connected hybrid nodes. With EKS Anywhere, you can purchase EKS Anywhere Enterprise Subscriptions for a one-year or three-year term on a per-cluster basis.
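The two pricing models scale with different quantities: Hybrid Nodes with hours and connected vCPUs, EKS Anywhere subscriptions with the number of clusters and the term length. The sketch below only illustrates that structure. Every rate is a placeholder parameter to be taken from the official pricing pages, the function names are hypothetical, and it assumes a flat per-vCPU-hour rate plus an hourly EKS cluster fee for Hybrid Nodes, ignoring any volume tiers.

```python
def hybrid_nodes_cost(hours: float, cluster_rate_per_hour: float,
                      vcpus: int, rate_per_vcpu_hour: float) -> float:
    """Pay-as-you-go estimate: EKS cluster hours plus vCPU-hours of connected hybrid nodes.

    Assumes a flat rate per vCPU-hour; published pricing may differ.
    """
    return hours * cluster_rate_per_hour + hours * vcpus * rate_per_vcpu_hour


def eksa_subscription_cost(clusters: int, price_per_cluster_per_term: float,
                           terms: int = 1) -> float:
    """Commitment estimate: one subscription per cluster for each one- or three-year term."""
    return clusters * price_per_cluster_per_term * terms


# Placeholder rates only; substitute current published prices before drawing conclusions.
print(hybrid_nodes_cost(hours=720, cluster_rate_per_hour=1.0, vcpus=64, rate_per_vcpu_hour=0.5))
print(eksa_subscription_cost(clusters=3, price_per_cluster_per_term=100.0))
```

The point of the comparison is the scaling behavior: Hybrid Nodes cost grows with node vCPUs and uptime, while subscription cost grows with the number of clusters regardless of node size.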

What are the differences between EKS Anywhere and ECS Anywhere?

ECS Anywhere is a feature of Amazon ECS that can be used to run containers on your on-premises infrastructure. ECS Anywhere is similar to EKS Hybrid Nodes: the ECS control plane runs in an AWS Region, managed by ECS, and you connect your on-premises hosts as instances to your ECS clusters so that tasks can be scheduled on your on-premises ECS instances. With EKS Anywhere, you are responsible for managing the Kubernetes clusters that run entirely on your infrastructure.

Architecture

What infrastructure do I need to get started with EKS Anywhere?

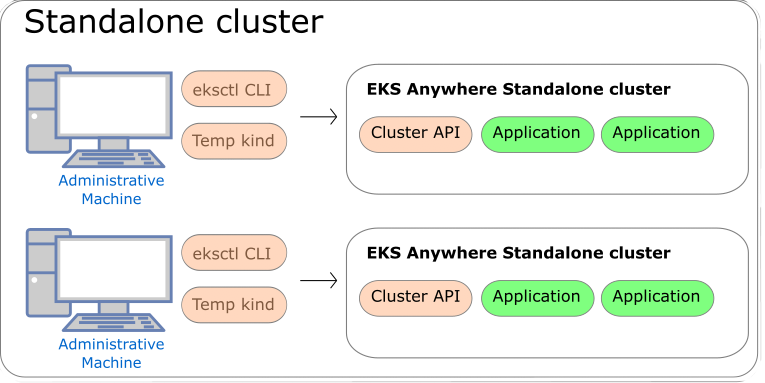

To get started with EKS Anywhere on VMware vSphere, Nutanix, AWS Snowball Edge, or Apache CloudStack, you need 1 admin machine, at least 1 VM for the control plane, and at least 1 VM for the worker node. If you are running on bare metal, you need at least 1 admin machine and 1 physical server for the co-located control plane and worker node.
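The minimum footprint above can be encoded as a small lookup, for example to sanity-check a lab inventory. The provider keys are hypothetical labels chosen for this sketch, not EKS Anywhere identifiers, and the counts cover only a first, non-production cluster.

```python
# Hypothetical provider labels for this sketch (not EKS Anywhere datacenter kinds).
VIRTUALIZED = {"vsphere", "nutanix", "snowball-edge", "cloudstack"}


def minimum_machines(provider: str) -> dict[str, int]:
    """Minimum machines to get started, per the FAQ answer above (not a production sizing)."""
    provider = provider.lower()
    if provider == "bare-metal":
        # One physical server hosts the co-located control plane and worker node.
        return {"admin_machine": 1, "physical_servers": 1}
    if provider in VIRTUALIZED:
        # Separate VMs for the control plane and the worker node.
        return {"admin_machine": 1, "control_plane_vms": 1, "worker_vms": 1}
    raise ValueError(f"unknown provider label: {provider!r}")


print(minimum_machines("vsphere"))
```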

What infrastructure do I need to run EKS Anywhere in production?

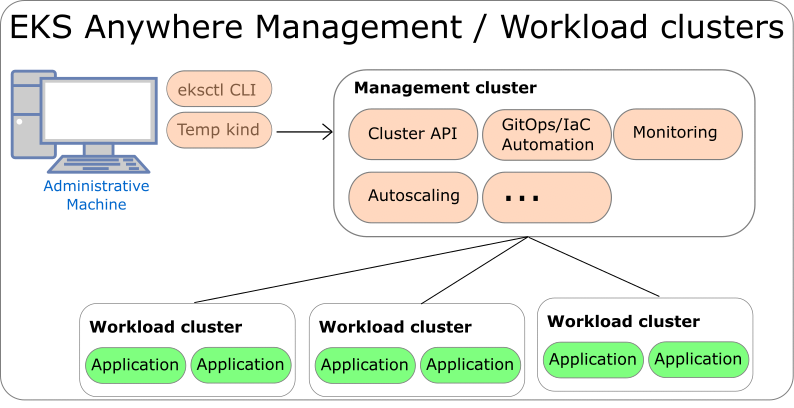

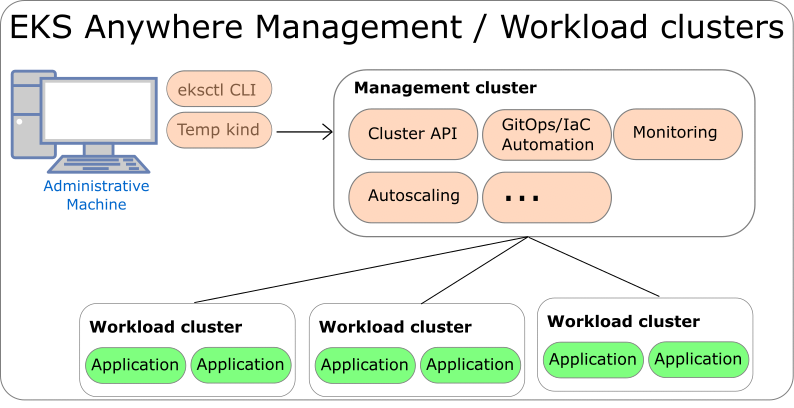

To use EKS Anywhere in production, it is generally recommended to run separate management and workload clusters; see EKS Anywhere Architecture for more information. It is also recommended to run both the management cluster and workload clusters in a highly available fashion, with the Kubernetes control plane instances spread across multiple virtual or physical hosts. For management clusters, management components run on worker nodes that are separate from the Kubernetes control plane machines. For workload clusters, application workloads run on worker nodes that are separate from the Kubernetes control plane machines, unless you are running on bare metal, which allows co-locating the Kubernetes control plane and worker nodes on the same physical machines.

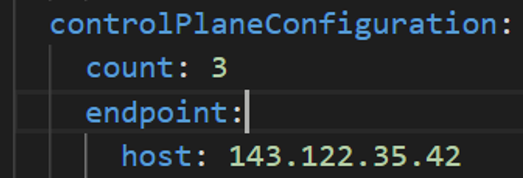

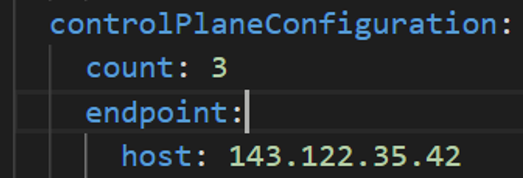

If you are using VMware vSphere, Nutanix, AWS Snowball Edge, or Apache CloudStack for your infrastructure, it is recommended to run at least 3 separate virtual machines for the etcd instances of the Kubernetes control plane, which can be configured with the externalEtcdConfiguration setting of the EKS Anywhere cluster specification. For more information, see the installation Overview and the requirements for using EKS Anywhere for each infrastructure provider below.
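The recommendation of three etcd machines follows from how etcd's Raft consensus works: writes need a strict majority (quorum) of members, so a cluster of n members tolerates n - (n // 2 + 1) failed members. The snippet below is a generic illustration of that arithmetic, not EKS Anywhere code.

```python
def etcd_quorum(members: int) -> int:
    """Votes etcd (Raft) needs to commit a write: a strict majority of members."""
    if members < 1:
        raise ValueError("an etcd cluster needs at least one member")
    return members // 2 + 1


def etcd_fault_tolerance(members: int) -> int:
    """How many members can fail while a quorum is still available."""
    return members - etcd_quorum(members)


for n in range(1, 6):
    print(f"{n} members: quorum {etcd_quorum(n)}, tolerates {etcd_fault_tolerance(n)} failure(s)")
# 1 members: quorum 1, tolerates 0 failure(s)
# 2 members: quorum 2, tolerates 0 failure(s)
# 3 members: quorum 2, tolerates 1 failure(s)
# 4 members: quorum 3, tolerates 1 failure(s)
# 5 members: quorum 3, tolerates 2 failure(s)
```

Three is the smallest member count that survives losing one machine, and a fourth member raises the quorum without adding tolerance, which is why odd sizes are the norm.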

What permissions does EKS Anywhere need to manage infrastructure used for the cluster?

EKS Anywhere needs permissions to create the virtual machines that are used as nodes in EKS Anywhere clusters. If you are running EKS Anywhere on bare metal, EKS Anywhere needs to be able to remotely manage your bare metal servers for network booting. You must configure these permissions before creating EKS Anywhere clusters.

What components does EKS Anywhere use?

EKS Anywhere is built on the Kubernetes sub-project called Cluster API (CAPI), which is focused on providing declarative APIs and tooling to simplify the provisioning, upgrading, and operating of multiple Kubernetes clusters. EKS Anywhere inherits many of the same architectural patterns and concepts that exist in CAPI. Reference the CAPI documentation to learn more about the core CAPI concepts.

EKS Anywhere has four categories of components, all based on open source software:

- Administrative / CLI components: Responsible for lifecycle operations of management or standalone clusters, building images, and collecting support diagnostics. Admin / CLI components run on Admin machines or image building machines.

- Management components: Responsible for infrastructure and cluster lifecycle management (create, update, upgrade, scale, delete). Management components run on standalone or management clusters.

- Cluster components: Components that make up a Kubernetes cluster where applications run. Cluster components run on standalone, management, and workload clusters.

- Curated packages: Amazon-curated software packages that extend the core functionalities of Kubernetes on your EKS Anywhere clusters

For more information on EKS Anywhere components, reference EKS Anywhere Architecture.

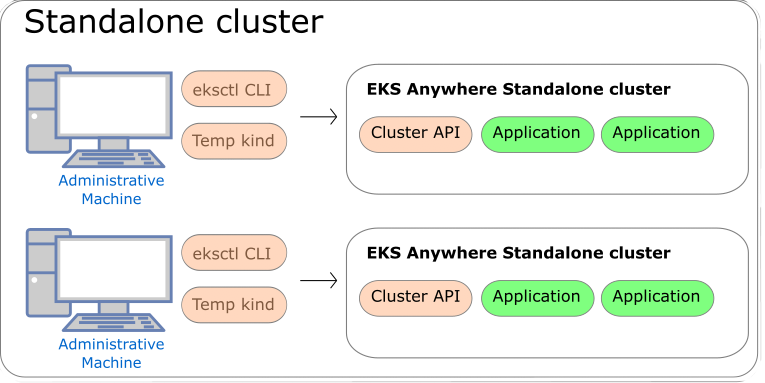

What interfaces can I use to manage EKS Anywhere clusters?

The tools available for cluster lifecycle operations (create, update, upgrade, scale, delete) vary based on the EKS Anywhere architecture you run. You must use the eksctl CLI for cluster lifecycle operations on standalone clusters and management clusters. If you are running a management / workload cluster architecture, you can use the management cluster to manage one or more downstream workload clusters. With this architecture, you can use the eksctl CLI, any Kubernetes API-compatible client, Infrastructure as Code (IaC) tooling such as Terraform, or GitOps to manage the lifecycle of workload clusters. For details on the differences between the architecture options, reference the Architecture page.

To perform cluster lifecycle operations for standalone, management, or workload clusters, you modify the EKS Anywhere Cluster specification, which is a Kubernetes Custom Resource for EKS Anywhere clusters. When you modify a field in an existing Cluster specification, EKS Anywhere reconciles the infrastructure and Kubernetes components until they match the new desired state you defined.
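The reconciliation described above is the Kubernetes controller pattern: repeatedly compare the desired state (the Cluster specification) with the observed state and act on the differences until none remain. The sketch below is a deliberately simplified model of that loop, not EKS Anywhere's controller, which builds on Cluster API. The flat dict shape, the hypothetical `workerCount` field, and the one-machine-per-pass scaling step are assumptions made for illustration.

```python
def diff(desired: dict, observed: dict) -> dict:
    """Fields whose observed value does not yet match the desired value."""
    return {key: value for key, value in desired.items() if observed.get(key) != value}


def reconcile(desired: dict, observed: dict, max_iterations: int = 20) -> dict:
    """Move observed state toward desired state, one small change per pass.

    Integer fields (hypothetical machine counts) change by one per pass;
    any other field is set directly. Returns the converged state.
    """
    state = dict(observed)
    for _ in range(max_iterations):
        pending = diff(desired, state)
        if not pending:
            return state  # converged: observed matches desired
        field, target = next(iter(pending.items()))
        current = state.get(field)
        if isinstance(target, int) and isinstance(current, int):
            state[field] = current + (1 if target > current else -1)
        else:
            state[field] = target
    raise RuntimeError(f"did not converge, still pending: {diff(desired, state)}")


desired = {"kubernetesVersion": "1.33", "workerCount": 3}
observed = {"kubernetesVersion": "1.32", "workerCount": 1}
print(reconcile(desired, observed))  # {'kubernetesVersion': '1.33', 'workerCount': 3}
```

Re-computing the diff on every pass, instead of planning all changes up front, is what lets a real controller recover from partial failures: whatever is still different on the next pass simply gets acted on again.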

EKS Anywhere Enterprise Subscriptions

For more information on EKS Anywhere Enterprise Subscriptions, see the Overview of support for EKS Anywhere.

What is included in EKS Anywhere Enterprise Subscriptions?

EKS Anywhere Enterprise Subscriptions include support for EKS Anywhere clusters, access to EKS Anywhere Curated Packages, and access to extended support for Kubernetes versions. If you do not have an EKS Anywhere Enterprise Subscription, you cannot get support for EKS Anywhere clusters through AWS Support.

How much do EKS Anywhere Enterprise Subscriptions cost?

For pricing information, visit the EKS Anywhere Pricing page.

How can I purchase an EKS Anywhere Enterprise Subscription?

Reference the Purchase Subscriptions documentation for instructions on how to purchase.

Is there a free trial for EKS Anywhere Enterprise Subscriptions?

Free trial access to EKS Anywhere Curated Packages is available upon request. Free trial access to EKS Anywhere Curated Packages does not include troubleshooting support for your EKS Anywhere deployments. Contact your AWS account team for more information.

1.2 - Partners

EKS Anywhere validated partners

Amazon EKS Anywhere maintains relationships with third-party vendors to provide add-on solutions for EKS Anywhere clusters.

A complete list of these partners is maintained on the Amazon EKS Anywhere Partners page.

See Conformitron: Validate third-party software with Amazon EKS and Amazon EKS Anywhere for information on how conformance testing and quality assurance is done on this software.

The following tables show validated EKS Anywhere partners whose products have passed conformance tests for specific EKS Anywhere providers and versions:

Kubernetes version: 1.31

Date of conformance test: 2024-12-17

The following ISV partners have validated their conformance:

| Vendor | Product | Version |
|---|---|---|
| aqua | aqua-enforcer | 2022.4.20 |
| dynatrace | dynatrace | 1.3.0 |
| kong | kong-enterprise | 2.27.0 |
| accuknox | kubearmor | v1.3.2 |
| kubecost | cost-analyzer | 2.4.3 |
| nirmata | enterprise-kyverno | 1.6.10 |
| lacework | polygraph | 6.11.0 |
| newrelic | nri-bundle | 5.0.101 |
| perfectscale | perfectscale | v0.0.38 |
| pulumi | pulumi-kubernetes-operator | 0.3.0 |
| solo.io | solo-istiod | 1.18.3-eks-a |
| stormforge | optimize-live | 2.16.1 |
| tetrate.io | tetrate-istio-distribution | 1.18.1 |
| hashicorp | vault | 0.25.0 |
| STCLab | wave-autoscale | 1.10.0 |

vSphere provider validated partners

Kubernetes version: 1.31

Date of conformance test: 2024-12-17

The following ISV partners have validated their conformance:

| Vendor | Product | Version |
|---|---|---|
| aqua | aqua-enforcer | 2022.4.20 |
| dynatrace | dynatrace | 1.3.0 |
| kong | kong-enterprise | 2.27.0 |
| accuknox | kubearmor | v1.3.2 |
| kubecost | cost-analyzer | 2.4.3 |
| nirmata | enterprise-kyverno | 1.6.10 |
| lacework | polygraph | 6.11.0 |
| newrelic | nri-bundle | 5.0.101 |
| perfectscale | perfectscale | v0.0.38 |
| pulumi | pulumi-kubernetes-operator | 0.3.0 |
| solo.io | solo-istiod | 1.18.3-eks-a |
| stormforge | optimize-live | 2.16.1 |
| tetrate.io | tetrate-istio-distribution | 1.18.1 |
| hashicorp | vault | 0.25.0 |
| STCLab | wave-autoscale | 1.10.0 |

AWS Hybrid Nodes provider validated partners

Kubernetes version: 1.30

Date of conformance test: 2024-12-17

The following ISV partners have validated their conformance:

| Vendor | Product | Version |
|---|---|---|
| aqua | aqua-enforcer | 2022.4.20 |
| dynatrace | dynatrace | 1.3.0 |
| kong | kong-enterprise | 2.27.0 |
| accuknox | kubearmor | v1.3.2 |
| kubecost | cost-analyzer | 2.4.3 |
| nirmata | enterprise-kyverno | 1.6.10 |
| lacework | polygraph | 6.11.0 |
| newrelic | nri-bundle | 5.0.95 |
| perfectscale | perfectscale | v0.0.38 |
| pulumi | pulumi-kubernetes-operator | 0.3.0 |
| solo.io | solo-istiod | 1.18.3-eks-a |
| stormforge | optimize-live | 2.16.1 |
| tetrate.io | tetrate-istio-distribution | 1.18.1 |
| hashicorp | vault | 0.25.0 |
| STCLab | wave-autoscale | 1.10.0 |

AWS Auto Mode provider validated partners

Kubernetes version: 1.31

Date of conformance test: 2024-12-17

The following ISV partners have validated their conformance:

| Vendor | Product | Version |
|---|---|---|
| aqua | aqua-enforcer | 2022.4.20 |
| dynatrace | dynatrace | 1.3.0 |
| kong | kong-enterprise | 2.27.0 |
| accuknox | kubearmor | v1.3.2 |
| kubecost | cost-analyzer | 2.4.3 |
| nirmata | enterprise-kyverno | 1.6.10 |
| lacework | polygraph | 6.11.0 |
| perfectscale | perfectscale | v0.0.38 |
| pulumi | pulumi-kubernetes-operator | 0.3.0 |
| solo.io | solo-istiod | 1.18.3-eks-a |
| stormforge | optimize-live | 2.16.1 |
| tetrate.io | tetrate-istio-distribution | 1.18.1 |
| hashicorp | vault | 0.25.0 |
| STCLab | wave-autoscale | 1.10.0 |

AWS Cloud provider validated partners

Kubernetes version: 1.28

Date of conformance test: 2024-12-17

The following ISV partners have validated their conformance:

| Vendor | Product | Version |
|---|---|---|
| aqua | aqua-enforcer | 2022.4.20 |
| dynatrace | dynatrace | 1.3.0 |
| kong | kong-enterprise | 2.27.0 |
| accuknox | kubearmor | v1.3.2 |
| kubecost | cost-analyzer | 2.4.3 |
| nirmata | enterprise-kyverno | 1.6.10 |
| lacework | polygraph | 6.11.0 |
| newrelic | nri-bundle | 5.0.101 |
| perfectscale | perfectscale | v0.0.38 |
| pulumi | pulumi-kubernetes-operator | 0.3.0 |
| solo.io | solo-istiod | 1.18.3-eks-a |
| stormforge | optimize-live | 2.16.1 |
| tetrate.io | tetrate-istio-distribution | 1.18.1 |
| hashicorp | vault | 0.25.0 |
| STCLab | wave-autoscale | 1.10.0 |

AWS Snow provider validated partners

Kubernetes version: 1.29

Date of conformance test: 2024-12-17

The following ISV partners have validated their conformance:

| Vendor | Product | Version |
|---|---|---|
| aqua | aqua-enforcer | 2022.4.20 |
| dynatrace | dynatrace | 1.3.0 |
| kong | kong-enterprise | 2.27.0 |
| accuknox | kubearmor | v1.3.2 |
| kubecost | cost-analyzer | 2.4.3 |
| nirmata | enterprise-kyverno | 1.6.10 |
| lacework | polygraph | 6.11.0 |
| newrelic | nri-bundle | 5.0.101 |
| perfectscale | perfectscale | v0.0.38 |
| pulumi | pulumi-kubernetes-operator | 0.3.0 |
| solo.io | solo-istiod | 1.18.3-eks-a |
| stormforge | optimize-live | 2.16.1 |
| tetrate.io | tetrate-istio-distribution | 1.18.1 |
| hashicorp | vault | 0.25.0 |
| STCLab | wave-autoscale | 1.10.0 |

2 - What's New

New EKS Anywhere releases, features, and fixes

2.1 - Changelog

Changelog for EKS Anywhere releases

Announcements

- If you are upgrading your management cluster to a v0.22.x patch version prior to v0.22.3, you may encounter a bug related to extended Kubernetes version support that blocks lifecycle management (LCM) operations on workload clusters running versions prior to v0.22.0. To avoid this issue, we recommend upgrading your management cluster directly to v0.22.3 before performing any workload cluster LCM operations.

- If you are running EKS Anywhere v0.22.0 or v0.22.1 in an air-gapped environment with a proxy enabled, you may be affected by a Helm v3.17.1 bug that impacts proxy functionality in air-gapped environments. To resolve this, we recommend upgrading to EKS Anywhere v0.22.2 or above. More details can be found here.

- Due to a bug introduced in Cilium 1.14, which is present in EKS Anywhere v0.21.0 through v0.21.6, we recommend that you upgrade to v0.21.7 or above to fix an issue when using hostPort. More details are listed here.

- Due to a bug in the sigs.k8s.io/yaml module that EKS Anywhere uses, Kubernetes versions whose minor versions are multiples of 10, such as 1.30 and 1.40, are parsed as float64 instead of string if specified without quotes in the cluster config file. This causes the trailing zero to be dropped, so these versions are evaluated as 1.3 and 1.4 respectively. This issue has been fixed in EKS Anywhere release v0.21.5, so we recommend that you upgrade to that version or later. If you are unable to upgrade to v0.21.5, you must use single or double quotes around Kubernetes versions whose minor versions are multiples of 10.

Refer to the following links for more information regarding this issue:

- EKS Anywhere release v0.19.0 introduces support for creating Kubernetes version v1.29 clusters. A conformance test was promoted in Kubernetes v1.29 that verifies that Services serving different L4 protocols with the same port number can co-exist in a Kubernetes cluster. This is not supported in Cilium, the CNI deployed on EKS Anywhere clusters, because Cilium currently does not differentiate between TCP and UDP protocols for Kubernetes Services. Hence EKS Anywhere clusters running Kubernetes v1.29 and above will not pass this specific conformance test. This service protocol differentiation is being tracked in an upstream Cilium issue and will be supported in a future Cilium release. A future release of EKS Anywhere will include the patched Cilium version when it is available.

Refer to the following links for more information regarding the conformance test:

- The Bottlerocket project will not be releasing bare metal variants for Kubernetes versions v1.29 and beyond. Hence Bottlerocket is not a supported operating system for creating EKS Anywhere bare metal clusters with Kubernetes versions v1.29 and above. However, Bottlerocket is still supported for bare metal clusters running Kubernetes versions v1.28 and below.

Refer to the following links for more information regarding the deprecation:

- On January 31, 2024, a high-severity vulnerability, CVE-2024-21626, was published affecting all runc versions <= v1.1.11. This CVE has been fixed in runc version v1.1.12, which has been included in EKS Anywhere release v0.18.6. To fix this CVE in your new or existing EKS-A cluster, you MUST build or download new OS images for version v0.18.6 and create or upgrade your cluster with these images.

Refer to the following links for more information on the steps to mitigate the CVE.

- On October 11, 2024, a security issue, CVE-2024-9594, was discovered in the Kubernetes Image Builder: default credentials are enabled during the image build process when using the Nutanix, OVA, QEMU, or raw providers. The credentials can be used to gain root access, and they are disabled at the conclusion of the image build process. Kubernetes clusters are only affected if their nodes use VM images created via the Image Builder project. Clusters using virtual machine images built with Kubernetes Image Builder version v0.1.37 or earlier are affected if the images were built with the Nutanix, OVA, QEMU, or raw providers. Images built with these earlier image-builder versions are vulnerable only during the image build process, and only if an attacker was able to reach the VM where the image build was happening, log in using these default credentials, and modify the image while the build was occurring. This CVE has been fixed in image-builder versions >= v0.1.38, which have been included in EKS Anywhere releases v0.19.11 and v0.20.8.
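The version-specific announcements above reduce to a few range checks. The sketch below encodes them so they can be run against an inventory of clusters. It is hypothetical tooling, not part of EKS Anywhere; it assumes plain numeric versions such as `v0.22.1` or `1.1.12` (no pre-release suffixes), and the float round-trip in `survives_unquoted` only mimics how an unquoted YAML value loses its trailing zero.

```python
def parse_version(version: str) -> tuple[int, ...]:
    """Turn 'v0.22.3' or '1.1.12' into a comparable tuple such as (0, 22, 3)."""
    return tuple(int(part) for part in version.lstrip("v").split("."))


def workload_lcm_blocked(target_mgmt: str, workload_versions: list[str]) -> bool:
    """Management cluster on v0.22.0-v0.22.2 with any workload cluster older than v0.22.0."""
    in_buggy_range = (0, 22, 0) <= parse_version(target_mgmt) < (0, 22, 3)
    return in_buggy_range and any(parse_version(w) < (0, 22, 0) for w in workload_versions)


def advisories_for(eksa_version: str, airgapped_proxy: bool = False) -> list[str]:
    """Announcements that apply to a single EKS Anywhere version."""
    v = parse_version(eksa_version)
    notes = []
    if airgapped_proxy and v in {(0, 22, 0), (0, 22, 1)}:
        notes.append("Helm v3.17.1 proxy bug: upgrade to v0.22.2 or above")
    if (0, 21, 0) <= v <= (0, 21, 6):
        notes.append("Cilium hostPort bug: upgrade to v0.21.7 or above")
    if v < (0, 21, 5):
        notes.append("quote Kubernetes versions such as '1.30' in the cluster config")
    return notes


def runc_vulnerable(runc_version: str) -> bool:
    """CVE-2024-21626 affects runc <= v1.1.11 (fixed in v1.1.12)."""
    return parse_version(runc_version) <= (1, 1, 11)


def image_builder_vulnerable(version: str, provider: str) -> bool:
    """CVE-2024-9594: image-builder <= v0.1.37 with the Nutanix, OVA, QEMU, or raw providers."""
    affected = {"nutanix", "ova", "qemu", "raw"}
    return parse_version(version) <= (0, 1, 37) and provider.lower() in affected


def survives_unquoted(k8s_version: str) -> bool:
    """Mimic an unquoted version being read as a float and written back as text."""
    return str(float(k8s_version)) == k8s_version


print(survives_unquoted("1.29"), survives_unquoted("1.30"))  # True False
print(advisories_for("v0.21.4"))
```

Comparing integer tuples rather than strings matters here: as strings, "v0.21.10" would sort before "v0.21.7".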

General Information

- When upgrading to a new minor version, a new OS image must be created using the new image-builder CLI pertaining to that release.

Supported OS version details

| Operating system | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
|---|---|---|---|---|---|
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.33.0 | ✔ | — | — | — | — |
| RHEL 8.x | ✔ | ✔ | ✔ | ✔ | — |
| RHEL 9.x | ✔ | ✔ | ✔ | ✔ | — |

Added

Changed

- Added EKS-D for 1-33:
- Cert-manager: v1.16.5 to v1.17.2
- Cluster API: v1.9.6 to v1.10.2
- Cluster API Provider CloudStack: v0.5.0 to v0.6.0
- Cluster API Provider Nutanix: v1.5.4 to v1.6.1
- Cluster API Provider Tinkerbell: v0.6.4 to v0.6.5
- Cluster API Provider vSphere: v1.12.0 to v1.13.0
- Controller-runtime: v0.16.5 to v0.20.4
- Kube-rbac-proxy: v0.19.0 to v0.19.1
- Cri-tools: v1.32.0 to v1.33.0
- Flux:
  - CLI: v2.5.1 to v2.6.0
  - Helm Controller: v1.2.0 to v1.3.0
  - Kustomize Controller: v1.5.1 to v1.6.0
  - Notification Controller: v1.5.0 to v1.6.0
  - Source Controller: v1.5.0 to v1.6.0
- Govmomi: v0.48.1 to v0.50.0
- Image builder: v0.1.42 to v0.1.44
- Kind: v0.26.0 to v0.29.0
- Kube-vip: v0.8.10 to v0.9.1
- Troubleshoot: v0.117.0 to v0.119.0

Removed

- With CAPI diagnostics enabled, removed the redundant kube-rbac-proxy metrics server from the CloudStack provider controller.

- Removed the vSphere failure domain feature gate VSPHERE_FAILURE_DOMAIN_ENABLED, which graduated to GA in this version (#9827)

Supported OS version details

| Operating system | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
|---|---|---|---|---|---|
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.32.0 | ✔ | — | — | — | — |
| RHEL 8.x | ✔ | ✔ | ✔ | ✔ | — |
| RHEL 9.x | ✔ | ✔ | ✔ | ✔ | — |

Changed

- EKS Distro:
- Cert-manager: v1.16.4 to v1.16.5
- Cluster API Provider Nutanix: v1.5.3 to v1.5.4
- Cluster API Provider Tinkerbell: v0.6.4 to v0.6.5
- Cilium: v1.15.14-eksa.1 to v1.15.16-eksa.1
- Kube-rbac-proxy: v0.19.0 to v0.19.1

Fixed

- Tinkerbell workflow updates running into rate limit issues during concurrent provisioning (#4616)
- Some Tinkerbell workflows getting stuck at STATE_PENDING (#4616)
- Honor the --no-timeouts flag during BMC checks (#9786)
- Improve latency for BMC interactions (#9791)
- Add retries around the mount action to address race conditions while a device becomes available (#4639)
- Validate EKS Distro manifest signature for extended Kubernetes version support (#9801)

Supported OS version details

| Operating system | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
|---|---|---|---|---|---|
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.32.0 | ✔ | — | — | — | — |
| RHEL 8.x | ✔ | ✔ | ✔ | ✔ | — |
| RHEL 9.x | ✔ | ✔ | ✔ | ✔ | — |

Changed

- EKS Distro:
- kube-vip: v0.8.9 to v0.8.10
- New base images with CVE fixes for Amazon Linux 2

Fixed

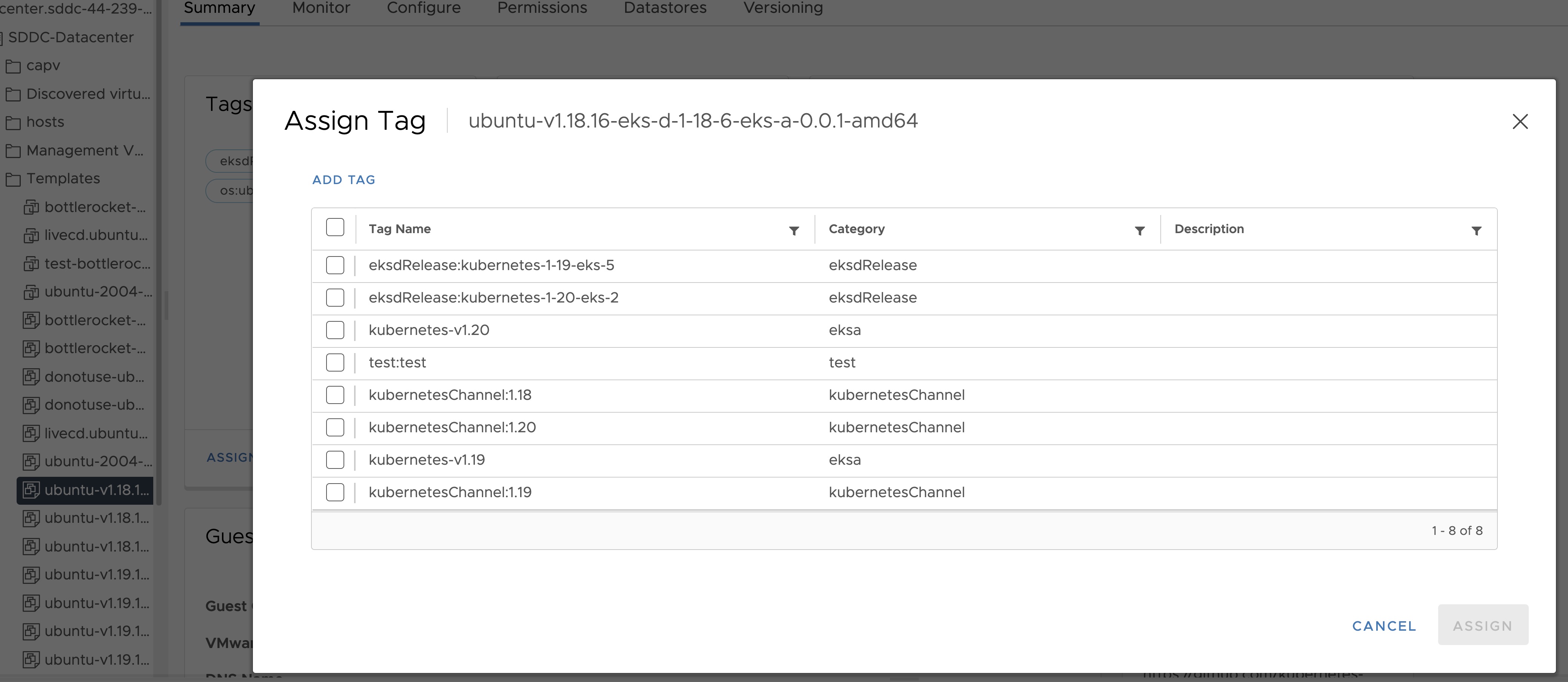

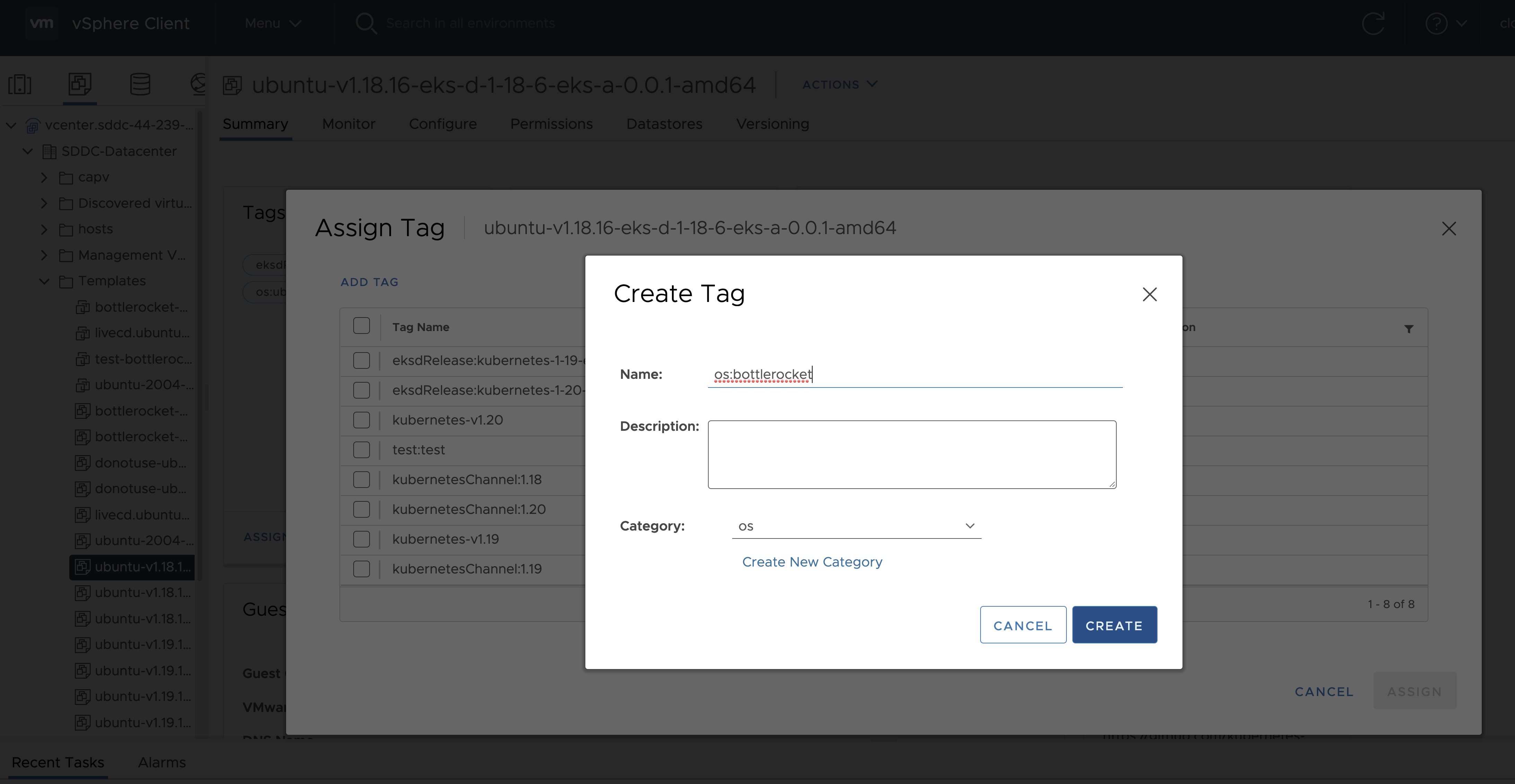

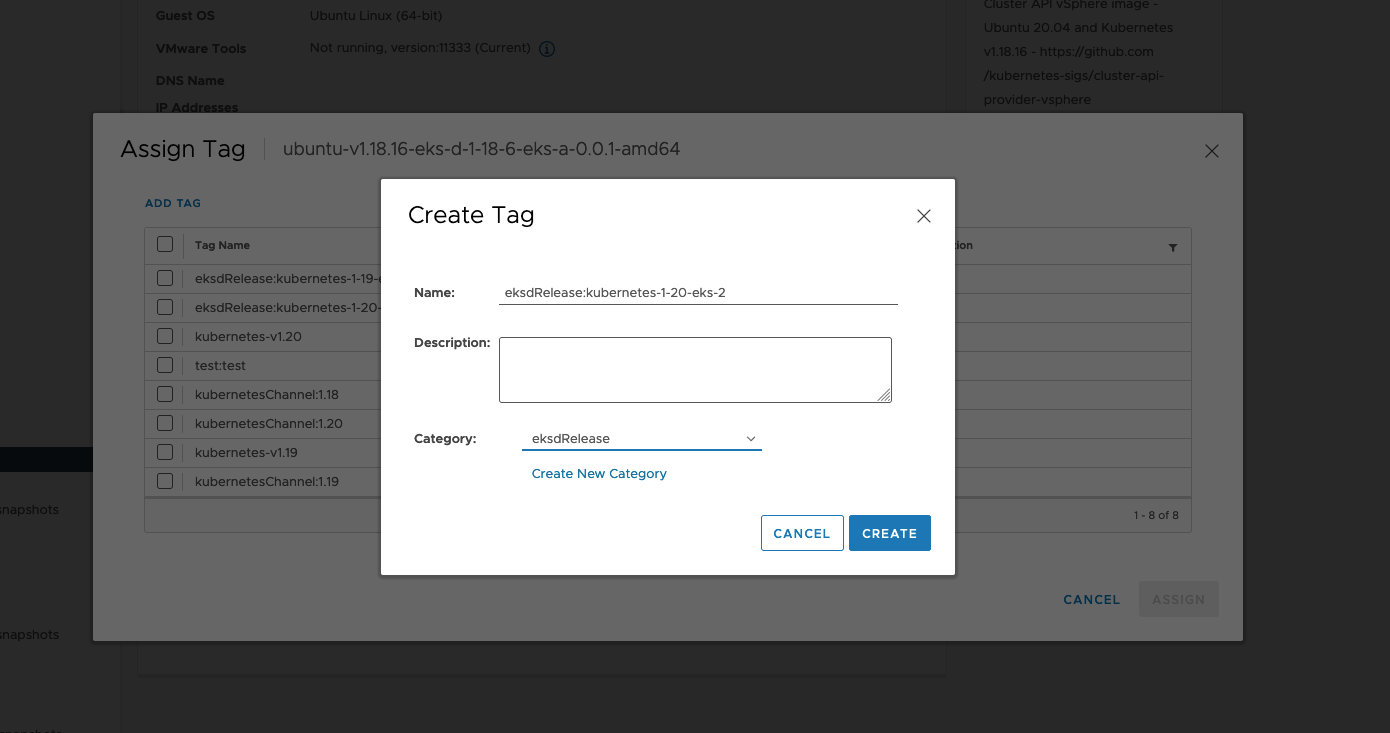

- Assign multiple vcenter tags to a machine (#9707

)

- Fix an issue when creating vSphere group via EKS-A CLI (#9458

)

- Expose CLI flag on Smee to bind interface (#9720

)

- VSphere failure domain delete cluster bug fix (#9711

)

Supported OS version details

| Operating system | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
|---|---|---|---|---|---|
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.32.0 | ✔ | — | — | — | — |
| RHEL 8.x | ✔ | ✔ | ✔ | ✔ | — |
| RHEL 9.x | ✔ | ✔ | ✔ | ✔ | — |

Changed

- EKS Distro:

- New base images with CVE fixes for Amazon Linux 2

Fixed

- Fix bundles override flag issue for upgrade cluster command (#9672)
- Address vulnerability GO-2025-3595 in golang.org/x/net package v0.37.0 (#9668)

Supported OS version details

| Operating system | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
|---|---|---|---|---|---|
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.32.0 | ✔ | — | — | — | — |
| RHEL 8.x | ✔ | ✔ | ✔ | ✔ | — |
| RHEL 9.x | ✔ | ✔ | ✔ | ✔ | — |

Added

- Add support for specifying vm_version in the image builder config (#4510)

Fixed

- Skip bundle signature validation for EKS-A versions prior to v0.22.0 (#9587)

Supported OS version details

| Operating system | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
|---|---|---|---|---|---|
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.32.0 | ✔ | — | — | — | — |
| RHEL 8.x | ✔ | ✔ | ✔ | ✔ | — |
| RHEL 9.x | ✔ | ✔ | ✔ | ✔ | — |

Changed

- EKS Distro:
- Helm: v3.17.1 to v3.16.4
- Rufio: v0.6.4 to v0.6.5
- Cilium: v1.15.13-eksa.2 to v1.15.14-eksa.1
- Curated package controller: v0.4.5 to v0.4.6
- CAPAS: v0.2.0 to v0.2.1
- Containerd: v1.7.26 to v1.7.27

Added

- Support for RHEL 9 for vSphere provider

Fixed

- Fix air-gapped proxy environment issue with the new Helm version v3.17.1 by downgrading Helm to v3.16.4 (#4497)

Supported OS version details

| Operating system | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
|---|---|---|---|---|---|
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.32.0 | ✔ | — | — | — | — |
| RHEL 8.x | ✔ | ✔ | ✔ | ✔ | — |
| RHEL 9.x | — | ✔ | ✔ | ✔ | — |

Changed

- EKS Distro:
- Golang: 1.21 to 1.23 (#9312)
- CAPAS: v0.1.30 to v0.2.0

Fixed

- Address vulnerability GO-2025-3503 in golang.org/x/net package v0.33.0 (#9405)
- Fix Cilium routingMode parameters in Helm configuration (#9401)
- Update RHEL OS version validation from image builder (#4423)
- Update Bottlerocket host containers source extraction logic (#4400)

Supported OS version details

| Operating system | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
|---|---|---|---|---|---|
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.32.0 | ✔ | — | — | — | — |
| RHEL 8.x (*) | ✔ | ✔ | ✔ | ✔ | — |
| RHEL 9.x | — | ✔ | ✔ | ✔ | — |

* RHEL 8’s kernel version (4.18) is not supported by kubeadm for Kubernetes versions 1.32 and above (see Kubernetes GitHub issue #129462). As a result, EKS Anywhere does not support using RHEL 8 as the node operating system for Kubernetes versions 1.32 and above.
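The two Kubernetes-version limits on node operating systems stated in this changelog (no RHEL 8 for Kubernetes 1.32 and above, and no Bottlerocket on bare metal for Kubernetes 1.29 and above) can be checked before creating or upgrading a cluster. This hypothetical sketch encodes only those two rules; the OS and provider labels are made up for illustration, and the supported OS tables remain the authoritative matrix.

```python
from typing import Optional


def k8s_minor(version: str) -> tuple[int, int]:
    """Turn '1.32' or 'v1.32.0' into (1, 32); keep versions as strings to avoid the 1.30 -> 1.3 trap."""
    major, minor = version.lstrip("v").split(".")[:2]
    return int(major), int(minor)


def os_version_restriction(os_name: str, provider: str, k8s_version: str) -> Optional[str]:
    """Return why a node OS is blocked for a Kubernetes version, or None.

    Encodes only two rules from this changelog; a None result does not
    mean the combination appears in the supported OS tables.
    """
    k8s = k8s_minor(k8s_version)
    if os_name == "rhel8" and k8s >= (1, 32):
        return "RHEL 8 kernel 4.18 is not supported by kubeadm for Kubernetes 1.32+"
    if os_name == "bottlerocket" and provider == "bare-metal" and k8s >= (1, 29):
        return "Bottlerocket has no bare metal variants for Kubernetes 1.29+"
    return None


print(os_version_restriction("rhel8", "vsphere", "1.32"))
print(os_version_restriction("rhel9", "vsphere", "1.32"))  # None
```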

Added

- Support for Kubernetes v1.32

- Extended support for Kubernetes versions (#6793, #4174, #9112, #9115, #9150, #9209, #9218, #9222, #9225)

- Support for deploying EKS-A clusters across vSphere Failure Domains. Available behind the feature flag VSPHERE_FAILURE_DOMAIN_ENABLED (#9239)

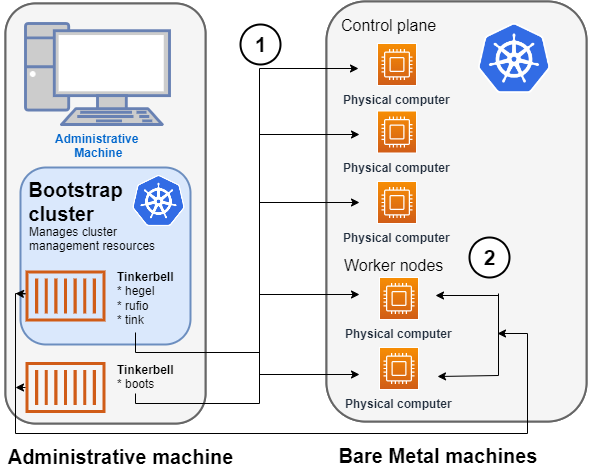

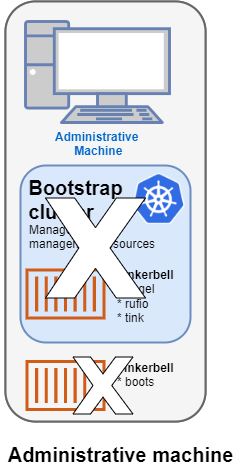

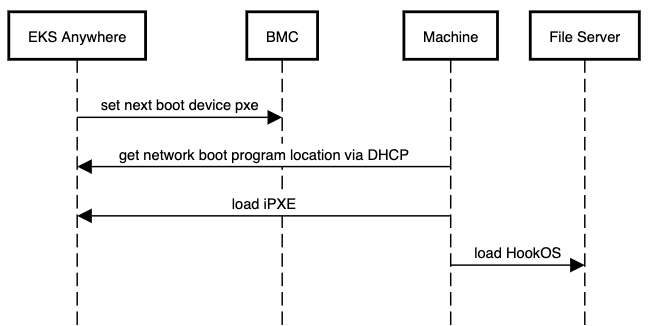

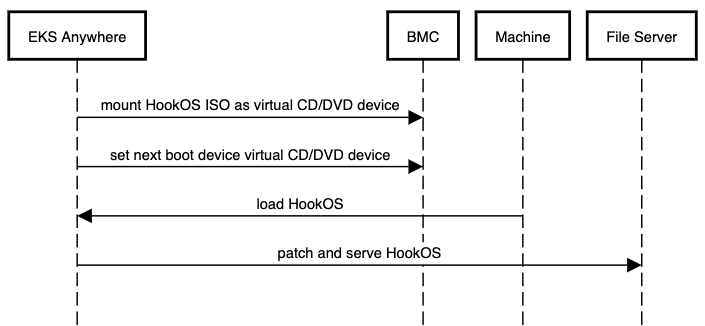

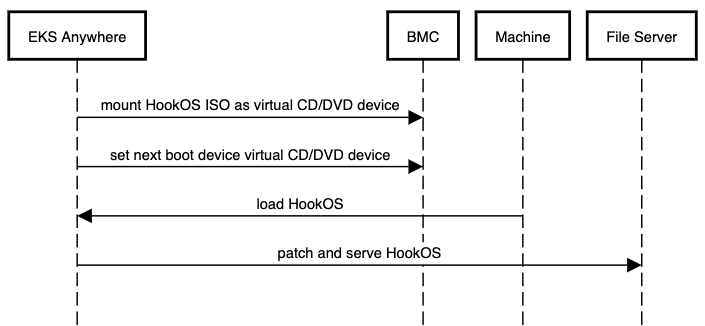

- Enable hardware provisioning through ISO booting for the bare metal provider (#9213). This provides an alternative for customers who do not have L2 connectivity between management and workload clusters, because it removes the dependency on DHCP for bare metal deployments.

Changed

- Added EKS-D for 1-32:

- Cert Manager: v1.15.3 to v1.16.3
- Cilium: v1.14.12 to v1.15.13
- Cluster API: v1.8.3 to v1.9.4
- Cluster API Provider Nutanix: v1.4.0 to v1.5.3
- Cluster API Provider Tinkerbell: v0.5.3 to v0.6.4
- Cluster API Provider vSphere: v1.11.2 to v1.12.0
- Cri-tools: v1.31.1 to v1.32.0
- Flux: v2.4.0 to v2.5.0
- Govmomi: v0.44.1 to v0.48.1
- Helm: v3.16.4 to v3.17.1
- Image builder: v0.1.40 to v0.1.41
- Kind: v0.24.0 to v0.26.0
- Kube-vip: v0.8.0 to v0.8.9
- Tinkerbell Stack:
  - Rufio: v0.4.1 to v0.6.4
  - Hegel: v0.12.0 to v0.14.2
  - Hook: v0.9.1 to v0.10.0
- Troubleshoot: v0.107.4 to v0.117.0

Removed

- Support for Kubernetes v1.27

Supported OS version details

|  | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
| --- | --- | --- | --- | --- | --- |
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.26.2 | ✔ | — | — | — | — |
| RHEL 8.x | ✔ | ✔ | ✔ | ✔ | — |
| RHEL 9.x | — | ✔ | ✔ | ✔ | — |

Changed

- EKS Distro:

- Golang: 1.21 to 1.23 (#9382)
- Image builder: v0.1.40 to v0.1.42
- Curated package controller: v0.4.5 to v0.4.6
- Containerd: v1.7.25 to v1.7.27

Fixed

- Assign multiple vCenter tags to a machine (#9707)
- Fix an issue when creating a vSphere group via the EKS-A CLI (#9458)

Supported OS version details

|  | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
| --- | --- | --- | --- | --- | --- |
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.26.2 | ✔ | — | — | — | — |
| RHEL 8.x | ✔ | ✔ | ✔ | ✔ | — |
| RHEL 9.x | — | ✔ | ✔ | ✔ | — |

Changed

- EKS Distro:

- Cilium: v1.14.12-eksa.2 to v1.14.12-eksa.3
- cluster-api-provider-aws-snow: v0.1.27 to v0.1.30

- New base images with CVE fixes for Amazon Linux 2

Fixed

- Update Cilium 1.14 to fix an issue with hostport functionality (#4330)
- Add namespace to external etcd ref (#9252)

Supported OS version details

|  | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
| --- | --- | --- | --- | --- | --- |
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.26.2 | ✔ | — | — | — | — |
| RHEL 8.x | ✔ | ✔ | ✔ | ✔ | — |
| RHEL 9.x | — | ✔ | ✔ | ✔ | — |

Changed

- EKS Distro:

- local-path-provisioner: v0.0.30 to v0.0.31

- New base images with CVE fixes for Amazon Linux 2

Fixed

- Update corefile-migration patch to support CoreDNS v1.11.4 (#4285)

Supported OS version details

|  | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
| --- | --- | --- | --- | --- | --- |
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.26.2 | ✔ | — | — | — | — |
| RHEL 8.x | ✔ | ✔ | ✔ | ✔ | — |
| RHEL 9.x | — | ✔ | ✔ | ✔ | — |

Changed

- EKS Distro:

- Cert Manager: v1.15.3 to v1.15.5
- containerd: v1.7.23 to v1.7.25
- kube-vip: v0.8.7 to v0.8.9
- linuxkit: v1.5.2 to v1.5.3
- hook: v0.9.1 to v0.9.2

- New base images with CVE fixes for Amazon Linux 2

Fixed

- Ensure Kubernetes version is always parsed as a string (#9188)
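A fix like #9188 typically guards against a common pitfall: an unquoted version such as 1.30 is a number, not a string, so a generic decoder turns it into 1.3. The Go sketch below reproduces the effect with encoding/json from the standard library to stay self-contained; an unquoted 1.30 in YAML is likewise a float under the YAML core schema. The versionText helper is hypothetical and is not the EKS Anywhere implementation.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// versionText decodes a JSON document generically (untyped) and returns the
// kubernetesVersion field formatted as text.
func versionText(doc string) (string, error) {
	var m map[string]interface{}
	if err := json.Unmarshal([]byte(doc), &m); err != nil {
		return "", err
	}
	// An unquoted number arrives as float64, so 1.30 prints as 1.3.
	return fmt.Sprint(m["kubernetesVersion"]), nil
}

func main() {
	for _, doc := range []string{
		`{"kubernetesVersion": 1.30}`,   // number: trailing zero is lost
		`{"kubernetesVersion": "1.30"}`, // string: text is preserved
	} {
		v, err := versionText(doc)
		fmt.Println(v, err)
	}
}
```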

Supported OS version details

|  | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
| --- | --- | --- | --- | --- | --- |
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.26.2 | ✔ | — | — | — | — |
| RHEL 8.x | ✔ | ✔ | ✔ | ✔ | — |
| RHEL 9.x | — | ✔ | ✔ | ✔ | — |

Changed

Supported OS version details

|  | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
| --- | --- | --- | --- | --- | --- |
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.26.2 | ✔ | — | — | — | — |
| RHEL 8.x | ✔ | ✔ | ✔ | ✔ | — |
| RHEL 9.x | — | ✔ | ✔ | ✔ | — |

Changed

- EKS Distro:

- Helm: v3.16.3 to v3.16.4
- Metallb: v0.14.8 to v0.14.9

- New base images with CVE fixes for Amazon Linux 2

Fixed

- Add kube-vip and an optional list of IP addresses to the CCM node IP address ignore list for the Nutanix provider (#9072)
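An ignore list of this kind is a set-difference filter over a node's reported addresses. The Go sketch below illustrates the idea with hypothetical names and addresses. It assumes the goal is to keep the kube-vip virtual IP (and any extra listed IPs) from being reported as a node's own address, and it is not the Nutanix cloud controller manager's code.

```go
package main

import "fmt"

// filterNodeIPs returns the addresses in nodeIPs that are not in ignore,
// preserving the original order of nodeIPs.
func filterNodeIPs(nodeIPs, ignore []string) []string {
	skip := make(map[string]struct{}, len(ignore))
	for _, ip := range ignore {
		skip[ip] = struct{}{}
	}
	var kept []string
	for _, ip := range nodeIPs {
		if _, found := skip[ip]; !found {
			kept = append(kept, ip)
		}
	}
	return kept
}

func main() {
	kubeVipIP := "10.0.0.100"      // hypothetical control plane VIP
	extra := []string{"10.0.0.200"} // hypothetical user-supplied IPs to ignore
	ignore := append([]string{kubeVipIP}, extra...)
	node := []string{"10.0.0.11", "10.0.0.100", "10.0.0.200"}
	fmt.Println(filterNodeIPs(node, ignore)) // [10.0.0.11]
}
```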

Supported OS version details

|  | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
| --- | --- | --- | --- | --- | --- |
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.26.1 | ✔ | * | — | — | — |
| RHEL 8.x | ✔ | ✔ | ✔ | ✔ | — |
| RHEL 9.x | — | ✔ | ✔ | ✔ | — |

* EKS Anywhere issue regarding deprecation of Bottlerocket bare metal variants

Upgraded

- Bumped EKS-D:

- Kube-rbac-proxy: v0.18.1 to v0.18.2
- Kube-vip: v0.8.6 to v0.8.7

Fixed

- Fix IAM kubeconfig generation in workload clusters #9048

- Update collectors for curated packages namespaces #9044

- Fixed Red Hat image builds for Ansible v10.0.0 and up #4109

Supported OS version details

|  | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
| --- | --- | --- | --- | --- | --- |
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.26.1 | ✔ | * | — | — | — |
| RHEL 8.x | ✔ | ✔ | ✔ | ✔ | — |
| RHEL 9.x | — | ✔ | ✔ | ✔ | — |

* EKS Anywhere issue regarding deprecation of Bottlerocket bare metal variants

Upgraded

- Bumped EKS-D:

- Cluster API Provider vSphere: v1.11.2 to v1.11.3
- Govmomi: v0.44.1 to v0.46.1
- Helm: v3.16.2 to v3.16.3
- Troubleshoot: v0.107.4 to v0.107.5

Supported OS version details

|  | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
| --- | --- | --- | --- | --- | --- |
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.26.1 | ✔ | * | — | — | — |
| RHEL 8.x | ✔ | ✔ | ✔ | ✔ | — |
| RHEL 9.x | — | ✔ | ✔ | ✔ | — |

* EKS Anywhere issue regarding deprecation of Bottlerocket bare metal variants

Added

- Support for Kubernetes v1.31

- Support for configuring the Tinkerbell stack load balancer interface in the cluster spec (#8805)
- GPU support for the Nutanix provider (#8745)
- Support for worker node failure domains on Nutanix (#8837)

Upgraded

- Added EKS-D for 1-31:

- Cert Manager: v1.14.7 to v1.15.3
- Cilium: v1.13.20 to v1.14.12
- Cluster API: v1.7.2 to v1.8.3
- Cluster API Provider CloudStack: v0.4.10-rc.1 to v0.5.0
- Cluster API Provider Nutanix: v1.3.5 to v1.4.0
- Cluster API Provider vSphere: v1.10.4 to v1.11.2
- Cri-tools: v1.30.1 to v1.31.1
- Flux: v2.3.0 to v2.4.0
- Govmomi: v0.37.3 to v0.44.1
- Kind: v0.23.0 to v0.24.0
- Kube-vip: v0.7.0 to v0.8.0
- Tinkerbell Stack:
  - Rufio: v0.3.3 to v0.4.1
  - Hook: v0.8.1 to v0.9.1
- Troubleshoot: v0.93.2 to v0.107.4

Changed

- Use HookOS embedded images in Tinkerbell Templates by default (#8708 and #3471)

Removed

- Support for Kubernetes v1.26

Supported OS version details

|  | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
| --- | --- | --- | --- | --- | --- |
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.20.5 | ✔ | — | — | — | — |
| RHEL 8.x | ✔ | ✔ | ✔ | ✔ | — |
| RHEL 9.x | — | ✔ | ✔ | ✔ | — |

Upgraded

- EKS Distro:

- eks-anywhere-packages: v0.4.4 to v0.4.5
- image-builder: v0.1.39 to v0.1.40
- containerd: v1.7.23 to v1.7.24

Supported OS version details

|  | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
| --- | --- | --- | --- | --- | --- |
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.20.5 | ✔ | — | — | — | — |
| RHEL 8.x | ✔ | ✔ | ✔ | ✔ | — |
| RHEL 9.x | — | ✔ | ✔ | ✔ | — |

Changed

- EKS Distro:

- cloud-provider-nutanix: v0.3.2 to v0.4.1
- kube-rbac-proxy: v0.18.1 to v0.18.2
- kube-vip: v0.8.6 to v0.8.7

Fixed

- Add retries for transient "server doesn't have a Resource type" errors after clusterctl move (#9065)

Supported OS version details

|  | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
| --- | --- | --- | --- | --- | --- |
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.20.5 | ✔ | — | — | — | — |
| RHEL 8.x | ✔ | ✔ | ✔ | ✔ | — |
| RHEL 9.x | — | ✔ | ✔ | ✔ | — |

Changed

- cilium: v1.13.20-eksa.1 to v1.13.21-eksa.5
- cloud-provider-vsphere: v1.29.1 to v1.29.2, v1.30.1 to v1.30.2
- EKS Distro:
- cluster-api-provider-vsphere (CAPV): v1.10.3 to v1.10.4
- etcdadm-bootstrap-provider: v1.0.14 to v1.0.15
- kube-vip: v0.8.4 to v0.8.6

Fixed

- Release init-lock when the owner machine fails to launch (#41)

Supported OS version details

|  | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
| --- | --- | --- | --- | --- | --- |
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.20.5 | ✔ | — | — | — | — |
| RHEL 8.x | ✔ | ✔ | ✔ | ✔ | — |
| RHEL 9.x | — | ✔ | ✔ | ✔ | — |

Changed

- EKS Distro:

- image-builder: v0.1.36 to v0.1.39
- cluster-api-provider-vsphere (CAPV): v1.10.3 to v1.10.4
- etcdadm-controller: v1.0.23 to v1.0.24
- etcdadm-bootstrap-provider: v1.0.13 to v1.0.14
- kube-vip: v0.8.3 to v0.8.4
- containerd: v1.7.22 to v1.7.23
- runc: v1.1.14 to v1.1.15
- local-path-provisioner: v0.0.29 to v0.0.30

Fixed

- Skip hardware validation logic for InPlace upgrades. #8779

- Status reconciliation of etcdadm cluster in etcdadm-controller when etcd-machines are unhealthy. #63

- Skip generating AWS IAM Kubeconfig on cluster upgrade. #8851

Supported OS version details

|  | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
| --- | --- | --- | --- | --- | --- |
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.20.5 | ✔ | — | — | — | — |
| RHEL 8.x | ✔ | ✔ | ✔ | ✔ | — |
| RHEL 9.x | — | ✔ | ✔ | ✔ | — |

Changed

- EKS Distro:

- cilium: v1.13.19 to v1.13.20
- image-builder: v0.1.30 to v0.1.36
- cluster-api-provider-vsphere (CAPV): v1.10.2 to v1.10.3

Fixed

- Fixed support for EFI on RHEL 9 raw builds (#3824)

Supported OS version details

|  | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
| --- | --- | --- | --- | --- | --- |
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.20.5 | ✔ | — | — | — | — |
| RHEL 8.x | ✔ | ✔ | ✔ | ✔ | — |
| RHEL 9.x | — | ✔ | ✔ | ✔ | — |

Changed

- EKS Distro:

- cilium: v1.13.18 to v1.13.19
- containerd: v1.7.21 to v1.7.22
- etcdadm-controller: v1.0.22 to v1.0.23
- kube-vip: v0.8.2 to v0.8.3
- Kube-rbac-proxy: v0.18.0 to v0.18.1

Added

- Enable EFI boot support on RHEL 9 images for bare metal (#3684)

Fixed

- Status reconciliation of etcdadm cluster in etcdadm-controller when etcd-machines are unhealthy (#63)

- Skip hardware validation logic for InPlace upgrades ([#8779](https://github.com/aws/eks-anywhere/pull/8779))

Supported OS version details

|  | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
| --- | --- | --- | --- | --- | --- |
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.20.5 | ✔ | — | — | — | — |
| RHEL 8.x | ✔ | ✔ | ✔ | ✔ | — |
| RHEL 9.x | — | ✔ | ✔ | ✔ | — |

Changed

- EKS Distro:

- Tinkerbell Stack:

- runc: v1.1.13 to v1.1.14
- containerd: v1.7.20 to v1.7.21
- local-path-provisioner: v0.0.28 to v0.0.29

Fixed

- Roll out new nodes when OSImageURL changes in the spec without a Kubernetes version change (#8656)
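The rollout rule in #8656 can be read as a comparison of two machine specs: a change to either the Kubernetes version or OSImageURL requires new nodes. The Go sketch below models only those two fields with a hypothetical machineSpec type. The real EKS Anywhere machine config objects carry many more fields, and the URLs are placeholders.

```go
package main

import "fmt"

// machineSpec is a hypothetical, trimmed-down view of the fields that matter here.
type machineSpec struct {
	KubernetesVersion string
	OSImageURL        string
}

// needsRollout reports whether nodes should be replaced. A changed OSImageURL
// triggers a rollout even when the Kubernetes version stays the same.
func needsRollout(current, desired machineSpec) bool {
	return current.KubernetesVersion != desired.KubernetesVersion ||
		current.OSImageURL != desired.OSImageURL
}

func main() {
	cur := machineSpec{KubernetesVersion: "1.30", OSImageURL: "https://example.com/ubuntu-v1.img"}
	next := cur
	next.OSImageURL = "https://example.com/ubuntu-v2.img"
	fmt.Println(needsRollout(cur, next)) // true
	fmt.Println(needsRollout(cur, cur))  // false
}
```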

Supported OS version details

|  | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
| --- | --- | --- | --- | --- | --- |
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.20.5 | ✔ | — | — | — | — |
| RHEL 8.x | ✔ | ✔ | ✔ | ✔ | — |
| RHEL 9.x | — | ✔ | ✔ | ✔ | — |

Changed

- EKS Distro:

- EKS Anywhere Packages Controller: v0.4.3 to v0.4.4
- Helm: v3.15.3 to v3.15.4

Fixed

- Fix applying the kubelet configuration when a host OS configuration is specified (#8606)

Supported OS version details

|  | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
| --- | --- | --- | --- | --- | --- |
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.20.5 | ✔ | — | — | — | — |
| RHEL 8.x | ✔ | ✔ | ✔ | ✔ | — |
| RHEL 9.x | — | ✔ | ✔ | ✔ | — |

Changed

Added

- Enable the Tinkerbell stack to use dhcprelay instead of running Smee in hostNetwork mode (#8568)

Fixed

- Enable the lb_class_only env var on kube-vip so that it only manages IPs for services whose LoadBalancerClass is set to kube-vip.io/kube-vip-class (#8493)

- Fix nil pointer panic in etcdadm-controller when the apiserver-etcd-client secret is deleted (#62)
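With lb_class_only enabled, kube-vip selects services by their LoadBalancerClass. The Go sketch below mimics that selection using a hypothetical service struct instead of the Kubernetes client libraries. It models the class as an optional *string because spec.loadBalancerClass is optional on a Kubernetes Service; it is an illustration, not kube-vip's code.

```go
package main

import "fmt"

// kubeVipClass is the LoadBalancerClass value named in the release note.
const kubeVipClass = "kube-vip.io/kube-vip-class"

// service is a hypothetical, minimal stand-in for a Kubernetes Service.
type service struct {
	Name              string
	LoadBalancerClass *string
}

// managedByKubeVip returns the names of services whose LoadBalancerClass is
// exactly kube-vip.io/kube-vip-class. Services with no class, or with any
// other class, are ignored.
func managedByKubeVip(svcs []service) []string {
	var names []string
	for _, s := range svcs {
		if s.LoadBalancerClass != nil && *s.LoadBalancerClass == kubeVipClass {
			names = append(names, s.Name)
		}
	}
	return names
}

func main() {
	kv, other := kubeVipClass, "example.com/other-lb"
	svcs := []service{
		{Name: "web", LoadBalancerClass: &kv},
		{Name: "legacy"},
		{Name: "external", LoadBalancerClass: &other},
	}
	fmt.Println(managedByKubeVip(svcs)) // [web]
}
```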

Changed

- kube-vip: v0.8.1 to v0.8.2
- cilium: v1.13.16 to v1.13.18
- cert-manager: v1.14.5 to v1.14.7
- etcdadm-controller: v1.0.21 to v1.0.22
- local-path-provisioner: v0.0.27 to v0.0.28

Supported OS version details

|  | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
| --- | --- | --- | --- | --- | --- |
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.20.0 | ✔ | — | — | — | — |
| Bottlerocket 1.19.4 | — | * | — | — | — |
| RHEL 8.x | ✔ | ✔ | ✔ | ✔ | — |
| RHEL 9.x | — | ✔ | ✔ | ✔ | — |

* EKS Anywhere issue regarding deprecation of Bottlerocket bare metal variants

Changed

Fixed

- Fix panic when datacenter obj is not found (#8495)

- Fix subnet validation bug for Nutanix provider (#8499)

- Fix machine config panic when ref object not found (#8533)

Supported OS version details

|  | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
| --- | --- | --- | --- | --- | --- |
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.20.0 | ✔ | — | — | — | — |
| Bottlerocket 1.19.4 | — | * | — | — | — |
| RHEL 8.x | ✔ | ✔ | ✔ | ✔ | — |
| RHEL 9.x | — | ✔ | ✔ | ✔ | — |

* EKS Anywhere issue regarding deprecation of Bottlerocket bare metal variants

Changed

Fixed

- Fix cluster status reconciliation for control plane and worker nodes (#8455)

Supported OS version details

|  | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
| --- | --- | --- | --- | --- | --- |
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.20.0 | ✔ | — | — | — | — |
| Bottlerocket 1.19.4 | — | * | — | — | — |
| RHEL 8.x | ✔ | ✔ | ✔ | ✔ | — |
| RHEL 9.x | — | ✔ | ✔ | ✔ | — |

* EKS Anywhere issue regarding deprecation of Bottlerocket bare metal variants

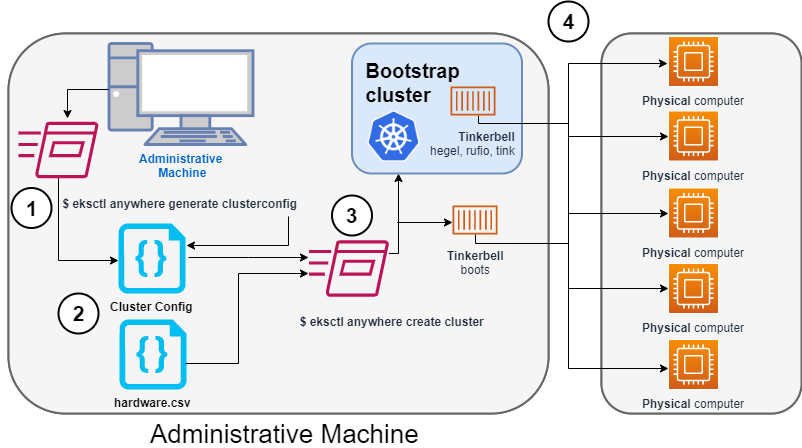

Added

- Support for Kubernetes v1.30

- Support for configuring kube-apiserver flags in cluster spec (#7755)
- Red Hat 9 support for Bare Metal (#3032)
- Support for configuring kubelet settings in cluster spec (#7708)
- Support for control plane failure domains on Nutanix (#8192)

Changed

- The generate cluster config command includes OSImageURL in Tinkerbell machine config objects (#8226)

- Added EKS-D for 1-30:

- Cilium: v1.13.9 to v1.13.16
- Cluster API: v1.6.1 to v1.7.2
- Cluster API Provider vSphere: v1.8.5 to v1.10.0
- Cluster API Provider Nutanix: v1.2.3 to v1.3.5
- Flux: v2.2.3 to v2.3.0
- Kube-vip: v0.7.0 to v0.8.0
- Image-builder: v0.1.24 to v0.1.26
- Kind: v0.22.0 to v0.23.0
- Etcdadm Controller: v1.0.17 to v1.0.21
- Tinkerbell Stack:
  - Cluster API Provider Tinkerbell: v0.4.0 to v0.5.3
  - Hegel: v0.10.1 to v0.12.0
  - Rufio: afd7cd82fa08dae8f9f3ffac96eb030176f3abbd to v0.3.3
  - Tink: v0.8.0 to v0.10.0
  - Boots/Smee: v0.8.1 to v0.11.0
  - Hook: 9d54933a03f2f4c06322969b06caa18702d17f66 to v0.8.1
  - Charts: v0.4.5

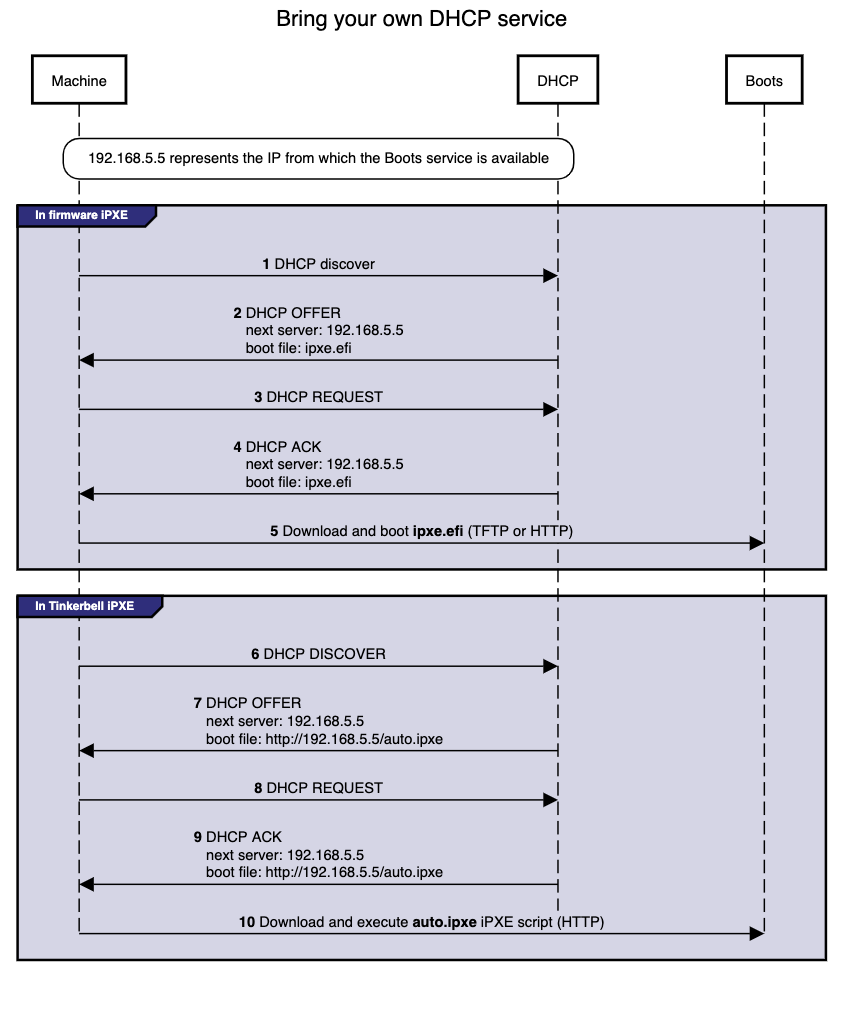

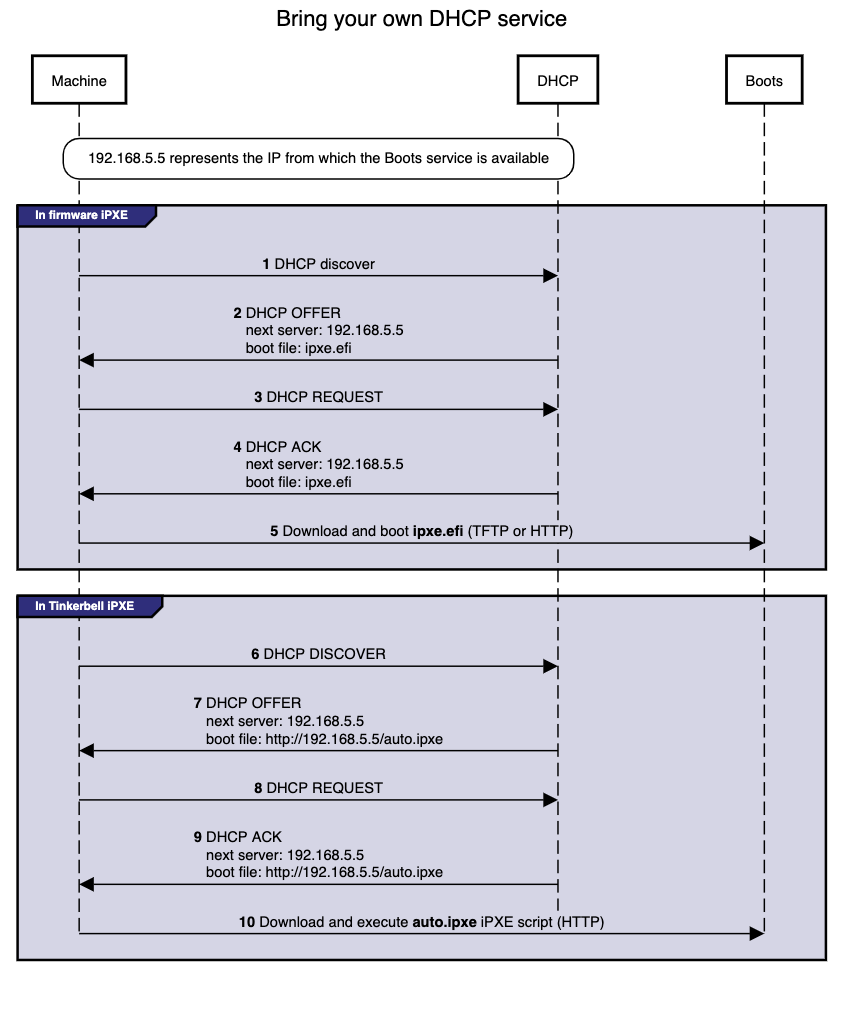

Note: With this version upgrade, the upstream Tinkerbell community has renamed the Boots service to Smee. Any reference to Boots or Smee in our docs refers to the same service.

Removed

- Support for Kubernetes v1.25

- Support for certain curated packages CLI commands (#8240)

Fixed

- CLI commands for packages to honor the registry mirror setup in cluster spec (#8026)

Supported OS version details

|  | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
| --- | --- | --- | --- | --- | --- |
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.20.5 | ✔ | * | — | — | — |
| RHEL 8.x | ✔ | ✔ | ✔ | ✔ | — |
| RHEL 9.x | — | — | ✔ | ✔ | — |

* EKS Anywhere issue regarding deprecation of Bottlerocket bare metal variants

Upgraded

- EKS Distro:

- Image-builder: v0.1.36 to v0.1.39 (CVE-2024-9594)
- containerd: v1.7.22 to v1.7.23
- Cilium: v1.13.19 to v1.13.20
- etcdadm-controller: v1.0.23 to v1.0.24
- etcdadm-bootstrap-provider: v1.0.13 to v1.0.14
- local-path-provisioner: v0.0.29 to v0.0.30
- runc: v1.1.14 to v1.1.15

Fixed

- Skip hardware validation logic for InPlace upgrades. #8779

- Status reconciliation of etcdadm cluster in etcdadm-controller when etcd-machines are unhealthy. #63

- Skip generating AWS IAM Kubeconfig on cluster upgrade. #8851

Supported OS version details

|  | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
| --- | --- | --- | --- | --- | --- |
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.20.0 | ✔ | * | — | — | — |
| RHEL 8.x | ✔ | ✔ | ✔ | ✔ | — |
| RHEL 9.x | — | — | ✔ | ✔ | — |

* EKS Anywhere issue regarding deprecation of Bottlerocket bare metal variants

Upgraded

- EKS Distro:

- EKS Anywhere Packages: v0.4.3 to v0.4.4
- Cilium: v1.13.18 to v1.13.19
- containerd: v1.7.20 to v1.7.22
- runc: v1.1.13 to v1.1.14
- local-path-provisioner: v0.0.28 to v0.0.29
- etcdadm-controller: v1.0.22 to v1.0.23

- New base images with CVE fixes for Amazon Linux 2

Supported OS version details

|  | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
| --- | --- | --- | --- | --- | --- |
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.19.2 | ✔ | * | — | — | — |
| RHEL 8.x | ✔ | ✔ | ✔ | ✔ | — |
| RHEL 9.x | — | — | ✔ | ✔ | — |

* EKS Anywhere issue regarding deprecation of Bottlerocket bare metal variants

Upgraded

Supported OS version details

|  | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
| --- | --- | --- | --- | --- | --- |
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.19.2 | ✔ | * | — | — | — |
| RHEL 8.x | ✔ | ✔ | ✔ | ✔ | — |
| RHEL 9.x | — | — | ✔ | ✔ | — |

* EKS Anywhere issue regarding deprecation of Bottlerocket bare metal variants

Upgraded

- Kube-rbac-proxy: v0.16.0 to v0.16.1
- Containerd: v1.7.13 to v1.7.20
- Kube VIP: v0.7.0 to v0.7.2
- Helm: v3.14.3 to v3.14.4
- Cluster API Provider vSphere: v1.8.5 to v1.8.10
- Runc: v1.1.12 to v1.1.13
- EKS Distro:

Changed

- Added additional validation before marking controlPlane and workers ready #8455

Fixed

- Fix panic when datacenter obj is not found #8494

Supported OS version details

|  | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
| --- | --- | --- | --- | --- | --- |
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.19.2 | ✔ | * | — | — | — |
| RHEL 8.x | ✔ | ✔ | ✔ | ✔ | — |
| RHEL 9.x | — | — | ✔ | ✔ | — |

* EKS Anywhere issue regarding deprecation of Bottlerocket bare metal variants

Upgraded

- Cluster API Provider Nutanix: v1.3.3 to v1.3.5
- Image Builder: v0.1.24 to v0.1.26

- EKS Distro:

Changed

- Updated cluster status reconciliation logic for worker node groups with autoscaling configuration #8254

- Added logic to apply new hardware on bare metal cluster upgrades #8288

Fixed

- Fixed a bug where the installer did not create the CCM secret for Nutanix workload clusters #8191

- Fixed upgrade workflow for registry mirror certificates in EKS Anywhere packages #7114

Supported OS version details

|  | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
| --- | --- | --- | --- | --- | --- |
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.19.2 | ✔ | * | — | — | — |
| RHEL 8.x | ✔ | ✔ | ✔ | ✔ | — |
| RHEL 9.x | — | — | ✔ | ✔ | — |

* EKS Anywhere issue regarding deprecation of Bottlerocket bare metal variants

Changed

- Backporting dependency bumps to fix vulnerabilities #8118

- Upgraded EKS-D:

Fixed

- Fixed cluster directory being created with root ownership #8120

Supported OS version details

|  | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
| --- | --- | --- | --- | --- | --- |
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.19.2 | ✔ | * | — | — | — |
| RHEL 8.x | ✔ | ✔ | ✔ | ✔ | — |
| RHEL 9.x | — | — | ✔ | ✔ | — |

* EKS Anywhere issue regarding deprecation of Bottlerocket bare metal variants

Changed

- Upgraded EKS Anywhere Packages from v0.4.2 to v0.4.3

Fixed

- Fixed registry mirror with authentication for EKS Anywhere packages

Supported OS version details

|  | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
| --- | --- | --- | --- | --- | --- |
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.19.2 | ✔ | * | — | — | — |
| RHEL 8.x | ✔ | ✔ | ✔ | ✔ | — |
| RHEL 9.x | — | — | ✔ | ✔ | — |

* EKS Anywhere issue regarding deprecation of Bottlerocket bare metal variants

Changed

- Support Docs site for penultimate EKS-A version #8010

- Update Ubuntu 22.04 ISO URLs to latest stable release #3114

- Upgraded EKS-D:

Fixed

- Added processor for Tinkerbell Template Config #7816

- Added nil check for eksa-version when setting etcd url #8018

- Fixed registry mirror secret credentials set to empty #7933

Supported OS version details

|  | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
| --- | --- | --- | --- | --- | --- |
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.19.2 | ✔ | * | — | — | — |
| RHEL 8.x | ✔ | ✔ | ✔ | ✔ | — |
| RHEL 9.x | — | — | ✔ | ✔ | — |

* EKS Anywhere issue regarding deprecation of Bottlerocket bare metal variants

Changed

- Updated helm to v3.14.3 #3050

Fixed

Supported OS version details

|  | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
| --- | --- | --- | --- | --- | --- |
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.19.2 | ✔ | * | — | — | — |
| RHEL 8.x | ✔ | ✔ | ✔ | ✔ | — |
| RHEL 9.x | — | — | ✔ | ✔ | — |

* EKS Anywhere issue regarding deprecation of Bottlerocket bare metal variants

Changed

- Update CAPC to 0.4.10-rc1 #3105

- Upgraded EKS-D:

Fixed

- Fix Tinkerbell action image URIs when using a registry mirror with a proxy cache.

Supported OS version details

|  | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
| --- | --- | --- | --- | --- | --- |
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.19.2 | ✔ | * | — | — | — |
| RHEL 8.x | ✔ | ✔ | ✔ | ✔ | — |
| RHEL 9.x | — | — | ✔ | ✔ | — |

* EKS Anywhere issue regarding deprecation of Bottlerocket bare metal variants

Changed

Added

- Preflight check for upgrading management components that ensures the management components are at most one EKS Anywhere minor version ahead of the EKS Anywhere version of the cluster components #7800.
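The preflight rule in #7800 is a minor-version skew check. The Go sketch below is a hypothetical implementation of that rule. It parses versions such as v0.19.0, requires equal major versions (an assumption of this sketch), and rejects management components that are more than one minor version ahead of the cluster components. The function names are illustrative and are not EKS Anywhere APIs.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// parseMajorMinor extracts the major and minor numbers from a version such as
// "v0.19.0". Patch and pre-release parts are ignored.
func parseMajorMinor(v string) (int, int, error) {
	parts := strings.SplitN(strings.TrimPrefix(v, "v"), ".", 3)
	if len(parts) < 2 {
		return 0, 0, fmt.Errorf("invalid version %q", v)
	}
	major, err := strconv.Atoi(parts[0])
	if err != nil {
		return 0, 0, fmt.Errorf("invalid major in %q: %w", v, err)
	}
	minor, err := strconv.Atoi(parts[1])
	if err != nil {
		return 0, 0, fmt.Errorf("invalid minor in %q: %w", v, err)
	}
	return major, minor, nil
}

// validateManagementSkew fails when the management components are more than
// one minor version ahead of the cluster components.
func validateManagementSkew(mgmt, cluster string) error {
	mMaj, mMin, err := parseMajorMinor(mgmt)
	if err != nil {
		return err
	}
	cMaj, cMin, err := parseMajorMinor(cluster)
	if err != nil {
		return err
	}
	if mMaj != cMaj {
		return fmt.Errorf("major versions differ: %s vs %s", mgmt, cluster)
	}
	if mMin-cMin > 1 {
		return fmt.Errorf("management components %s are more than one minor version ahead of cluster components %s", mgmt, cluster)
	}
	return nil
}

func main() {
	fmt.Println(validateManagementSkew("v0.19.0", "v0.18.5"))        // <nil>
	fmt.Println(validateManagementSkew("v0.20.0", "v0.18.5") != nil) // true
}
```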

Fixed

- EKS Anywhere package bundles ending with 152, 153, 154, and 157 have image tag issues, which have been resolved in bundle 158. For example, for Kubernetes v1.29 the fixed bundle is public.ecr.aws/eks-anywhere/eks-anywhere-packages-bundles:v1-29-158

- Fixed InPlace custom resources being created again after a successful node upgrade due to a delay in objects reaching the client cache #7779.

- Fixed #7623 by encoding the basic auth credentials to base64 when using them in templates #7829.

- Added a fix for an error that may occur while upgrading management components: if the cluster object is modified by another process before it is applied, a conflict error is thrown that prompts a retry.
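Two of the fixes above come down to simple string construction, sketched below in Go under stated assumptions. basicAuthToken produces base64("username:password"), the usual HTTP Basic authentication form; that this is exactly what #7829 places in templates is an assumption, not something this note confirms. packagesBundleRef rebuilds a bundle reference following the pattern of the v1-29-158 example. Both helper names are hypothetical.

```go
package main

import (
	"encoding/base64"
	"fmt"
	"strings"
)

// basicAuthToken encodes credentials in the HTTP Basic authentication form:
// base64 of "username:password".
func basicAuthToken(username, password string) string {
	return base64.StdEncoding.EncodeToString([]byte(username + ":" + password))
}

// packagesBundleRef builds a bundle image reference following the example
// pattern above: Kubernetes v1.29 and bundle 158 give ...:v1-29-158.
func packagesBundleRef(k8sVersion string, bundle int) string {
	tag := strings.ReplaceAll(strings.TrimPrefix(k8sVersion, "v"), ".", "-")
	return fmt.Sprintf("public.ecr.aws/eks-anywhere/eks-anywhere-packages-bundles:v%s-%d", tag, bundle)
}

func main() {
	fmt.Println(basicAuthToken("user", "pass")) // dXNlcjpwYXNz
	fmt.Println(packagesBundleRef("v1.29", 158))
}
```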

Supported OS version details

|  | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
| --- | --- | --- | --- | --- | --- |
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.19.0 | ✔ | * | — | — | — |
| RHEL 8.x | ✔ | ✔ | ✔ | ✔ | — |
| RHEL 9.x | — | — | ✔ | ✔ | — |

* EKS Anywhere issue regarding deprecation of Bottlerocket bare metal variants

Added

- Support for Kubernetes v1.29

- Support for in-place EKS Anywhere and Kubernetes version upgrades on Bare Metal clusters

- Support for horizontally scaling etcd count in clusters with external etcd deployments (#7127)
- External etcd support for Nutanix (#7550)
- Etcd encryption for Nutanix (#7565)
- Nutanix Cloud Controller Manager integration (#7534)
- Enable image signing for all images used in cluster operations
- Red Hat 9 support for CloudStack (#2842)
- New upgrade management-components command, which upgrades management components independently of cluster components (#7238)
- New upgrade plan management-components command, which provides new release versions for the next management components upgrade (#7447)
- Make maxUnhealthy count configurable for control plane and worker machines (#7281)

Changed

- Unification of controller and CLI workflows for cluster lifecycle operations such as create, upgrade, and delete

- Perform CAPI backup on workload cluster during upgrade (#7364)

- Extend maxSurge and maxUnavailable configuration support to all providers

- Upgraded Cilium to v1.13.19

- Upgraded EKS-D:

- Cluster API Provider AWS Snow: v0.1.26 to v0.1.27
- Cluster API: v1.5.2 to v1.6.1
- Cluster API Provider vSphere: v1.7.4 to v1.8.5
- Cluster API Provider Nutanix: v1.2.3 to v1.3.1
- Flux: v2.0.0 to v2.2.3
- Kube-vip: v0.6.0 to v0.7.0
- Image-builder: v0.1.19 to v0.1.24
- Kind: v0.20.0 to v0.22.0

Removed

Fixed

- Validate OCI namespaces for registry mirror on Bottlerocket (#7257)

- Make Cilium reconciler use provider namespace when generating network policy (#7705)

- EKS Anywhere v0.18.7 Admin AMI with CVE fixes for Amazon Linux 2

Supported Operating Systems

|  | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
| --- | --- | --- | --- | --- | --- |
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.19.0 | ✔ | ✔ | — | — | — |
| RHEL 8.7 | ✔ | ✔ | ✔ | ✔ | — |
| RHEL 9.x | — | — | ✔ | — | — |

- EKS Anywhere v0.18.6 Admin AMI with CVE fixes for runc

- New base images with CVE fixes for Amazon Linux 2

- Bottlerocket: v1.15.1 to 1.19.0

- runc: v1.1.10 to v1.1.12 (CVE-2024-21626)

- containerd: v1.7.11 to v1.7.12

Supported Operating Systems

|  | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
| --- | --- | --- | --- | --- | --- |
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.19.0 | ✔ | ✔ | — | — | — |
| RHEL 8.x | ✔ | ✔ | ✔ | ✔ | — |
| RHEL 9.x | — | — | ✔ | — | — |

- New EKS Anywhere Admin AMI with CVE fixes for Amazon Linux 2

- New base images with CVE fixes for Amazon Linux 2

Supported Operating Systems

|  | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
| --- | --- | --- | --- | --- | --- |
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.15.1 | ✔ | ✔ | — | — | — |
| RHEL 8.7 | ✔ | ✔ | ✔ | ✔ | — |
| RHEL 9.x | — | — | ✔ | — | — |

Feature

- Nutanix: Enable api-server audit logging for Nutanix (#2664)

Bug

- CNI reconciler now properly pulls images from registry mirror instead of public ECR in airgapped environments: #7170

Supported Operating Systems

|  | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
| --- | --- | --- | --- | --- | --- |
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.15.1 | ✔ | ✔ | — | — | — |
| RHEL 8.7 | ✔ | ✔ | ✔ | ✔ | — |
| RHEL 9.x | — | — | ✔ | — | — |

Fixed

- Etcdadm: Renew client certificates when nodes roll over (etcdadm/#56)
- Include DefaultCNIConfigured condition in Cluster Ready status except when Skip Upgrades is enabled (#7132)
- EKS Distro (Kubernetes): v1.25.15 to v1.25.16, v1.26.10 to v1.26.11, v1.27.7 to v1.27.8, v1.28.3 to v1.28.4
- Etcdadm Controller: v1.0.15 to v1.0.16
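The readiness rule in #7132 amounts to a conditional aggregation of status conditions. The Go sketch below uses a hypothetical condition type and treats skipUpgrades as the Skip Upgrades setting named in the release note. It illustrates the rule only and is not the EKS Anywhere controller code.

```go
package main

import "fmt"

// condition is a hypothetical, simplified cluster status condition.
type condition struct {
	Type   string
	Status bool
}

// clusterReady reports readiness: every condition must be true, except that
// DefaultCNIConfigured is ignored when skipUpgrades is enabled.
func clusterReady(conds []condition, skipUpgrades bool) bool {
	for _, c := range conds {
		if c.Type == "DefaultCNIConfigured" && skipUpgrades {
			continue
		}
		if !c.Status {
			return false
		}
	}
	return true
}

func main() {
	conds := []condition{
		{Type: "ControlPlaneReady", Status: true},
		{Type: "DefaultCNIConfigured", Status: false},
	}
	fmt.Println(clusterReady(conds, false)) // false
	fmt.Println(clusterReady(conds, true))  // true
}
```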

Supported Operating Systems

|  | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
| --- | --- | --- | --- | --- | --- |
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.15.1 | ✔ | ✔ | — | — | — |
| RHEL 8.7 | ✔ | ✔ | ✔ | ✔ | — |
| RHEL 9.x | — | — | ✔ | — | — |

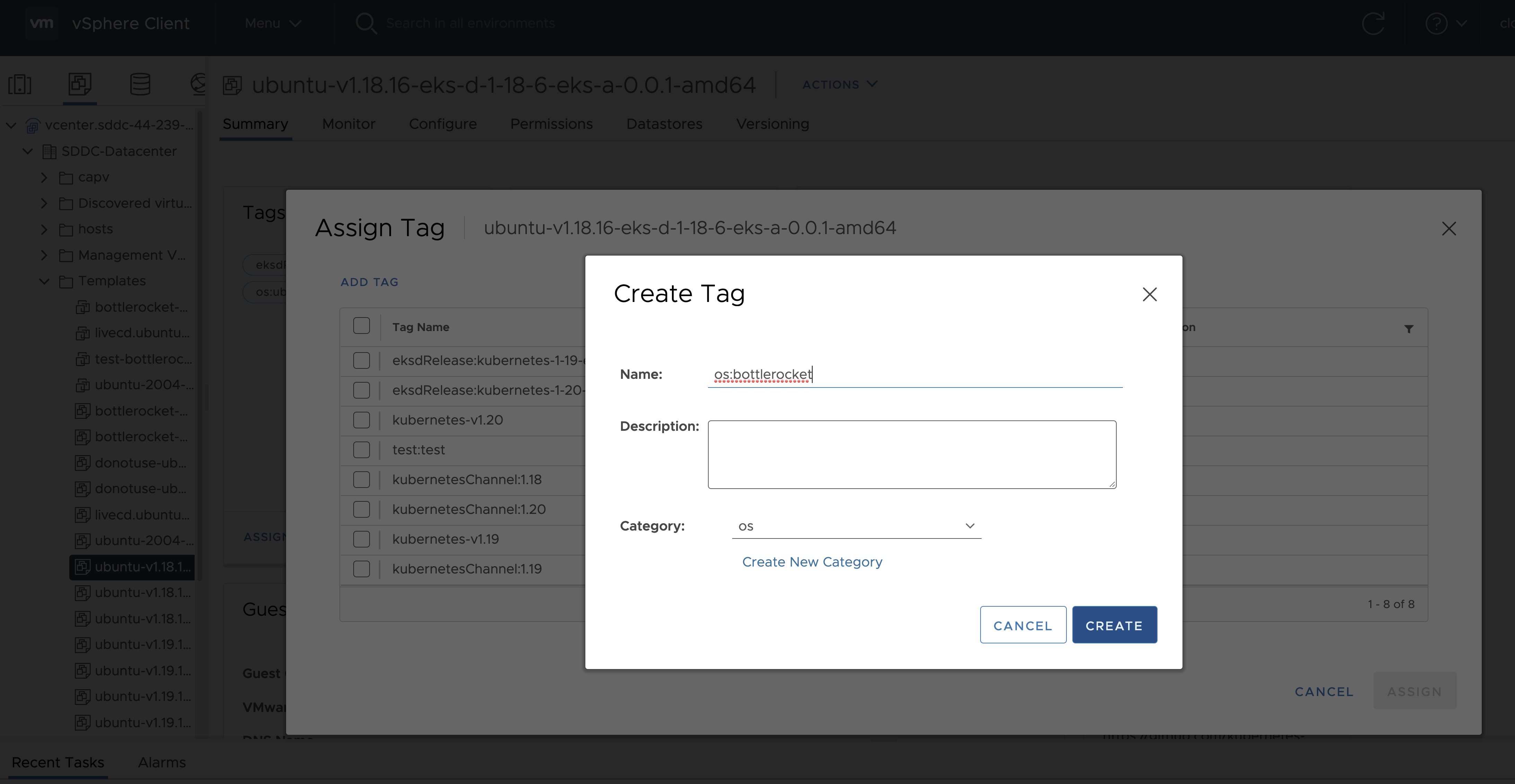

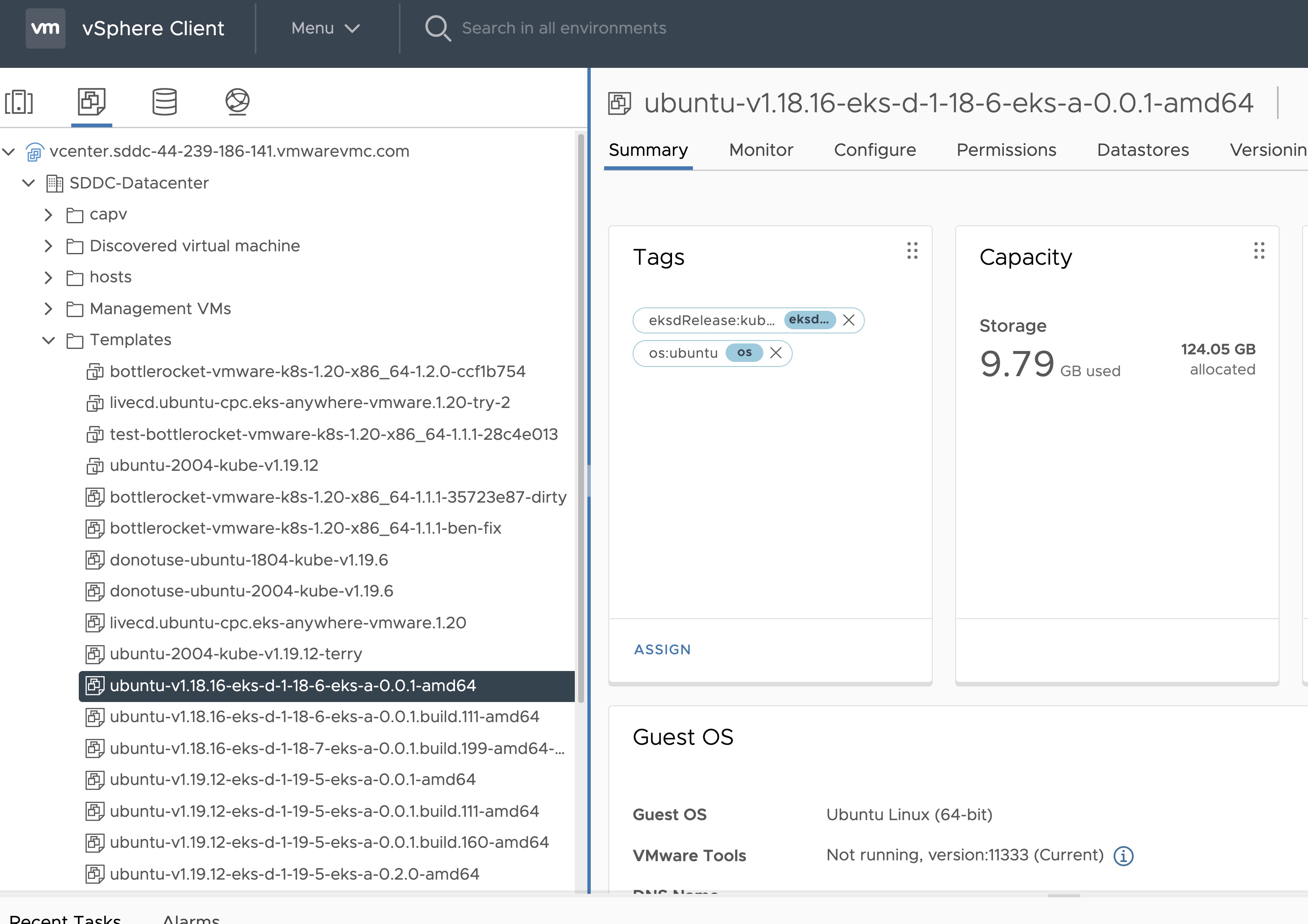

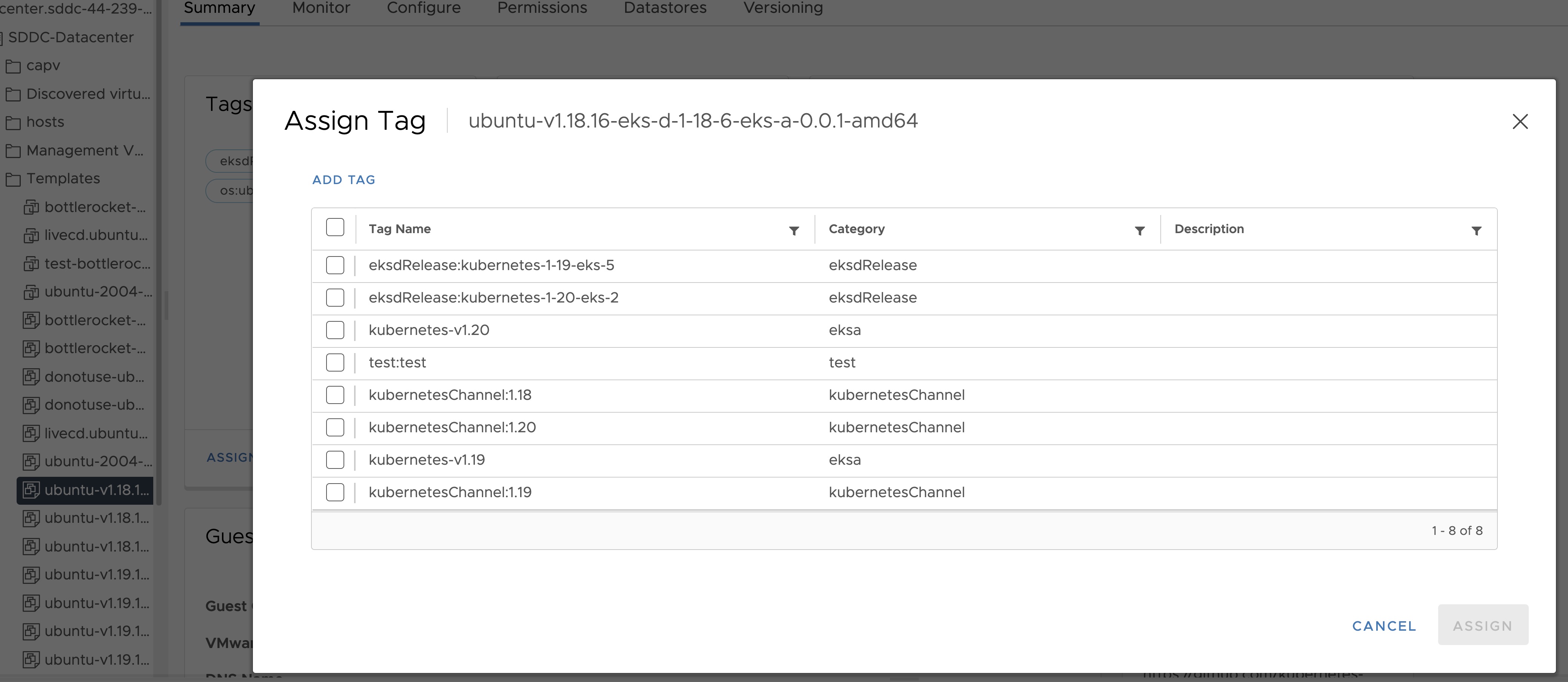

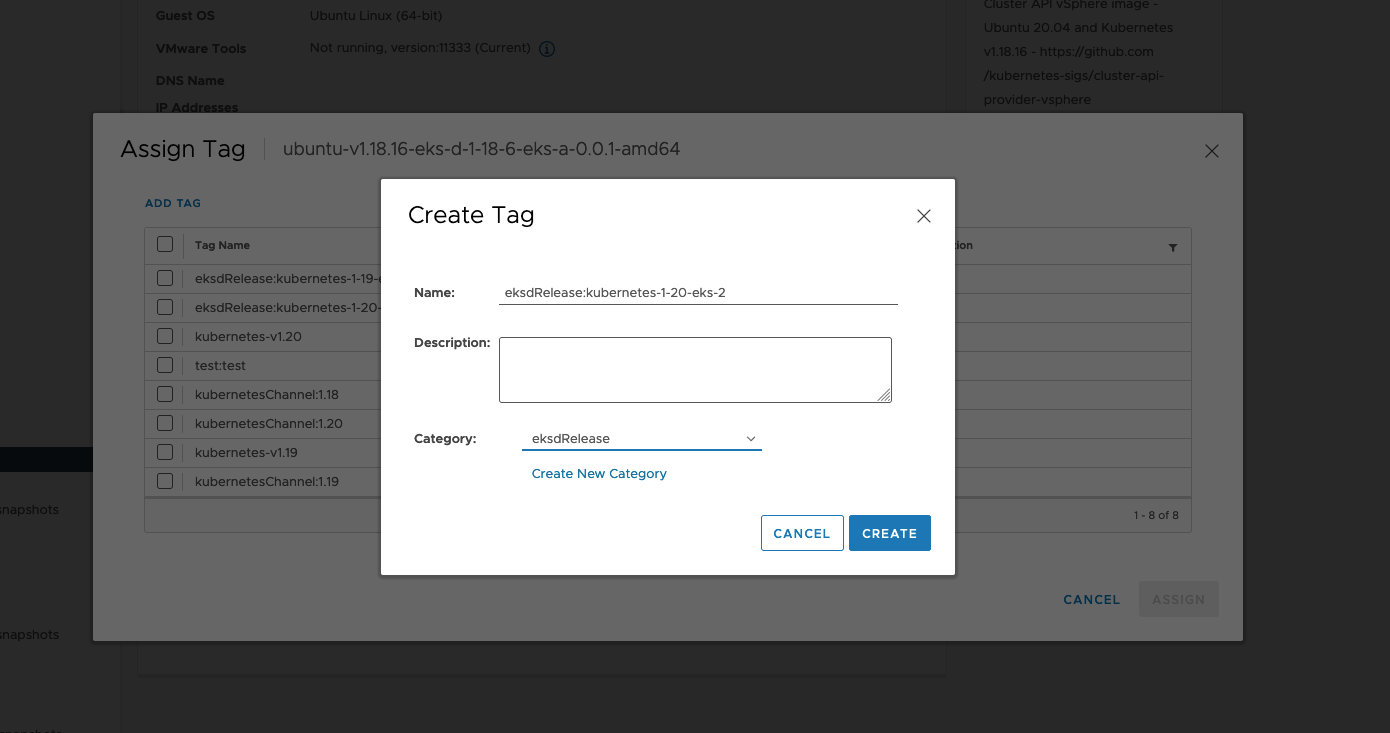

Fixed

- Image Builder: Correctly parse no_proxy inputs when both Red Hat Satellite and a proxy are used in image-builder (#2664)

- vSphere: Fix template tag validation by specifying the full template path (#6437)

- Bare Metal: Skip kube-vip deployment when TinkerbellDatacenterConfig.skipLoadBalancerDeployment is set to true (#6990)

Other

- Security: Patch incorrect conversion between uint64 and int64 (#7048)

- Security: Fix incorrect regex for matching curated package registry URL (#7049)

- Security: Patch malicious tarballs directory traversal vulnerability (#7057)

Supported Operating Systems

|  | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
| --- | --- | --- | --- | --- | --- |
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.15.1 | ✔ | ✔ | — | — | — |
| RHEL 8.7 | ✔ | ✔ | ✔ | ✔ | — |
| RHEL 9.x | — | — | ✔ | — | — |

- EKS Distro (Kubernetes): v1.25.14 to v1.25.15, v1.26.9 to v1.26.10, v1.27.6 to v1.27.7, v1.28.2 to v1.28.3
- Etcdadm Bootstrap Provider: v1.0.9 to v1.0.10
- Etcdadm Controller: v1.0.14 to v1.0.15
- Cluster API Provider CloudStack: v0.4.9-rc7 to v0.4.9-rc8
- EKS Anywhere Packages Controller: v0.3.12 to v0.3.13

Bug

- Bare Metal: Ensure the Tinkerbell stack continues to run on management clusters when worker nodes are scaled to 0 (#2624)

Supported Operating Systems

|  | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
| --- | --- | --- | --- | --- | --- |
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.15.1 | ✔ | ✔ | — | — | — |
| RHEL 8.7 | ✔ | ✔ | ✔ | ✔ | — |
| RHEL 9.x | — | — | ✔ | — | — |

Supported OS version details

|  | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
| --- | --- | --- | --- | --- | --- |
| Ubuntu | 20.04 | 20.04 | 20.04 | Not supported | 20.04 |
|  | 22.04 | 22.04 | 22.04 | Not supported | Not supported |
| Bottlerocket | 1.15.1 | 1.15.1 | Not supported | Not supported | Not supported |
| RHEL | 8.7 | 8.7 | 9.x, 8.7 | 8.7 | Not supported |

Added

- Etcd encryption for CloudStack and vSphere: #6557

- Generate TinkerbellTemplateConfig command: #3588

- Support for modular Kubernetes version upgrades with bare metal: #6735

- OSImageURL added to Tinkerbell Machine Config

- Bare metal out-of-band webhook: #5738

- Support for Kubernetes v1.28

- Support for air gapped image building: #6457

- Support for RHEL 8 and RHEL 9 for Nutanix provider: #6822

- Support proxy configuration in Red Hat image building #2466

- Support Red Hat Satellite in image building #2467

Changed

- KinD-less upgrades: #6622
  - Management cluster upgrades no longer require a local bootstrap cluster.
  - The control plane of management clusters can be upgraded through the API. Previously, only changes to the worker nodes were allowed.

- Increased control over upgrades by separating external etcd reconciliation from control plane nodes: #6496

- Upgraded Cilium to 1.12.15

- Upgraded EKS-D:

- Cluster API Provider CloudStack: v0.4.9-rc6 to v0.4.9-rc7

- Cluster API Provider AWS Snow: v0.1.26 to v0.1.27

- Upgraded CAPI to v1.5.2

Removed

- Support for Kubernetes v1.23

Fixed

- Fail on eksctl anywhere upgrade cluster plan -f: #6716

- Error out when management kubeconfig is not present for workload cluster operations: #6501

- Empty vSphereMachineConfig users fail CLI upgrade: #5420

- CLI stalls on upgrade with Flux GitOps: #6453

Bug

- CNI reconciler now properly pulls images from registry mirror instead of public ECR in airgapped environments: #7170

- Waiting for control plane to be fully upgraded: #6764

Other

- Check for k8s version in the CloudStack template name: #7130

Supported Operating Systems

|  | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
| --- | --- | --- | --- | --- | --- |
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.14.3 | ✔ | ✔ | — | — | — |
| RHEL 8.7 | ✔ | ✔ | — | ✔ | — |

- Cluster API Provider CloudStack: v0.4.9-rc7 to v0.4.9-rc8

Supported Operating Systems

|  | vSphere | Bare Metal | Nutanix | CloudStack | Snow |
| --- | --- | --- | --- | --- | --- |
| Ubuntu 20.04 | ✔ | ✔ | ✔ | — | ✔ |
| Ubuntu 22.04 | ✔ | ✔ | ✔ | — | — |
| Bottlerocket 1.14.3 | ✔ | ✔ | — | — | — |
| RHEL 8.7 | ✔ | ✔ | — | ✔ | — |

Supported OS version details

|

vSphere |

Bare Metal |

Nutanix |

CloudStack |

Snow |

| Ubuntu |

20.04 |

20.04 |

20.04 |

Not supported |

20.04 |

|

22.04 |

22.04 |

22.04 |

Not supported |

Not supported |

| Bottlerocket |

1.14.3 |

1.14.3 |